Sometimes the whole process is collapsed into three main stages (production, transmission and reception), and this in fact will be the usual use of these terms below. Production will refer to the whole process of making a linguistic message ready for transmission. Reception will refer to the whole process that takes place once a linguistic message has been transmitted.

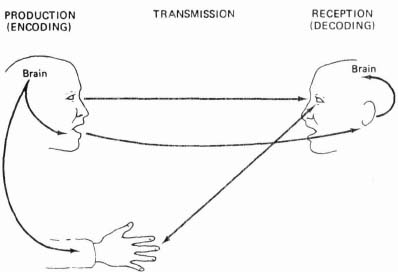

This model can now be applied directly to the various stages involved in human communication. In some cases, however, it will prove useful to break down the steps in the process into ‘substeps’, in order to make the model more usable for the investigation of linguistic disability. Each step in the communication chain will require detailed study, but to begin with let us look at the process in outline, to provide a general perspective. A representation of the communication chain is given in Figure 3.1. For communication to take place, a minimum of two human beings is required, and these are symbolized by the facing heads. Communication may proceed in either direction.

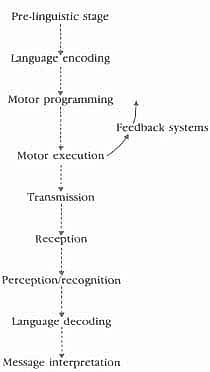

Figure 3.2 takes this general approach a stage further, introducing some additional features. It is this level of detail that is needed to discuss the physical basis of the communication chain (Chapter 4) and how this chain might be disrupted, resulting in disorders of communication (Chapter 5). This model is a development of the seven-step process in that several substeps of encoding and decoding are recognized. It also shows that communication does not just involve sending information in a linear way from one individual to another. Instead, feedback loops are built into the process, which allow the message-sender to monitor the correctness and success of the message. This can include identifying errors in the encoding process or monitoring the receiver’s reactions for signs of misunderstanding or misinterpretation (see further, p. 74).

The Pre-linguistic Stage

The communication chain begins with an information source which is capable of constructing a message. These messages can be conceived of as ideas or thoughts, which we may, or may not, wish to communicate to others. Some of these thoughts will not be made public through the use of language and they will remain a part of our private mental lives. It is an important part of our social and interactional knowledge (sometimes called ‘social cognition’) to know which thoughts can be communicated to others, and which should remain private. Failure to acquire this social knowledge, with the resulting production of utterances which are untimely or inappropriate in their content, is likely to lead to interactional breakdown and social ostracism. Conversely, a failure to make ideas public when they are relevant and timely is also likely to lead to interactional failure. Individuals with such problems are likely to experience difficulties in forming and maintaining relationships, and may often find themselves in conflict with many of the major institutions of society.

We have termed the first stage in the communication chain the ‘pre-linguistic stage’. This suggests that the ideas and thoughts entertained by the information source exist independently of language. A second separate step, as we shall see, gives the idea a linguistic shape. This notion of thought without language is controversial and is undoubtedly an oversimplification, and we are confronted here with the thorny issue of the relation between language and thought. Can we think without language? The answer to this question depends on the kind of activity involved. If we reflect on our thinking, we have some awareness of highly conscious forms of thinking. When given a visual puzzle to complete, we have the sensation of thinking in shapes and colours, and mentally performing operations such as the rotation of images. The feelings we have in listening to music are not as a rule possible to ‘put into words’ and do not seem to be language-dependent. It would seem therefore an uncontroversial conclusion that we have different modes of conscious thought available to us. But, on the other hand, there seem to be a large number of thoughts which do seem to critically involve language. It is not usually possible to work out the stages of a moral or scientific problem without formulating the problem linguistically – and sometimes it proves essential to speak the problem aloud, or write it down, before our thinking can be clarified. Some forms of characteristically human thought therefore do seem to involve language. But what of less conscious modes of thought, perhaps akin to the state of ‘day-dreaming’, where we are clear that we were thinking about something, but where the ‘something’ is intangible and the form of the thought is unclear? All of this suggests a notion of pre-linguistic thoughts and ideas, and a precursor stage to linguistic encoding.
It may well be that encoding thoughts into language not only allows the opportunity to make them public, but even when they are not spoken aloud, language form gives them a permanence that pre-linguistic representation lacks.5

Before an idea or thought can be entertained, there must be knowledge of the thing that is to be thought about. Psychologists make a distinction between knowledge stored in autobiographical (or episodic) memory, and in semantic memory. Autobiographical memory is knowledge of specific events and their time-reference (i.e. knowledge of when, approximately, they occurred), while semantic memory is our knowledge store of facts, words and concepts. The size of information chunks in semantic memory varies from that of the individual concept, such as the meaning of ‘two’, to much larger structures such as ‘how to behave at a funeral’. These larger knowledge structures are sometimes termed ‘scripts’ or ‘schemas’. The difference between semantic and autobiographical/episodic knowledge is best displayed in trying to answer the following two questions: ‘when did you first go to London?’ and ‘when did you first learn that London was the capital of the United Kingdom?’ The first question may be relatively easy to answer as it addresses autobiographical memory, which is time-referenced, whilst the second is very difficult to answer as it addresses semantic memory, which is not so strongly time-referenced. The two types of knowledge are, however, linked. Our semantic or conceptual knowledge about dogs is built up through various encounters with dogs, experienced both directly and vicariously through hearing or reading about dogs. In the course of these encounters, we develop an abstract notion of ‘dog’, which is a synthesis of the common elements of dog encounters. Conceptual knowledge is likely to be fluid, as we constantly acquire new knowledge and modify past beliefs throughout our lifespan, and there is also the possibility that one individual’s knowledge of ‘dog’ is a little different from another’s. 
If you have been bitten by a dog, ‘ferocious’ may be a central component of your dog concept, but if your dog experiences consist only of interactions with a creature that wants nothing more than to have its tummy tickled, then your beliefs about dogs may be different. There is likely, however, to be a relatively stable core to concepts. If you were to hear of a fish that never entered water, you might find this surprising given that ‘aquatic-living’ is likely to be a core element of your concept of ‘fish’.

In order to talk about dogs or entertain ideas about dogs we have to have knowledge or a concept of dog, and to a reasonable extent one individual’s concept of dog has to be similar to another’s concept of dog if the two individuals are to communicate with one another. If people have difficulty in learning about the things in their world, and forming abstract concepts from a variety of experiences, then this will limit both the thoughts they can have about their world and also what they can communicate. Individuals with learning difficulties (or mental handicaps, see p. 154) are likely to experience such problems.

In the clinical context, the separation of a pre-linguistic and linguistic stage in the model of the communication chain can be justified only if, in reality, we can identify disorders which involve a difficulty with thinking, or with language, but not with both.6 Of course, we shall also find instances where both are impaired, as in many forms of learning difficulties. Because the pre-linguistic stage is a precursor to linguistic encoding, we will also find instances of ‘knock-on’ effects – where thought is incoherent and disorganized, resulting in equally incoherent linguistic encoding of those thoughts. Psychiatric conditions that involve thought disorders, such as schizophrenia, are examples of such disabilities.

Language Encoding

The second step in the chain is that of language encoding. In discussing this stage in the processing of messages, the aspects of language structure and use that were discussed in Chapter 2 – syntax, semantics, phonology/graphology and pragmatics – are essential. Imagine that we are watching two children (A and B) playing, and we have seen A hit B. At the pre-linguistic stage, we have two elements (A and B), an action (hitting) and a particular event linking them together. In coding this idea into a linguistic form, we need to select words that correspond to the elements and the action. To do this, we need to access information in our semantic store and select appropriate words, such as boy, girl, Mary, Nicholas, hit or punch. In retrieving the words, we also access their sound or phonological structure – that hit is made up of an initial consonant /h/, a vowel /ɪ/ and a final consonant /t/. We also need to establish the relations between the units we have selected and so structure them into an accurate sentence structure. Who was the one who was hitting – Mary or Nicholas? Who got hit? Here we need our knowledge of sentence structure to capture the correct relation between the elements. We also need to decide the purpose to which our utterance will be put. For example, would we like to discover the reason for the event and maybe ask a question (‘Why did Mary hit Nicholas?’) or do we want to communicate this event in a statement to another who did not observe it (‘Mary hit Nicholas’)? The decision on the function that the utterance has to serve will affect our choices not only of sentence structure but also of words. When we come to examine language disorders (p. 162), we will see that the different layers of linguistic encoding can be differentially affected by disability. Thus we can identify individuals with problems in syntactic encoding or phonological encoding, and so on.
These disabilities may be developmental – that is, the linguistic knowledge fails to develop; or they may be acquired – the individual previously possessed competence in an area, but this was lost following damage to the cerebral cortex of the brain (p. 150). Although some individuals have specific difficulties in a single area of language encoding, it is more common to find that a number of areas of language structure are impaired, so that, for instance, the patient may have both a phonological and a syntactic disorder.

The process of linguistic decision-making – what function will the utterance have? what sentence structure? what words? what sound structure? – is complex as it stands. But this complexity increases with the realization that these decisions are also linked to variables external to the language system. A few examples will illustrate such interrelationships. An important input to our linguistic decisions is an assessment of what the receiver of the message already knows. In our example, the event of two children fighting, if the recipient of the message also observed the event, it would be redundant to send the message ‘Mary hit Nicholas’ because this information would already be known. It might be appropriate to ask a question such as ‘Why did Mary hit Nicholas?’. This ability to ‘know what the recipient knows’ is a very complex cognitive skill, and one that young children take some time to acquire. But, if the child is to achieve an adult-like level of communicative competence, it is cognitive skills such as these which have to be developed. A current and influential hypothesis of the cognitive impairment which underlies autism (see p. 161) suggests that these children are particularly slow in developing the ability to understand that the content of another’s mind might be different from their own. The ‘theory of mind’ hypothesis suggests that many of the communicative impairments which occur in autism are a consequence of this inability to ‘mind-read’.

The following examples illustrate the importance of our knowledge of social situations in linguistic decision-making. Awareness of such factors as the formality of a situation and the status of the participants is essential. These factors may influence a decision as to whether to speak or not to speak. For example, would you inform a senior professor that his trouser fly was undone? The perceived status of participants and the formality of a situation may also influence choices of words or even of the sound structure of words. For instance, in describing feelings of nausea to a doctor or an employer, most people would feel it more appropriate to select such expressions as ‘to be sick’ or ‘vomit’ than the more informal ‘throw up’. Similarly, the influence of social variables on decisions of sound structure of words can be observed. The dropping of initial /h/ sounds is typical of informal speech and is found in a number of British dialects. Habitual ‘h-droppers’ do not, however, always drop their hs. If placed in a situation which they perceive to be formal, or addressing a listener of perceived high status, the amount of ‘h-dropping’ is likely to decrease. Indeed, women are reported to be more likely to modify their speech than men, although why they are more sensitive to such social variables is less clear.7 One might even observe the phenomenon of hypercorrection, where /h/ starts to appear at the beginning of words where it is not usually found – often words that begin with a vowel, such as idea or envelope. The point here is that linguistic competence is interlinked with many other areas of cognition. Encoding a linguistic message involves a series of decisions regarding language structures and uses, but these decisions are also influenced by external variables such as our social knowledge. There are cognitive disabilities, such as learning disabilities or mental handicap, which impair non-language competences but also have secondary effects on language.
Limited social knowledge is one such example.

Linguistic encoding therefore involves pragmatic, semantic, syntactic and phonological processing, plus a series of external constraints on the encoding process. How, we might speculate, are these levels of language represented within the mind? Which comes first? Does the brain first of all ‘generate’ meanings, in the form of items of vocabulary, then put them into sentences, and then give them phonological shape? Or is a grammatical ‘shape’ first organized, into which vocabulary items are later placed? There are plainly many possibilities, and a great deal of research has taken place to find evidence which supports one or other of these models of language processing. These questions are addressed by the academic discipline of psycholinguistics – a field that bridges psychology and linguistics. The communicative behaviour of speakers is examined for clues as to how the human mind is organizing language. For instance, pausing and hesitation are informative. It is evident that we do not plan our utterances ‘a word at a time’: if this were the case, our ‘mental planning’ would be reflected by the way we would hesitate and melodically shape our utterances. There would be a pause after every word – or, at least, the same amount of pause after every word, with only occasional variations due to changes in our attention, tiredness, and so on. But speech is obviously not like that. We do pause and shape our utterances melodically, although these features do not seem to relate to word-sized units, but to larger units of grammar. In particular, the clause (compare p. 45) has emerged as a unit of speech that is more readily identified than any other as being phonologically ‘shaped’ by such features as pause and intonation. We are much more likely to pause between clauses than within clauses. 
And if we do pause within a clause, then again it is not just a matter of chance where the pause will occur: the likelihood is that the pauses will occur between the main constituents of clause structure (between Subject and Verb, and Verb and Object, for example).

Another technique which gives insights into mental processing of language is the study of speech errors. These can be the errors made by normal speakers or errors made by disordered speakers (though one must always be cautious when applying to normal brains the results of research into abnormal brains). The importance of information from speakers with communication disorders is, first, that they make more errors than normal speakers and so provide the researcher with more data with which to work. Second, certain types of language disorder – for example, aphasia, a language disorder that occurs after brain damage (see p. 165) – may selectively disrupt components of the language processing system. By detailed study of the errors made by an aphasic speaker, therefore, we can construct a picture of how the damaged components actually operate – insights that are not usually attainable from observations of normal speakers. Studies of speech errors also support the idea that the clause is a significant unit in the planning of speech. Certain types of speech errors, or slips of the tongue – those involving a switch around of words or sounds – occur within clauses: they typically do not cross clause boundaries. For instance, the following two examples illustrate, first, a likely tongue-slip, and second, a highly unlikely tongue-slip, happening to the (two-clause) sentence John caught the ball, and Mary laughed:

John baught the call, and Mary laughed

John laught the ball, and Mary baughed.
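The clause-boundary constraint illustrated by these two examples can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, and the initial consonant is approximated from spelling (the letters before the first written vowel) rather than from a phonological transcription. The sketch exchanges the initial consonants of two words and reports whether the exchange stayed within a single clause, which is the condition the speech-error evidence suggests a typical slip will meet.

```python
VOWELS = set("aeiou")  # spelling-based approximation, not a phonological analysis


def split_onset(word):
    """Split a word into its initial consonant letters and the remainder."""
    for index, letter in enumerate(word):
        if letter.lower() in VOWELS:
            return word[:index], word[index:]
    return word, ""


def exchange_onsets(clauses, first, second):
    """Swap the initial consonants of two words.

    `clauses` is a list of clauses, each a list of words; `first` and
    `second` are (clause_index, word_index) pairs. Returns the altered
    clauses and a flag saying whether the exchange stayed within one clause.
    """
    result = [list(clause) for clause in clauses]  # leave the input untouched
    (c1, w1), (c2, w2) = first, second
    onset1, rest1 = split_onset(result[c1][w1])
    onset2, rest2 = split_onset(result[c2][w2])
    result[c1][w1] = onset2 + rest1
    result[c2][w2] = onset1 + rest2
    return result, c1 == c2


sentence = [["John", "caught", "the", "ball"], ["and", "Mary", "laughed"]]

# Exchange between 'caught' and 'ball': both words lie in the first clause.
slip, within_clause = exchange_onsets(sentence, (0, 1), (0, 3))
print(" ".join(slip[0]) + ", " + " ".join(slip[1]), "| within clause:", within_clause)
```

An exchange between words in different clauses, such as `exchange_onsets(sentence, (0, 1), (1, 2))`, returns a flag of `False`, marking it as the kind of slip that is rarely observed.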

Speech errors are just one of the ways in which we can gain information about the way the human mind works, with reference to language. Despite some insights into the complex process of how language operates in the mind, so much is still unknown. Here, as in so many other areas reviewed in this book, there is a strong need for empirical research.8

Motor Programming

By the end of the linguistic encoding stage, a message will be fully specified in terms of its sentence, word and sound structure. The next step in the chain is to convert the linguistically encoded message into a form which can be sent to some external receiver. The actual choice of channel (speech or writing) is likely to have already been made, as the choice of transmission modality influences many of the linguistic encoding choices (compare the social pleasantries with which a letter begins, to those that start a conversation). The stage of motor programming takes the linguistic message and converts it into a set of instructions which can be used to command a motor output system, such as the muscles used in speech or writing. The motor program can be conceptualized as the software program which drives the hardware of the vocal organs and their associated nerves, if the output is to be speech. Motor programs are necessary for highly skilled, voluntary movements such as speech. In the event of loss of the software programs – through brain damage or their failure to develop normally – complex movements are disturbed, even though the muscles that implement the motor programs are not weak or paralysed. Volitional movements are particularly impaired; involuntary movements, which require little programming, are relatively unaffected. Hence patients with a motor programming disorder may have no difficulty in chewing or swallowing normally, because these are involuntary or automatic activities. Similarly, such patients may be able to move their lips and tongue to remove crumbs from the lips or from between the lips and the gums, but when they are required to produce a /w/ sound, the motor programmer fails to instruct the muscles to operate. Fine movements required for speech become distorted into grosser movement – instead of pursing the lips, the patient may pull them apart into an exaggerated smile.

Motor Execution and Feedback

Once the message has been programmed, the motor execution system can implement the plan. The motor system is made up of the motor areas of the brain, and nerves which run from the brain down to muscles. In addition, in order to produce smooth and controlled movement, there is a need for sensory feedback on the location of a muscle or joint, and its degree and rate of movement. Thus the motor system also requires information to be relayed from the periphery (the muscle) back to the centre (the brain) for well co-ordinated movement. This is particularly important for finely controlled movements of speech and writing.

Feedback

Feedback is another concept that stems originally from information theory and which is of major importance in discussing several kinds of communicative pathology. The term technically refers to the way in which a system allows its output to affect its input. A thermostat is a classic example of the way feedback operates: this device monitors the output of a heating system, turning the system off when the temperature reaches a predetermined level, and turning it on again when the temperature falls below a certain level. The amount of heat which the system puts out is ‘fed back’ as information into the system, and the system is thereby ‘told’ whether it is functioning efficiently. In the present model, the system we are dealing with is the human being, and here too output affects input. The sensory feedback we have mentioned above, which tells us about the location and amount of stretch of muscles and joints, is termed ‘kinaesthetic’ feedback. Knowing (at an unconscious level) where our tongues are in our mouths is an important factor in maintaining our clarity of speech. When this information is interfered with, as when we lose sensation following a dental anaesthetic, or obtain novel sensations from a new set of false teeth or other dental appliance, our speech becomes distorted. Similarly, our ability to write also relies on kinaesthetic feedback, along with visual feedback, from our hand movements and the shapes we make on the page.9
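The thermostat example can be made concrete with a short simulation. The following is a minimal sketch, not a model of any real device: the threshold temperatures, the rates of heating and cooling, and the function names are all assumptions chosen for illustration. What it shows is the defining property of feedback, namely that the system's output (the temperature it produces) is read back in and determines what the system does next.

```python
def thermostat_step(temperature, heater_on, low=18.0, high=21.0):
    """Decide the heater state from the temperature that is 'fed back'.

    `low` and `high` are hypothetical switching levels in degrees Celsius.
    """
    if temperature >= high:   # predetermined level reached: switch off
        return False
    if temperature < low:     # fallen below the lower level: switch on
        return True
    return heater_on          # in between: keep doing what we were doing


def simulate(start_temp=15.0, steps=20, heat_gain=1.0, heat_loss=0.5):
    """Run the loop: output (temperature) becomes the next step's input."""
    temperature, heater_on = start_temp, True
    history = []
    for _ in range(steps):
        heater_on = thermostat_step(temperature, heater_on)
        temperature += heat_gain if heater_on else -heat_loss
        history.append((temperature, heater_on))
    return history


for temperature, heater_on in simulate():
    print(f"{temperature:5.1f}  heater {'on' if heater_on else 'off'}")
```

With these assumed values the temperature climbs, the heater switches off once the upper level is reached, and it switches on again once the temperature drops below the lower level, so the room stays within a narrow band without any outside intervention.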

The most noticeable way in which we monitor our speech is, however, via the ear: while we speak, we are continually monitoring what we say. At a partly conscious and partly unconscious level, we are aware of what we are saying, and how we are saying it, and can compare this with what we intended to say. When there is a mismatch, we introduce corrections into our speech – correct any slips of the tongue, change the direction of the sentence (perhaps using such phrases as I mean to say, or what I mean is…), and so on. These are examples of conscious self-correction. But at an unconscious level, feedback is continuously in operation, as can be demonstrated by experimentally interfering with the normal course of events. This can be done by delaying the time it takes for the sound of speakers’ own voices to be ‘fed back’ to their own ears. The process is called delayed auditory feedback. What happens is that, as speakers talk, their voices are stored for a fraction of a second on a recorder, and then played back to their ears through headphones, sufficiently loudly that it drowns out, or masks, what they are currently saying. If the delay is of the order of one-fifth to one-tenth of a second, the effect can be remarkable: speakers may begin to slur their voice and stutter in quite dramatic ways, and after a while may become so unconfident that they stop speaking altogether. Evidently, the delay between the time it takes for the brain to initiate the right sequence of sounds and to interpret the feedback has upset a very delicate balance of operation. The experiment demonstrates our reliance on auditory feedback in normal everyday speech.
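The delayed auditory feedback procedure amounts to passing the speaker's voice through a delay line before it reaches the ear. The sketch below illustrates the idea with a simple buffer; it is an illustration only, and the sample rate of 16,000 samples per second, the function names and the use of letters to stand for successive stretches of speech are all assumptions rather than details of any actual apparatus.

```python
from collections import deque


def delay_in_samples(delay_seconds, sample_rate=16000):
    """Convert a delay in seconds into a whole number of samples.

    The sample rate is an assumed value for illustration.
    """
    return int(round(delay_seconds * sample_rate))


def delayed_feedback(spoken, delay_steps, silence="-"):
    """Return what speakers hear when their own output is delayed.

    Each unit of speech is stored in a buffer and released only after
    `delay_steps` further units have been produced.
    """
    buffer = deque([silence] * delay_steps)
    heard = []
    for unit in spoken:
        buffer.append(unit)             # store what is being said now
        heard.append(buffer.popleft())  # play back what was said earlier
    return heard


spoken = list("hello")
print("spoken:", spoken)
print("heard: ", delayed_feedback(spoken, delay_steps=2))
print("samples for a 0.2 s delay:", delay_in_samples(0.2))
```

At every moment the speaker is hearing something other than what is currently being said, and it is this persistent mismatch between intended and perceived output that disrupts the monitoring process described above.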

One theory regarding the cause of stuttering behaviour (p. 193) suggests that some stutterers have inherent delays in their auditory feedback loop. A form of treatment for stuttering, which stems from this theory, is to mask the sound of the stutterer’s own voice with a burst of white noise. The masking noise is triggered by a microphone attached to the stutterer’s neck. When this picks up the stutterer’s speech, the white noise is activated, so drowning out the sound of the voice as they speak. This eliminates all auditory feedback, whether or not it is delayed. As soon as the stutterer stops speaking, the masking stops, enabling the person to listen to the contributions of other participants in the conversation. This rather drastic treatment is, however, successful with only a small proportion of stutterers, which suggests that not all suffer from a problem in delayed auditory feedback, and that this disorder may stem from a variety of sources.
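The logic of the voice-triggered masking device can be sketched as follows. This is a simplified illustration under stated assumptions: the threshold value, the function name and the representation of the conversation as a sequence of short time frames are hypothetical, and a real device would work on a continuous audio signal. The sketch shows only the switching behaviour described above: masking whenever the neck microphone detects the speaker's own voice, and no masking otherwise.

```python
def perceived_sound(frames, threshold=0.1):
    """Work out what the wearer hears in each time frame.

    `frames` is a list of (own_voice_level, other_speech) pairs, where
    `own_voice_level` is the level picked up by the neck microphone and
    `other_speech` is whatever other participants are saying (or None).
    The threshold is an assumed trigger level.
    """
    heard = []
    for own_voice_level, other_speech in frames:
        if own_voice_level > threshold:
            heard.append("white noise")   # own voice detected: mask it
        else:
            heard.append(other_speech)    # silent: others can be heard
    return heard


conversation = [
    (0.6, None),           # wearer speaking
    (0.5, None),           # wearer still speaking
    (0.0, "How are you?"), # wearer silent, partner speaks
    (0.7, None),           # wearer replies
]
print(perceived_sound(conversation))
```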

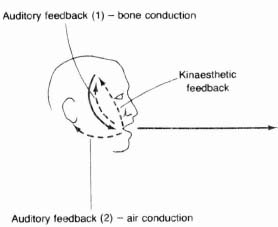

A second form of auditory feedback which operates in speech arises from the fact that we hear our own voices in two different ways. We hear ourselves and other speakers through vibrations in the air (air conduction), but we can also detect vibrations through the bones and tissues of the skull (bone conduction) (Figure 3.3). In fact we hear most of our own voice through this means, and not through the air: this is why we are usually surprised when we hear our voices on a tape-recorder for the first time – we may not recognize ourselves, or we may dislike this ‘squeaky’ voice. As bone vibrates at a slower rate than air particles, our perception of the pitch of our voice may differ from that of our listeners, who hear us very largely through air conduction.

Figure 3.3. Feedback in speech
