7.1 Case Studies of Natural Selection in Human Populations

The following case studies are not meant to represent everything that we know about natural selection in human populations, but instead are chosen to provide illustrative examples of different types of selection, different methods of analysis, and different lessons regarding natural selection.

7.1.1 Hemoglobin S and Malaria

The story of natural selection and the hemoglobin molecule is a classic in anthropology, providing an excellent example of balancing selection, rapid genetic change, and the effect of cultural and ecological influences on selection. Hemoglobin is a protein of the blood that transports oxygen to tissues throughout the body. The hemoglobin molecule of adults is made up of four protein chains. Two of these are identical and are called the alpha chains. The other two protein chains are the beta chains, and are identical to each other as well. The alpha chains are 141 amino acids in length, and the gene is on chromosome 16. The beta chains are 146 amino acids in length, and the gene is on chromosome 11 (Mielke et al. 2011). Our story here deals with the beta chain.

The normal form of the beta hemoglobin gene is known as the A allele, and people with the AA genotype have hemoglobin that functions normally for transporting oxygen. A number of mutant alleles have been discovered, including the S, C, and E alleles, among other rarer forms (Livingstone 1967). Our focus here is on the S allele, also known as the sickle cell allele. This allele is due to a single mutation that replaces the sixth amino acid of the beta chain, glutamic acid, with valine. This small genetic change has a noticeable effect in individuals who carry two copies of the mutant allele; that is, they have the genotype SS. Those with the SS genotype have the genetic disease sickle cell anemia. In these individuals, low levels of oxygen can cause the red blood cells to become distorted, changing from their normal donut shape to the shape of a sickle (hence the name sickle cell). The deformed blood cells do not carry oxygen effectively, causing serious problems throughout the body’s tissues and organs, and typically leading to death before adulthood without substantial medical intervention. Those who have the heterozygous genotype AS typically do not show this effect and are known as carriers.

In terms of models of natural selection, we can assign those with the AA genotype the highest relative fitness (w = 1). In an environment without malaria, heterozygotes have slightly lower fitness, and those with sickle cell anemia (SS) have the lowest fitness. Given this information, the models in Chapter 6 can be used to make some general predictions about the evolution and variation of the hemoglobin alleles (here, we focus on the A and S alleles and ignore other alleles to make the points more clearly). Because the S allele is harmful in the homozygous state, we would expect selection against it. As a harmful mutant, S should have a very low frequency, as predicted under mutation–selection balance. Data from many human populations fit this prediction. For example, the allele frequencies in a number of populations throughout the world are A = 1 and S = 0. In other populations, S is not zero but is very low, as in Portugal (S = 0.0005), Libya (S = 0.002), and the Bantu of South Africa (S = 0.0006) (Roychoudhury and Nei 1988).

Such frequencies are consistent with a model of selection against an allele, and would simply be a good example of such selection if not for the fact that a number of human populations do not fit this general model. Some populations in the world have frequencies of the S allele that range from 0.01 to over 0.20 (Roychoudhury and Nei 1988). These frequencies are much higher than expected under a model of selection against the S allele. How could the frequency of a harmful allele be higher than expected? There are two possible answers. One is genetic drift: by chance, the frequency of a harmful allele can drift upward, given certain combinations of population size and fitness values. The other explanation is balancing selection. When there is selection for the heterozygote, individuals who carry one copy of an allele have higher fitness than those who carry two copies or no copies.

7.1.1.1 Balancing Selection and Hemoglobin S

It turns out that the higher frequencies of the S allele in a number of human populations can be explained by balancing selection. The clue is the geographic distribution of populations with higher (>0.01) frequencies of the S allele. Moderate to high frequencies of S are typically found in human populations in parts of west Africa and South Africa, as well as parts of the Middle East and India, among other regions. Closer inspection shows that the pattern is not a simple matter of geographic location (some African populations have high values, for example, while others have low values) but instead tracks the distribution of epidemic malaria. Populations that have a history of malaria epidemics tend to have higher frequencies of the S allele.

Malaria is an infectious disease caused by a parasite, and is one of the most harmful diseases recorded in human history. Current estimates suggest that between 300 and 500 million cases of malaria occur each year, with between 1 million and 3 million deaths each year (Sachs and Malaney 2002). There are four different forms of malaria, each caused by a different parasite. The form of malaria relevant here is known as falciparum malaria, which is caused by the parasite Plasmodium falciparum and is the most fatal of the malarias. You cannot get malaria from someone else; the disease is transmitted by mosquitoes, a point that will be important shortly.

Your genotype for beta hemoglobin affects your susceptibility to falciparum malaria. Having an S allele renders red blood cells inhospitable to the malaria parasite, thus protecting the individual from its effects. If you have the normal hemoglobin genotype AA, you are more susceptible to malaria than someone who has an S allele. However, if you have two S alleles (genotype SS), you have sickle cell anemia, which is frequently fatal. On the other hand, if you have the heterozygous genotype AS, you do not have sickle cell anemia, and you are less likely to contract malaria. Thus, in a malarial environment, the heterozygote AS has the highest fitness followed by the AA genotype, and the SS genotype still has the lowest fitness.

We can see these fitness differences with an example provided by Bodmer and Cavalli-Sforza (1976: 319) based on data from the Yoruba of Nigeria, a population in a malarial environment. The observed genotype numbers in adults were

$$\text{AA} = 9365, \qquad \text{AS} = 2993, \qquad \text{SS} = 29$$

(for a total of 12,387 adults). We use the allele counting method from Chapter 2 to see that there are 21,723 A alleles and 3,051 S alleles, for a total of 24,774 alleles. The allele frequencies are

$$p = \frac{21{,}723}{24{,}774} \approx 0.877 \qquad \text{and} \qquad q = \frac{3{,}051}{24{,}774} \approx 0.123.$$

If this population were at Hardy–Weinberg equilibrium (and hence no selection), the expected genotype numbers would be computed by multiplying the expected Hardy–Weinberg proportions by the total number of adults (12,387). Using the rounded frequencies p = 0.877 and q = 0.123, this gives

$$\begin{aligned}
\text{AA}: &\quad 12{,}387 \times p^2 = 12{,}387 \times 0.769129 \approx 9527.2 \\
\text{AS}: &\quad 12{,}387 \times 2pq = 12{,}387 \times 0.215742 \approx 2672.4 \\
\text{SS}: &\quad 12{,}387 \times q^2 = 12{,}387 \times 0.015129 \approx 187.4
\end{aligned}$$

Comparing the expected numbers to the observed numbers, we see that there are fewer adults with genotype AA than expected, more with genotype AS than expected, and far fewer with genotype SS than expected. This population is clearly not at Hardy–Weinberg equilibrium. Because we know the biochemical relationship of hemoglobin alleles with malaria, and because there are more heterozygotes than expected, the evidence fits balancing selection.
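One standard way to back up the statement that the Yoruba sample departs from Hardy–Weinberg equilibrium is a chi-square goodness-of-fit test. The Python sketch below is an illustration added here, not part of Bodmer and Cavalli-Sforza's analysis. The function name `hardy_weinberg_chi_square` is hypothetical. The inputs are the observed Yoruba counts (AA = 9365, AS = 2993, SS = 29), which reproduce the 21,723 A and 3,051 S alleles given above. The allele frequencies are left unrounded, so the expected numbers differ slightly from hand calculations that use p = 0.877.

```python
def hardy_weinberg_chi_square(n_aa, n_as, n_ss):
    """Chi-square goodness-of-fit of genotype counts to Hardy-Weinberg
    proportions at a two-allele locus (alleles A and S)."""
    n = n_aa + n_as + n_ss
    # Allele counting: AA carries two A alleles, AS carries one.
    p = (2 * n_aa + n_as) / (2 * n)
    q = 1.0 - p
    observed = {"AA": n_aa, "AS": n_as, "SS": n_ss}
    expected = {"AA": n * p * p, "AS": n * 2 * p * q, "SS": n * q * q}
    # Sum of (observed - expected)^2 / expected over the three genotypes.
    chi2 = sum((observed[g] - expected[g]) ** 2 / expected[g] for g in observed)
    return chi2, expected


# Observed Yoruba adults (Bodmer and Cavalli-Sforza 1976)
chi2, expected = hardy_weinberg_chi_square(9365, 2993, 29)
for genotype, count in expected.items():
    print(f"expected {genotype}: {count:.1f}")
# 1 df: 3 genotype classes - 1 - 1 estimated allele frequency
print(f"chi-square = {chi2:.1f}; 5% critical value for 1 df = 3.84")
```

With three genotype classes and one allele frequency estimated from the data, the test has 1 degree of freedom. The statistic comes out at roughly 175, far above the 5% critical value of 3.84. Most of it comes from the shortfall of SS adults.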

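Bodmer and Cavalli-Sforza (1976) derived the absolute fitness values W for each genotype by taking the ratio of observed to expected numbers. This gives absolute fitness values of

$$W_{AA} = \frac{9365}{9527.2} \approx 0.983, \qquad W_{AS} = \frac{2993}{2672.4} \approx 1.120, \qquad W_{SS} = \frac{29}{187.4} \approx 0.155$$

We now convert these absolute fitness values into the relative fitness values w used in our model of balancing selection from Chapter 6 by dividing each one by the highest fitness value. Under balancing selection, the highest value is the absolute fitness of the heterozygote, so we divide each absolute fitness value by 1.120, giving

$$w_{AA} = \frac{0.983}{1.120} \approx 0.878, \qquad w_{AS} = \frac{1.120}{1.120} = 1.000, \qquad w_{SS} = \frac{0.155}{1.120} \approx 0.138$$

These values mean that, starting from equal numbers of each genotype at birth, for every 100 people with genotype AS who survive to adulthood, roughly 88 with genotype AA and 14 with genotype SS survive.

The selection coefficients for the homozygotes are obtained by subtracting the relative fitness of each from 1. Writing s for the selection coefficient against AA and t for the selection coefficient against SS gives

$$s = 1 - w_{AA} = 1 - 0.878 = 0.122, \qquad t = 1 - w_{SS} = 1 - 0.138 = 0.862$$

We can now derive the expected equilibrium frequencies using equations (6.21) and (6.22) from Chapter 6 as

$$\hat{p} = \frac{t}{s+t} = \frac{0.862}{0.984} \approx 0.876, \qquad \hat{q} = \frac{s}{s+t} = \frac{0.122}{0.984} \approx 0.124$$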
These values are almost identical with the observed allele frequencies, suggesting that this population has reached an equilibrium under balancing selection.
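The full chain of calculations for the Yoruba example can be reproduced in a few lines: allele counting, Hardy–Weinberg expectations, observed/expected fitness ratios, relative fitness, selection coefficients, and equilibrium frequencies. This is a minimal Python sketch. The function name `balancing_selection_from_counts` is hypothetical. By default it rounds the allele frequencies to three decimals, which appears to be how the worked numbers (such as the divisor 1.120) were obtained. It uses the heterozygote-advantage equilibrium p̂ = t/(s + t), q̂ = s/(s + t) for fitnesses 1 − s, 1, and 1 − t.

```python
def balancing_selection_from_counts(n_aa, n_as, n_ss, decimals=3):
    """Estimate fitnesses from one generation of genotype counts and
    return equilibrium allele frequencies under heterozygote advantage."""
    n = n_aa + n_as + n_ss
    # Allele counting: frequency of A (p), optionally rounded.
    p = (2 * n_aa + n_as) / (2 * n)
    if decimals is not None:
        p = round(p, decimals)
    q = 1.0 - p
    observed = {"AA": n_aa, "AS": n_as, "SS": n_ss}
    expected = {"AA": n * p * p, "AS": n * 2 * p * q, "SS": n * q * q}
    # Absolute fitness: ratio of observed to Hardy-Weinberg expected numbers.
    w_abs = {g: observed[g] / expected[g] for g in observed}
    w_max = max(w_abs.values())
    # This model only applies when the heterozygote is the fittest genotype.
    if w_max != w_abs["AS"]:
        raise ValueError("heterozygote is not the fittest genotype")
    # Relative fitness: divide by the largest absolute fitness.
    w_rel = {g: w / w_max for g, w in w_abs.items()}
    # Selection coefficients against AA (s) and SS (t).
    s = 1.0 - w_rel["AA"]
    t = 1.0 - w_rel["SS"]
    # Equilibrium allele frequencies under heterozygote advantage.
    p_hat = t / (s + t)
    q_hat = s / (s + t)
    return w_abs, w_rel, s, t, p_hat, q_hat


w_abs, w_rel, s, t, p_hat, q_hat = balancing_selection_from_counts(9365, 2993, 29)
for g in ("AA", "AS", "SS"):
    print(f"{g}: absolute = {w_abs[g]:.3f}, relative = {w_rel[g]:.3f}")
print(f"s = {s:.3f}, t = {t:.3f}, p_hat = {p_hat:.3f}, q_hat = {q_hat:.3f}")
```

Passing `decimals=None` skips the rounding. In that case w_AA comes out near 0.879 instead of 0.878, and q̂ comes out near 0.123 instead of 0.124. These small differences do not change the conclusion.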

7.1.1.2 Culture Change and the Evolution of Hemoglobin S

The case of hemoglobin S provides a classic example of balancing selection (perhaps the classic example). It also provides a classic example of how culture affects genetic evolution in human populations. In this case, a scenario has been proposed in which the frequency of S increased in parts of Africa because human populations began practicing agriculture (Livingstone 1958; Bodmer and Cavalli-Sforza 1976). Before the introduction of agriculture, human populations living in heavily forested areas of western Africa would have experienced little problem with malaria because the mosquito species that spread malaria do not thrive in such dense forest. Because there would be no selective advantage for heterozygotes, any S mutants would be selected against, and the frequency of S would be very low, as it is today in populations where malaria is not a problem.

The situation changed when horticulture (simple agriculture using hand tools) spread into the area. Forests were cleared for planting using slash-and-burn horticulture. The clearing of trees, together with changes to soil chemistry that reduced water absorption, led to an increase in sunlit areas and pools of stagnant water, both of which are ideal conditions for mosquito breeding. As the mosquito population grew, the mosquitoes fed on human blood, and repeated bites allowed transmission of the malaria parasite. As malaria increased in humans, the balance between mutation and selection changed. Now heterozygotes had the highest fitness because they had resistance to malaria but did not suffer from sickle cell anemia. As the heterozygotes were selected for, the frequency of S would have increased. However, as the frequency of S (= q) increases, so does the frequency of SS homozygotes (= q²), who are selected against, causing the increase in S to slow down and then stop at an equilibrium.

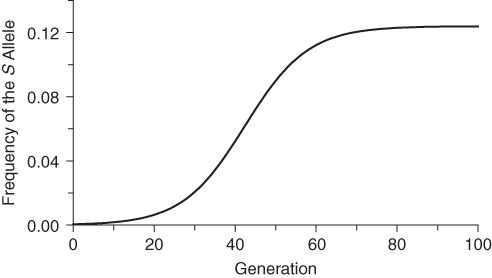

The scenario described above was modeled as shown in Figure 7.1. A single mutation (S) is introduced into a population of 1000 people, giving an initial allele frequency for S of 0.0005 (1 in 2000 alleles, because each person has two alleles). The fitness values are those given above for the example from Bodmer and Cavalli-Sforza: wAA = 0.878, wAS = 1.000, and wSS = 0.138. As shown in Figure 7.1, there is little initial change in S, as there are few heterozygotes. As the frequency of S continues to rise, a point is reached after about 20 generations where there are enough heterozygotes for the frequency of S to increase rapidly. By around 60 generations, the higher frequency of S means that there is an increasing number of deaths due to sickle cell anemia, and the curve levels off quickly to reach an equilibrium. There is very little change after 60–70 generations. Assuming an average of 20–25 years per generation, 60–70 generations corresponds to between 1200 and 1750 years, which is a very high rate of evolutionary change. Although this model is somewhat simplistic, the results would be broadly similar even if factors such as recurrent mutation and fluctuations due to genetic drift were taken into account.

Figure 7.1 Simulation of the evolution of hemoglobin S in a malarial environment. The initial allele frequency of S was set at 0.0005 using fitness values of wAA = 0.878, wAS = 1.000, and wSS = 0.138 (see text).
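A deterministic recursion is enough to reproduce the general shape of Figure 7.1. The Python sketch below is illustrative, and the function names are hypothetical. It may not match exactly how the published figure was generated. Each generation it applies viability selection to Hardy–Weinberg proportions, starting from q = 0.0005 with wAA = 0.878, wAS = 1.000, and wSS = 0.138. It ignores recurrent mutation and genetic drift.

```python
def next_q(q, w_aa, w_as, w_ss):
    """One generation of viability selection at a two-allele locus,
    assuming random mating (Hardy-Weinberg proportions at birth)."""
    p = 1.0 - q
    w_bar = p * p * w_aa + 2 * p * q * w_as + q * q * w_ss  # mean fitness
    # S among survivors: half of the AS contribution plus all of the SS contribution.
    return (p * q * w_as + q * q * w_ss) / w_bar


def simulate(q0, w_aa, w_as, w_ss, generations):
    """Return the list of S frequencies for generations 0..generations."""
    freqs = [q0]
    for _ in range(generations):
        freqs.append(next_q(freqs[-1], w_aa, w_as, w_ss))
    return freqs


W_AA, W_AS, W_SS = 0.878, 1.000, 0.138
traj = simulate(0.0005, W_AA, W_AS, W_SS, 200)
s, t = 1 - W_AA, 1 - W_SS
print(f"predicted equilibrium q = {s / (s + t):.3f}")
for gen in (0, 20, 40, 60, 70, 100):
    print(f"generation {gen:3d}: q = {traj[gen]:.4f}")
```

The printed frequencies should show the slow start, the rapid rise, and the plateau near q̂ = s/(s + t) ≈ 0.124 described in the text.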

More recent research on the molecular genetics of the sickle cell mutation suggests that it arose and spread through selection and gene flow more than once, perhaps five times in different parts of Africa and Asia where malaria was common. We also need to look at the age of different mutations; the coalescent models described in Chapter 5 can be modified to estimate the time of origin of a new mutation. The origin of the sickle cell mutations dates to roughly 2000 to 3000 years ago (Mielke et al. 2011). Such research, combined with what we know about fitness differences in hemoglobin genotypes, shows that natural selection has taken place very quickly and in very recent (evolutionary) history. The idea that we stopped evolving when we reached the status of anatomically modern humans 200,000 years ago clearly does not apply to hemoglobin variants.

Another important lesson here is how cultural behavior (in this case, agriculture) changed the fitness values. What was most fit in one environment is not necessarily the most fit in another environment, something to remember given the rapidity with which we humans change our relationship to the environment. In the case of hemoglobin S, cultural change did not eliminate genetic change, but it did change its nature and trajectory, from selection against a mutation to a case of balancing selection. The situation could continue to change if efforts to eradicate malaria in tropical environments ultimately prove successful. In that case, the selective advantage for the heterozygote would disappear, and selection against the S allele would ultimately reduce its frequency to near zero. However, as shown in Chapter 6, this could take a long time. For example, if we started with the equilibrium frequency of 0.124 for the S allele under balancing selection and changed the fitness values to wAA = 1.000, wAS = 1.000, and wSS = 0.138, the frequency of S would still be 0.02 after 50 generations and 0.01 after 100 generations. Previous genetic variation does not disappear immediately, even when culture changes the selective balance.
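The slow decline in the eradication scenario can be checked with the same kind of recursion. This Python sketch is illustrative, and the function name is hypothetical. It starts at q = 0.124 and uses the hypothetical post-eradication fitness values from the text (wAA = wAS = 1.000, wSS = 0.138).

```python
def next_q(q, w_aa, w_as, w_ss):
    """One generation of viability selection under random mating."""
    p = 1.0 - q
    w_bar = p * p * w_aa + 2 * p * q * w_as + q * q * w_ss  # mean fitness
    return (p * q * w_as + q * q * w_ss) / w_bar


# Hypothetical scenario from the text: malaria eradicated, so AA and AS are
# equally fit and only SS (sickle cell anemia) is selected against.
q = 0.124
history = {0: q}
for gen in range(1, 101):
    q = next_q(q, 1.000, 1.000, 0.138)
    history[gen] = q

for gen in (0, 50, 100):
    print(f"generation {gen:3d}: q = {history[gen]:.4f}")
```

The values at generations 50 and 100 should come out close to the 0.02 and 0.01 quoted above. The decline slows because, once S is rare, almost all S alleles sit in healthy heterozygotes and escape selection. In this regime 1/q rises by only about t ≈ 0.86 per generation, so q falls roughly as 1/(1/q₀ + t·n).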

7.1.2 The Duffy Blood Group and Malaria

Given the intense effect of malaria on human mortality, it is not surprising that a number of genetic traits show evidence of natural selection because of malaria, including other hemoglobin alleles, genetic diseases known as thalassemias, and the enzyme glucose-6-phosphate dehydrogenase (G6PD). One such trait is the Duffy blood group, defined by the presence of different antigens on the surface of red blood cells. The gene for the Duffy blood group is found on chromosome 1 and has three codominant alleles: Fya, which codes for the a antigen; Fyb, which codes for the b antigen; and Fy0, which codes for the absence of any Duffy antigen. The Fy0 allele, also known as the Duffy negative allele, is of particular interest here. The Duffy antigen serves as a receptor that certain malaria parasites use to enter red blood cells. Individuals who are homozygous for the Duffy negative allele cannot be infected by the parasite Plasmodium vivax, which causes vivax malaria (Chaudhuri et al. 1993). In populations that have experienced vivax malaria, we would therefore expect selection for the Duffy negative allele, ultimately leading to fixation of this allele.

The Duffy negative allele is found at highest frequencies in central and southern Africa, reaching a value of 1.0 (100%) in a number of populations. It is also found at moderate to high frequencies in northern Africa and the Middle East, at low frequencies in parts of India, and at or close to zero in native populations throughout the rest of the world (Roychoudhury and Nei 1988). This is the most extreme variation possible in a species, where an allele ranges from 0 to 1 in different populations, and such a range in values typically indicates selection across different environments. Because those homozygous for the Duffy negative allele cannot be infected by vivax malaria, we might expect a close relationship between the prevalence of vivax malaria and the frequency of the Duffy negative allele; that is, the longer vivax malaria has been around, the higher the frequency of the advantageous Duffy negative allele.

The actual geographic correspondence is almost exactly the opposite of what we would initially expect from a model of selection for an advantageous allele. Populations that have very high (or even fixed) frequencies of the Duffy negative allele tend to have little, if any, vivax malaria (Livingstone 1984). This might seem paradoxical at first, but keep in mind that when we survey genetic variation in the present day, we are seeing the net effect of a variety of factors in the past, which means that we need to consider time depth. For example, one possibility is that all of the selection for the Duffy negative allele took place in the past. Under this model, in populations experiencing vivax malaria, selection would favor those homozygous for the Duffy negative allele. Over time, the frequency of Duffy negative would increase, ultimately reaching a level at or near 100%. As this happened, however, more and more people would be immune to vivax malaria, and at some point the disease could no longer persist because too few susceptible individuals remained to sustain its spread. At the end of this selection process, the human populations would have very high (or fixed) frequencies of the Duffy negative allele and there would be no more vivax malaria in the region, which is the situation we see today. An obvious lesson here is that we can see different relationships with selection depending on when we are observing the “present.” Are we seeing the start, the middle, or the end?

Further support for natural selection for the Duffy negative allele has come from molecular studies that have examined DNA sequences at and near the location of the Duffy blood group gene. Under natural selection, we expect that DNA variation should be reduced for the gene under selection (because other alleles are eliminated). Because of genetic linkage, where neighboring sections of DNA tend to be inherited together, we should also see reduced variation in DNA sequences near the gene, even if these neighboring sequences represent neutral genetic variation. Under natural selection, these neutral sequences are swept along in a process known as a selective sweep. Our ability to sequence sections of DNA allows us to compare observed levels of variation with those expected under a selective sweep and with those expected under neutral evolution (no selection). For the Duffy blood group and neighboring DNA sequences, the evidence shows reduced variation for those with the Duffy negative allele, confirming that selection has taken place (Hamblin and Di Rienzo 2000; Hamblin et al. 2002).

However, does this mean that the selection initially resulted from adaptation to vivax malaria? Not necessarily. Livingstone (1984) presents another hypothesis that can also explain the negative correlation between Duffy negative allele frequency and prevalence of vivax malaria. He suggests that there was already a high frequency of the Duffy negative allele in some populations, perhaps reflecting selection in response to some other disease. Consequently, these populations were already resistant to vivax malaria when it spread into Africa, and because almost everyone was immune, the disease never took hold. This alternative remains a possibility, although coalescent estimates of the date of the initial Duffy negative mutation suggest that it arose fairly recently, at about the same time as the origin of agriculture and the spread of malaria (Seixas et al. 2002). If so, then vivax malaria, and not some other disease, was responsible for fixation of the Duffy negative allele in Africa.

7.1.3 The CCR5-Δ32 Allele and Disease Resistance

The case study of the Duffy blood group raised the interesting possibility that current adaptive value may not reflect past selection. In the case of the Duffy blood group, the current adaptive value of the Duffy negative allele is that it protects against infection by the vivax malaria parasite. However, as pointed out by Livingstone (1984), that does not mean that this allele rose in frequency as an adaptation to vivax malaria in the past. As noted above, the current evidence does suggest that vivax malaria was initially responsible for selection for the Duffy negative allele, but this correspondence between initial and current selection might not apply to other genes or traits. Evolutionary biologists have long recognized this potential mismatch when trying to explain the origin of an evolutionary trait. For example, the fact that birds use their wings to fly does not necessarily mean that wings first evolved for flight. Instead, they may have evolved for some other reason, such as thermoregulation or to reduce running speed, and only later were co-opted for flight. As another example, consider upright walking in human evolution. Our ability to walk on two legs allows us to carry tools and weapons, but this does not mean that upright walking was first selected for because of this ability. Some other factor may have been responsible at first, and only later did the ability to carry tools become significant.

On a microevolutionary level, we can see the same thing when looking at variation of the CCR5-Δ32 mutation. The CCR5 gene (short for C–C chemokine receptor type 5) is located on chromosome 3. This gene codes for the CCR5 protein, which functions in resistance to certain infectious diseases. A mutation of the CCR5 gene results in the deletion of a 32-bp section of the DNA sequence of CCR5 and is known as the CCR5-Δ32 (delta 32) mutation. The Δ32 mutation has a frequency of 0–14% in European populations but is absent in the rest of the world (Stephens et al. 1998). Statistical analysis of DNA sequences near this locus provides strong support that the distribution of this allele has been shaped by natural selection (Bamshad et al. 2002).

As noted in Chapter 4, what makes the CCR5-Δ32 allele interesting is its link to susceptibility to HIV, the virus that causes AIDS. Heterozygotes with one Δ32 allele show partial resistance to HIV, and homozygotes show almost complete resistance to AIDS (Galvani and Slatkin 2003). However, AIDS has been around for only a short time in human history and therefore could not have been responsible for the initial elevation of this mutant allele in some European populations. Instead, the higher frequencies of CCR5-Δ32 most likely arose because of selection related to some other disease. What disease could have been responsible?

Answering this question depends in part on when the mutation first appeared in Europe. One coalescent analysis suggested a date of roughly 700 years ago (Stephens et al. 1998), which places the origin around the year 1300. One possibility that has been suggested is the pandemic of bubonic plague known as the black death that ravaged Europe from 1346 to 1352. However, Galvani and Slatkin (2003) modeled natural selection due to the black death and found that even if the mutant allele were dominant, an epidemic of bubonic plague would not have been strong enough to generate current levels of CCR5-Δ32 in Europe. The period of potential selection due to bubonic plague was too short to result in frequencies much above 0.01. On the other hand, they found that models based on continuing epidemics of smallpox, another major historical disease, could account for current levels of CCR5-Δ32. Under a model of a dominant resistance allele, selection due to smallpox could have elevated the frequency of CCR5-Δ32 to about 0.10 in 680 years. Assuming incomplete dominance extends the amount of time slightly but still fits a relatively recent origin of the mutant allele.

Further insight into the evolution of the CCR5-Δ32 allele comes from analysis of ancient DNA from human skeletal remains. Hummel et al. (2005) extracted DNA sequences from 14 skeletons from a mass grave of black death victims in Germany in 1350 and found that the allele frequency of CCR5-Δ32 was not significantly different from that of a control group of famine victims from Germany in 1316 that did not have the plague (allele frequency = 0.125). If individuals with CCR5-Δ32 were less likely to be infected with bubonic plague, then the allele frequency of CCR5-Δ32 in the black death mass grave should be significantly lower than in the control group, which is not the case.

Hummel et al. (2005) also found evidence for the CCR5-Δ32 allele in 4 of 17 Bronze Age skeletons from Germany dating back 2900 years. These results indicate that the mutant allele was already common in Europe over 2000 years before the black death. They conclude that bubonic plague was unlikely to have been a major factor in the evolution of CCR5-Δ32 and that smallpox is a more likely causal factor. It is possible, of course, that other infectious diseases also contributed to changes in CCR5-Δ32 over time. In any event, this example shows again that adaptive relationships we see today (in this case, AIDS resistance) cannot simply be assumed to explain the origin and evolution of a mutant allele.

7.1.4 Lactase Persistence and the Evolution of Human Diet

The case studies presented thus far have focused on disease. Adaptation to disease through natural selection makes sense as disease directly affects one’s probability of survival. As a change of pace, however, let us consider a different sort of selection: in this case, evidence of adaptation to changing diet. As with all mammalian species, human infants are nourished through breastfeeding. (The fact that in recent historical times some humans have used infant formula as an artificial substitute for mother’s milk does not negate the fact that we have evolved, as have all mammalian species, to breastfeed.) Infant mammals produce the enzyme lactase, which allows lactose (milk sugar) to be broken down and digested. The typical pattern in mammals is for lactase production to shut down after the infant is weaned. After this, a mammal can no longer easily digest lactose.

Many humans today are lactose-intolerant, which means that they cannot produce the lactase enzyme after about 5 years of age. The physical effects of lactose intolerance vary but can include flatulence, cramps, bloating, and diarrhea. The interesting fact of human variation is that although many people are lactose-intolerant, others have no trouble digesting lactose because they continue to produce the lactase enzyme throughout their lifetimes. The gene that controls lactase activity is on chromosome 2. Apart from some minor mutations that are found in low frequencies, there are two lactase activity alleles: LCT*R, which is the lactase restriction allele that codes for a shutoff of lactase production after weaning; and LCT*P, which is the lactase persistence allele that codes for continued lactase production. The lactase persistence allele is dominant, so individuals with one persistence allele (genotype LCT*P/LCT*R) or two persistence alleles (genotype LCT*P/LCT*P) can more easily digest lactose. The recessive homozygotes (LCT*R/LCT*R) are lactose-intolerant (Mielke et al. 2011).

Lactase persistence has an interesting geographic distribution. It is highest in northern Europe, and moderate in southern Europe and the Middle East. On average, lactase persistence is very low in African and Asian populations, although some African populations, such as the Fulani and the Tutsi, have moderate to high frequencies (Leonard 2000; Tishkoff et al. 2007). Variation in Africa is particularly revealing because some populations are found with high frequencies of lactase persistence, and some are found with very low levels (and thus have a high prevalence of people who are lactose-intolerant).

The critical factor that explains global variation in lactase persistence is diet. Populations that have a history of dairy farming tend to have higher frequencies of the lactase persistence allele. Natural selection has been proposed as an explanation: the lactase persistence allele was selected for in dairying populations because of the nutritional advantage among those who were able to digest milk (higher levels of fats, carbohydrates, and proteins, in addition to extra water from the milk). Because cattle were domesticated, there was likely also selection for cattle that produced more milk with better nutritional content, a good example of the coevolution of two species. A study of European cattle revealed that the geographic distribution of six milk protein genes in cattle was correlated with levels of lactase persistence in humans and with the locations of prehistoric sites associated with the early adoption of cattle farming (Beja-Pereira et al. 2003). As humans adapted to a diet that included milk, they also selected cattle that provided more and better-quality milk.

The high frequency of the lactase persistence allele in Europe and in some African populations poses an interesting problem in understanding the origin and spread of the allele. Did it arise in Europe and spread to Africa, or arise in Africa and then spread to Europe? Or did it evolve independently in both Europe and Africa? Molecular genetic analysis of the lactase persistence allele shows that the third hypothesis is correct. The specific mutation responsible for lactase persistence in Europeans is a change from the base C to the base T at a position 13,910 base pairs upstream of the lactase gene (hence the label T-13910). However, this variant is rare or absent in African populations, which instead carry a change from G to C at a position 14,010 base pairs upstream (C-14010). Thus, different mutations that produced lactase persistence appeared in different places, and each mutation was selected for independently when dairy farming arose and milk became an important part of the human diet (Tishkoff et al. 2007).

The estimated ages of the lactase persistence mutations fit the archaeological evidence for the domestication of cattle. Cattle farming began in northern Africa and the Middle East between 7500 and 9000 years ago, which fits the estimated age of 8000–9000 years for the T-13910 mutation in Europeans. The somewhat more recent age estimate of 2700–6800 years for the C-14010 mutation in Africa fits the later date of 3300–4500 years ago for cattle domestication in sub-Saharan Africa (Tishkoff et al. 2007).

It is clear from both the European and African evidence that lactase persistence increased in a very short time in an evolutionary sense. All of these changes, genetic and cultural, took place within the last 10,000 years, which represents a rapid pace of evolutionary change. Tishkoff et al. (2007) estimate a selection coefficient against lactase restriction of between s = 0.035 and s = 0.097. We know from Chapter 6 that natural selection can be rapid for a dominant allele. For example, selection for a dominant allele starting at a frequency of 0.001 with a selection coefficient of s = 0.05 can raise the allele frequency to 0.13 after 100 generations, 0.71 after 200 generations, and 0.87 after 300 generations. Using a generation length of 25 years, 300 generations is about 7500 years. We can play with the numbers, but it is clear that a lot of evolution is possible in 10,000 years!
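
The allele frequencies quoted above can be checked with a simple deterministic model. The Python sketch below is a minimal illustration, not necessarily the exact calculation used in Chapter 6: it assumes random mating, no drift, mutation, or gene flow, and relative fitnesses of 1 for LCT*P/LCT*P and LCT*P/LCT*R and 1 - s for LCT*R/LCT*R, so that selection acts only against the recessive homozygote. The function name `dominant_selection` is hypothetical.

```python
def dominant_selection(p0: float, s: float, generations: int) -> list[float]:
    """Track the frequency p of a favored dominant allele across generations.

    Assumed model: random mating, infinite population (no drift), no mutation
    or gene flow, and relative fitnesses PP = 1, PR = 1, RR = 1 - s.
    """
    freqs = [p0]
    p = p0
    for _ in range(generations):
        q = 1.0 - p
        # Mean fitness: p^2 * 1 + 2pq * 1 + q^2 * (1 - s) = 1 - s * q^2
        mean_fitness = 1.0 - s * q * q
        # Next generation: p' = (p^2 + pq) / mean_fitness = p / mean_fitness
        p = p / mean_fitness
        freqs.append(p)
    return freqs


GENERATION_YEARS = 25  # generation length used in the text
trajectory = dominant_selection(p0=0.001, s=0.05, generations=300)
for t in (100, 200, 300):
    print(f"generation {t} (about {t * GENERATION_YEARS} years): p = {trajectory[t]:.2f}")
```

The printed frequencies can be compared with the figures in the text, and rerunning with s = 0.035 or s = 0.097 shows how sensitive the timing is to the selection coefficient. Note how much less the frequency climbs between generations 200 and 300 than between 100 and 200: once the dominant allele is common, the remaining LCT*R copies sit mostly in heterozygotes, where selection cannot see them.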

7.1.5 Genetic Adaptation in High-Altitude Populations

The examples presented thus far have focused on adaptation through natural selection to disease and diet. There are also examples of how humans have adapted to different physical environments as our ancestors expanded out of Africa and spread across the world. One particular challenge has been the physiologic stress of living in a high-altitude environment. Some humans have adapted to living permanently at altitudes exceeding 2500 m (∼8200 ft, or 1.6 mi) above sea level (Beall and Steegmann 2000), and some high-altitude populations live as high as 5400 m above sea level (Beall 2007). The main stress of high-altitude environments is hypoxia, a shortage of oxygen. As altitude increases, barometric pressure decreases. Although oxygen still makes up the same percentage of the air, each breath therefore delivers less of it, so less oxygen is available in the blood and arterial oxygen saturation drops, causing severe physiologic stress (Frisancho 1993).
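
The link between altitude and oxygen availability can be made concrete with a rough calculation. The Python sketch below assumes an isothermal atmosphere, in which pressure falls exponentially with a scale height of about 8.4 km; this is a simplification (the real atmosphere cools with height), and the constants and function names are illustrative choices, not values from the sources cited here. Oxygen's share of dry air (about 20.95%) is treated as constant, so the partial pressure of oxygen falls in step with barometric pressure.

```python
import math

SEA_LEVEL_KPA = 101.325   # standard sea-level barometric pressure
O2_FRACTION = 0.2095      # oxygen's share of dry air, roughly constant with altitude
SCALE_HEIGHT_M = 8400.0   # assumed isothermal scale height (a simplification)


def barometric_pressure_kpa(altitude_m: float) -> float:
    """Approximate barometric pressure using an isothermal exponential model."""
    return SEA_LEVEL_KPA * math.exp(-altitude_m / SCALE_HEIGHT_M)


def oxygen_partial_pressure_kpa(altitude_m: float) -> float:
    """Partial pressure of O2 in dry air: total pressure times the O2 fraction."""
    return O2_FRACTION * barometric_pressure_kpa(altitude_m)


sea_level = oxygen_partial_pressure_kpa(0)
for alt in (0, 2500, 5400):
    po2 = oxygen_partial_pressure_kpa(alt)
    print(f"{alt:>5} m: PO2 = {po2:.1f} kPa ({po2 / sea_level:.0%} of sea level)")
```

Under these assumptions, the oxygen partial pressure at 2500 m is roughly three-quarters of its sea-level value, and at 5400 m roughly half.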

When a low-altitude native enters a high-altitude environment, the hypoxic stress can be countered to some extent by a variety of physiologic mechanisms, such as increased red blood cell production and increased respiration. Even with these responses, the stress can often be quite serious over time. Some adaptation occurs in infants and children who move to high altitude through a process known as developmental acclimatization, in which the body changes during growth in response to an environmental stress. For example, children born at low altitude who move to high altitude show an increase in aerobic capacity compared with those who stay at low altitude. Further, the younger the child who moves to high altitude, the greater the change (Frisancho 1993).

A number of studies have shown that children in high-altitude populations around the world tend to have increased chest dimensions, indicating greater lung volume relative to body size (Frisancho and Baker 1970; Frisancho 1993). Much of this growth appears to be the result of developmental acclimatization, as the growth response to high altitude is related to the age at which a child moves there; the earlier the age, the greater the response. However, there are exceptions to this general trend, which suggest that the growth response is not always solely developmental acclimatization, and there may be genetic differences as well (Greksa 1996; Beall and Steegmann 2000).

If there are at least some genetic influences on adaptation to the high-altitude environment, then some physiologic and biochemical traits may have been shaped by natural selection. More recent genetic studies have provided evidence of natural selection for life at high altitude, along with an interesting lesson about the nature of natural selection itself: different populations have adapted in different ways to the hypoxic stress of high altitude (Beall 2007; Storz 2010). Comparisons of two widely studied high-altitude populations have provided some interesting contrasts. One group is the native inhabitants of the Andean Plateau in South America, descended from human populations who moved into the region about 11,000 years ago. The other group is the native inhabitants of the Tibetan Plateau, who colonized the area about 25,000 years ago. Unlike low-altitude natives who visit high altitudes and suffer from hypoxic stress, members of these high-altitude populations have normal levels of oxygen consumption, although the physiologic pathways differ between Tibetans and Andeans. For example, increased ventilation is a typical response of a low-altitude native to the stress of high altitude. This response is also seen in Tibetans but not in Andeans, who have lower resting ventilation levels. Other differences between Tibetans and Andeans include the level of oxygen in the blood, the level of oxygen saturation in the blood, and hemoglobin concentration (Beall 2007). Work has begun to identify the genes responsible for high-altitude adaptations and how they have been selected for in Tibetan populations (e.g., Beall et al. 2010; Simonson et al. 2010; Yi et al. 2010).

Current (as of 2011) research suggests that Andean and Tibetan populations have evolved different genetic adaptations to high-altitude stress. This suggests that the two populations started with different initial genetic variation and that natural selection provided alternative means of adapting. The important lesson here is that selection may follow different paths depending on the genetic variation available, which, in turn, is influenced by mutation and genetic drift.

Apart from the evidence for multiple adaptive solutions, studies of the genetics of high-altitude adaptation provide yet another example of the rapid pace of recent human evolution, because these populations have lived at high altitude only within the past 11,000–25,000 years, a short time in evolutionary terms.

7.1.6 The Evolution of Human Skin Color

The final example of natural selection in this chapter deals with another example of human genetic adaptation to different environmental conditions. Human skin color (pigmentation) is a quantitative trait that shows an immense amount of variation between human groups around the world, ranging from some whose average level of pigmentation is very dark to those who are extremely light in color. Further, there are no apparent breaks in this distribution. Humans do not come in a set number of discrete shades; that is, humans are not made up of “black,” “brown,” and “white” people, but instead come in every shade along a continuum from very dark to very light (Relethford 2009).

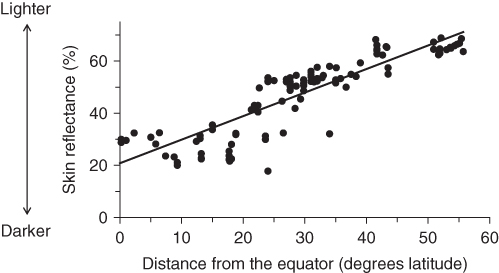

The wide range of variation in human skin color is one clue that it has been affected in the past by natural selection, and the specific geographic distribution of skin color is another. For native human populations (those who have not recently migrated), skin color tends to be darkest at or near the equator and becomes lighter with increasing distance from the equator, both north and south. This relationship is shown clearly in Figure 7.2, which plots skin color (typically measured as the percentage of light reflected off the skin at a wavelength of 685 nm) against distance from the equator for 107 human populations in the Old World (Africa, Europe, Asia, Australasia). Although there is some scatter, indigenous human populations in general show a strong correlation between skin color and distance from the equator. Such a striking correspondence with the physical environment points to natural selection, an inference strengthened by the fact that the amount of ultraviolet (UV) radiation received also varies with distance from the equator. UV radiation is strongest at the equator and diminishes with increasing distance from it. Skin color, levels of UV radiation, and distance from the equator are all highly correlated (Jablonski and Chaplin 2000). The obvious conclusion is that the evolution of human skin color has been linked to varying levels of UV radiation. Because pigmented skin acts as a protective barrier against UV radiation, dark skin color has evolved as protection against excessive UV radiation in areas at or near the equator. Farther from the equator, where levels of UV radiation are lower, the problem is not excessive but insufficient UV radiation, and lighter skin color likely evolved to allow more UV radiation to penetrate the skin. Given these geographic relations, we can turn to examining some possible selective forces that are related to UV radiation.

Figure 7.2 Geographic distribution of human skin color in the Old World. The dots represent 107 human population samples from the Old World, measured with an E.E.L. reflectometer, which records the percentage of light at 685 nm reflected off the skin of the upper inner arm, a site that is relatively unexposed in many human societies. Because skin color varies between the sexes, only male samples are included here (there are more studies of male skin color than of female skin color). Distance from the equator is taken as the absolute value of the latitude for each population. Data sources for 102 samples are listed in Relethford (1997), supplemented with data on five Australian native samples listed in Relethford (2000). The solid line is a linear regression showing the best fit of a straight line to the data points.
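
The regression line in Figure 7.2 is a least-squares fit of skin reflectance on distance from the equator. The Python sketch below shows that step on a tiny, entirely invented data set (the latitudes and reflectance values are hypothetical and are not the Relethford samples), and `fit_line` is a hypothetical helper rather than the software used for the figure. It also shows why the caption uses the absolute value of latitude: fitting against signed latitude mixes northern and southern populations and can hide the relationship.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x.

    Returns (intercept a, slope b, Pearson correlation r).
    """
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    b = sxy / sxx
    a = mean_y - b * mean_x
    r = sxy / math.sqrt(sxx * syy) if syy > 0 else float("nan")
    return a, b, r


# Hypothetical populations: signed latitude in degrees (negative = south of the equator).
latitudes = [-40.0, -20.0, 0.0, 20.0, 40.0]
# Invented reflectance values (% at 685 nm) that rise linearly with distance from the equator.
reflectance = [30.0 + 0.5 * abs(lat) for lat in latitudes]

distance = [abs(lat) for lat in latitudes]  # distance from the equator, as in Figure 7.2
a, b, r = fit_line(distance, reflectance)
print(f"vs |latitude|: intercept = {a:.1f}, slope = {b:.2f}, r = {r:.2f}")

a_s, b_s, r_s = fit_line(latitudes, reflectance)
print(f"vs signed latitude: slope = {b_s:.2f}, r = {r_s:.2f}")
```

In this symmetric toy example the fit against absolute latitude recovers the invented line exactly (r = 1), while the fit against signed latitude has a slope and correlation of zero, even though the underlying pattern is identical.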
