KEY TERMS

Acquired immune deficiency syndrome (AIDS)

Directly observed therapy (DOT)

Extensively drug-resistant tuberculosis (XDR TB)

Highly active antiretroviral therapy (HAART)

The appearance of acquired immune deficiency syndrome (AIDS) in the early 1980s challenged the widely held belief that infectious diseases were under control. However, there had been intimations during the previous few decades that the microbes were not as controllable as generally believed. The influenza virus was proving stubbornly unpredictable, deadly new variants of known bacteria were beginning to crop up, and the familiar old bacteria were becoming strangely resistant to antibiotics. That trend has continued, and the importance of public health in combating these growing problems has become increasingly apparent.

The Biomedical Basis of AIDS

By the turn of the 21st century, the exotic disease that had seemed to strike only gay men had turned into a worldwide scourge: The human immunodeficiency virus (HIV) now infects over 35 million individuals and kills more than 1.5 million a year.1 In the United States as of 2012, some 658,500 people had died of AIDS.2 Since the outbreak was first recognized, a great deal has been learned about HIV, how it causes AIDS, and how it is spread.

HIV is a retrovirus, a virus that uses RNA as its genetic material instead of the more usual DNA. Retroviruses have long been known to cause cancer in animals, and they were extensively studied for clues to the causes of human cancer, research that proved helpful for understanding the immunodeficiency virus when it was identified. Two human retroviruses—causing two types of leukemia—were known before HIV was discovered. Retroviruses infect cells by copying their RNA into the DNA of the cell, penetrating the genetic material like a “mole” in a spy agency. This DNA may sit silently in the cell, being copied normally along with the cell’s genetic material for an indefinite number of generations. Or it may take over control of the cell’s machinery, causing the uncontrolled reproduction typical of cancer.

The target of HIV is a specific type of white blood cell called the CD4-T lymphocyte, or T4 cell. T4 cells are just one of many components of the complicated immune machinery that is activated when the body recognizes a foreign invader such as a bacterium or a virus. The T4 cell’s role is to divide and reproduce itself in response to such an invasion and to attack the invader. In a T4 cell that is infected with HIV, activation of the cell activates the virus also, which then produces thousands of copies of itself in a process that kills the T4 cell. The T4 cells are a key component of the immune system because, in addition to attacking foreign microbes, they also regulate other components of the immune system, including the cells that produce antibodies, the proteins in the blood that recognize foreign substances. Thus destruction of the T4 cells disrupts the entire immune system.3

The course of infection with HIV takes place over a number of years. After being exposed to HIV, a person may or may not notice mild, flu-like symptoms for a few weeks, during which time the virus is present in the blood and body fluids and may be easily transmitted to others by sex or other risky behaviors. The body’s immune system responds as it would to any viral infection, producing specific antibodies that eliminate most of the circulating viruses. The infection then enters a latent period, with the viruses mostly hidden in the DNA of the T4 cells, although a constant battle is taking place between the virus and the immune system. Billions of viruses are made, and millions of T4 cells are destroyed daily.4 During this time, the person is quite healthy and is less likely to transmit the virus than during the early stage of infection (although transmission is still possible). Eventually, however, after several years, the immune system begins to lose the struggle, and so many of the T4 cells die that they cannot be replaced rapidly enough. When the number of T4 cells drops below 200 per cubic millimeter of blood, about 20 percent of the normal level, symptoms are likely to begin appearing, and the person is vulnerable to opportunistic infections and certain tumors. At the same time, the number of circulating viruses increases, and the person again becomes more capable of transmitting the infection to others.5 At this stage, the person meets the criteria for AIDS, which is defined by the T4 cell count and/or the presence of opportunistic infections.
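The criteria just described can be written as a simple rule. The following Python sketch is illustrative only: the function name and its parameters are assumptions made for this example, the threshold of 200 cells per cubic millimeter is the one given in the text, and actual clinical case definitions contain detail that is not covered here.

```python
# Threshold taken from the text: below 200 T4 cells per cubic millimeter of blood.
AIDS_T4_THRESHOLD = 200


def meets_aids_criteria(t4_count_per_mm3, has_opportunistic_infection):
    """Return True if either criterion named in the text is met.

    Hypothetical helper for illustration; not a clinical tool.
    """
    low_t4_count = t4_count_per_mm3 < AIDS_T4_THRESHOLD
    return low_t4_count or has_opportunistic_infection


print(meets_aids_criteria(650, False))  # False
print(meets_aids_criteria(150, False))  # True
print(meets_aids_criteria(650, True))   # True
```

The "and/or" in the definition corresponds to a logical OR: a low T4 count alone, an opportunistic infection alone, or both together satisfy the rule.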

The development and licensing of a screening test in 1985 was a major step forward in the fight against HIV. The test measures antibodies to the virus, which begin to appear 3 to 6 weeks after the original infection. This test is relatively fast and inexpensive; it is a sensitive screening test, giving the first indication that the individual may be HIV positive. The test is used for three purposes: diagnosing individuals at risk to determine whether they are infected so that they may be appropriately counseled and, if necessary, treated; monitoring the spread of HIV in various populations via epidemiologic studies; and screening donated blood or organs to ensure that they do not transmit HIV to a recipient of a transfusion or transplant. A major drawback of the antibody screening test is the absence of antibodies in the blood during the initial 3- to 6-week period after infection. This “window” of nondetectability may give newly infected people a false sense of security. More accurate tests that look for the virus itself in the blood are now available. These tests are used to confirm infection in people who have tested positive in the screening test. In the United States, they are also done on all donated blood to ensure that no virus-infected blood is used for transfusions.

Tests that directly measure a virus in the blood have contributed a great deal to understanding the biomedical basis of HIV infection. Measurement of “viral load”—the concentration of viruses in the blood—is a valuable tool for evaluating the effectiveness of therapeutic drugs. Viral load has also been found to influence the individual’s chances of transmitting the virus by sexual and other means. Thus a therapy that is effective in reducing viral load can help to control the spread of HIV.

The major pathways of HIV transmission vary in different populations. Homosexual relations between men are still the leading route of exposure for men in the United States. Injection drug use accounts for 10 percent of new HIV infections in Americans.2 Transmission by heterosexual relations, especially male to female, is becoming increasingly common in this country; it is the leading route of infection for females. In the developing countries of Asia and Africa, where HIV infection is spreading rapidly, heterosexual relations are the most common means of transmission. Several studies have found that circumcision protects men against contracting HIV from infected women; circumcision does not appear to protect women against contracting HIV from infected men. Studies of the effect of circumcision on male-to-male transmission have yielded mixed results.6

The sharing of needles is a common route of transmission in developing countries because of insufficient supplies of sterile equipment for medical use. In poor countries, including Russia and some nations in Eastern Europe, medical personnel often use one syringe repeatedly for giving immunizations or injections of therapeutic drugs. If one of the patients is HIV positive, this practice may transmit the infection to everyone who later receives an injection with the same needle. According to the World Health Organization (WHO), 40 percent of injections worldwide are given with unsterile needles.7 Transfusion with HIV-contaminated blood is no longer a significant source of HIV infection in the United States, but it still occurs in countries too poor to screen donated blood.

A special case of HIV transmission occurs from mother to infant, in utero or during delivery, in 25 to 33 percent of births unless antiretroviral drugs are given. The virus can also be transmitted to breast-fed babies in their mother’s milk. All infants of HIV-positive women will test positive during the first few months after birth. This is because fetuses in the womb receive a selection of their mothers’ antibodies, providing natural protection against disease (though not HIV) during their first months of life. Testing a baby’s blood for HIV antibodies provides evidence of the mother’s HIV status. Many states in the United States routinely perform HIV screening tests on newborns’ blood as part of their newborn screening programs. The special issues raised by maternal–fetal transmission of the virus have been the subject of ethical, legal, and political controversy at the national and state levels. Drug therapies are now capable of preventing transmission of the virus from mother to infant in 99 percent of cases.8 Similar drug treatment of mothers and/or infants can prevent transmission in breast milk.

In the United States, HIV/AIDS has become a disease of minorities. Although African Americans make up only about 12 percent of the U.S. population, almost half of new cases being diagnosed in recent years are among blacks. According to the Centers for Disease Control and Prevention (CDC), in 2013, the rate of infection was almost seven times higher in black men than in white men and 15 times higher in black women than white women.9 Hispanics are diagnosed at three times the rate of whites. Among the factors that contribute to the higher rates among minorities are the fact that people tend to have sex with partners of the same race and ethnicity; minorities tend to experience higher rates of other sexually transmitted diseases, which increase the risk of transmission of HIV; socioeconomic issues associated with poverty; lack of awareness of HIV status; and negative perceptions about HIV testing.10

Progress in treating HIV/AIDS over the past two decades has been dramatic. Early therapy focused on treating opportunistic infections, which were often the immediate cause of death in AIDS patients. The first antiretroviral therapy, zidovudine (AZT), was approved by the Food and Drug Administration (FDA) in 1987.11 The drug interfered with the replication of HIV by inhibiting the enzyme that copies the viral RNA into the cell’s DNA. However, the virus’s tendency to mutate rapidly leads to the development of resistance to the drug, meaning that its effectiveness can wear off.

As scientists gained a better understanding of the virus, they developed drugs that target different stages of viral replication. Protease inhibitors, which interfere with the ability of newly formed viruses to mature and become infectious, were introduced in 1995.11 At the same time, scientists recognized that treating patients with a combination of drugs that attack the virus in different ways reduces the opportunity for HIV to mutate and develop resistance. The introduction of these drug combinations, called highly active antiretroviral therapy (HAART), led to dramatic improvements in the survival of HIV-infected patients. As a result, the number of AIDS deaths fell by more than half between 1996 and 1998 and has continued to decline since then.12

The development of effective treatments for HIV/AIDS has had many beneficial consequences. HAART can reduce viral load to undetectable levels in the blood and body fluids of many patients, which greatly reduces the likelihood that the virus will be transmitted to others through sexual contact and other means. The availability of effective therapy also encourages at-risk people to be tested and counseled on ways to protect themselves and to prevent transmission of the virus to others. Scientists hoped that HAART would be able to completely eradicate HIV from the body, but this hope has not been realized. The virus manages to survive in protected reservoirs of the body, rebounding into active replication when the drugs are withdrawn. For some patients, side effects of the drugs can be severe and even fatal; about 40 percent of patients treated with protease inhibitors develop lipodystrophy, characterized by abnormal distributions of fat in the body, sometimes accompanied by other metabolic abnormalities.13 Moreover, the virus can develop resistance to these drugs if used improperly. A survey of blood samples taken between 1999 and 2003 found that 15 percent were resistant to at least one drug.14

New drugs continue to be developed, including a class called “fusion inhibitors,” introduced in 2003, which interfere with HIV’s ability to enter a host cell, and a class called integrase inhibitors, introduced in 2007, which prevent the virus from integrating into the genetic material of human cells.15 A totally new approach, published in 2014 but not ready for clinical application, uses genetic engineering to knock out a receptor on the membrane of T cells, making them resistant to HIV.16 Thus for many patients, HIV infection has become a chronic disease, necessitating life-long therapy but enabling them to live a relatively normal life. The drugs are expensive, however, costing an average of $23,000 per year per patient, and many insurance plans cover only a limited portion of the cost.17,18

The greatest hope for controlling AIDS, especially in the developing world where the new drugs are unaffordable, is to develop an effective vaccine. Prevention through immunization has been the most effective approach for the viral scourges of the past, including smallpox, measles, and polio. Early hopes for the rapid availability of a vaccine against AIDS have faded, however. In fact, after several promising vaccine candidates failed in clinical trials, the National Institutes of Health (NIH) held a meeting of vaccine researchers in March 2008, to reassess whether a vaccine will ever be possible and what new approaches could be tried.19 It should perhaps not be surprising that a virus so well adapted to disabling the immune system should be so effective at eluding attempts to employ that same immune system against it. Part of the difficulty in developing an effective vaccine is that the virus itself is constantly changing its appearance, making it unrecognizable to the immune mechanisms that are mobilized against it by a vaccine. This quality is common to RNA viruses. Another difficulty is that there is no good animal model for studying HIV/AIDS.20

At present, the most effective way to fight AIDS is to prevent transmission (step 3 in the chain of transmission). This requires education and efforts at motivating people to change their high-risk behavior, an exceedingly difficult task.

HIV seems to have appeared from nowhere and to have spread over the entire world within a decade. Where did the virus come from? Genetic studies of HIV show that it is related to viruses that commonly infect African monkeys and apes, and it seems likely that a mutation allowed one of these viruses to infect humans. There is evidence that this type of event—cross-species transmission of viruses—may occur fairly frequently. Monkeys and chimpanzees are killed for food in parts of Africa, which could explain how humans were exposed.11 HIV is remarkable, however, for the speed with which it has spread into the human population worldwide.

Scientists conjecture that the human form of the virus may have existed in isolated pockets of Africa for some time, but that its rapid spread was the result of social conditions in Africa and the United States in the late 1970s. Because symptomatic AIDS does not appear until several years after the original infection, the first victims of the 1980s were probably infected in the early and mid-1970s. Investigators trying to track the early spread of the epidemic have gone back to test stored blood samples drawn in earlier times, and they have found HIV-infected samples from as early as 1966, in the blood of a widely traveled Norwegian sailor who died of immune deficiency. The sailor’s wife and one of his three children later died of the same illness, and their stored blood, when tested, was also found to be infected with HIV.21 An even older blood sample drawn from a West African man in 1959 has been found to contain fragments of the virus, but it is not known whether the man developed AIDS.22 This evidence implies that sporadic early outbreaks of the disease occurred in isolated African villages, going undetected for decades.

The reasons for the recent emergence of HIV disease as a significant problem include the disruption of traditional lifestyles by the movement of rural Africans to urban areas, trends magnified by population growth, waves of civil war, and revolution. The apparent worldwide explosion of AIDS then occurred because of changing patterns of sexual behavior and the use of addictive drugs in developed and developing countries, together with the ease of international air travel.

Ebola

In 1976, before the AIDS epidemic was recognized but while, as scientists now believe, the virus was spreading silently into African cities, another viral illness broke out with much more dramatic effect in Zaire and Sudan. Symptoms caused by the previously unidentified Ebola virus include fever, vomiting and diarrhea, and severe bleeding from various bodily orifices. Several hundred people became ill from the disease, and up to 90 percent of its victims died. The disease spread rapidly from person to person, affecting especially family members and hospital workers who had cared for patients. Investigators from the CDC and WHO identified the virus and helped devise measures, including quarantine, to limit the spread of the disease, which eventually disappeared. The Ebola virus broke out again in Zaire in the summer of 1995, killing 244 people before it again seemed to vanish.23 Since then, there have been repeated outbreaks in West and Central Africa. According to CDC data, more than 800 Africans died of Ebola between 1996 and early 2013.24

The Ebola virus infects monkeys and apes as well as humans, and on a number of occasions infected monkeys have been imported into the United States. In 1989, a large number of monkeys imported from the Philippines died of the viral infection at a primate quarantine facility in Reston, Virginia. In that episode, which served as the basis for Richard Preston’s book, The Hot Zone, several laboratory workers were exposed to the virus, which fortunately turned out to be a strain that did not cause illness in humans.25 Fruit bats, common in African jungles, are thought to serve as the reservoir for the virus between outbreaks in the human population.26 There are indications that, like HIV, Ebola may spread to humans when they handle the carcasses of apes used for food. Unlike HIV, the Ebola virus kills the apes it infects, leading to significant declines in populations of gorillas and chimpanzees; outbreaks in humans have been preceded by the discovery of dead animals near villages where the outbreaks occur.27 There is now concern that Ebola may be pushing West African gorillas to extinction. Attempts to develop a vaccine for humans have had success in protecting monkeys in laboratory studies; whether such a vaccine could be delivered safely to wild gorillas is uncertain.28

Figure: Ready to treat an Ebola patient.

In 2014, a major Ebola epidemic spread through the populations of several countries in West Africa. Hardest hit were Liberia, Guinea, and Sierra Leone, poor countries that have been plagued by political unrest and inadequate medical care systems. Ebola spread easily to healthcare workers and to family members who cared for patients. Corpses of people who died of the disease teemed with the virus, and the West African funeral customs of touching and kissing the dead contributed to the contagiousness of the disease. An explosion of cases in Sierra Leone was triggered by the funeral of a traditional healer in early summer.29 Medical workers learned to don protective clothing that covered all surfaces of their bodies. However, in the heat of the West African summer, it was hard for workers to spend much time in such cumbersome garb.

More than 28,600 cases, with about 11,300 deaths, have been reported in the West African epidemic, and those numbers are thought to be undercounts.24 This time, the virus came to the United States. The first patient was a Liberian man who became ill while visiting relatives in Dallas, Texas, in September 2014. Thomas Eric Duncan was taken to a hospital, examined, and sent home with antibiotics. Although hospital staff were told he had been in Liberia, the information did not trigger alarm, and Ebola was not suspected. Three days later, Duncan’s condition worsened and he was taken back to the hospital, where he died on October 8.30 Two nurses who cared for him contracted the disease within days of his death. They were treated at two of four hospitals in the United States that have special units for treating dangerous infectious diseases: Emory University Hospital and the National Institutes of Health Clinical Center. Both women recovered.31 Another American patient, Dr. Craig Spencer, arrived in New York in late October after treating patients in Guinea with Doctors Without Borders. He had been monitoring himself and was hospitalized at Bellevue Medical Center when he developed a fever. Dr. Spencer also recovered.32
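The reported totals allow a rough calculation of the proportion of reported cases that ended in death, often called the crude case fatality ratio. The Python sketch below uses only the two figures given above; the function name is an assumption made for this example, and because the counts are thought to be undercounts, the result should be read as an approximation rather than the true death rate.

```python
def crude_case_fatality_ratio(deaths, cases):
    """Deaths divided by reported cases (hypothetical helper for illustration)."""
    if cases <= 0:
        raise ValueError("cases must be positive")
    return deaths / cases


# Figures from the text: about 11,300 deaths among more than 28,600 reported cases.
cfr = crude_case_fatality_ratio(11_300, 28_600)
print(f"{cfr:.1%}")  # 39.5%
```

A ratio computed from cumulative reports can differ considerably from the death rate observed among patients whose outcomes are fully known, so the two kinds of figures are not directly comparable.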

The better outcomes achieved by American patients, compared with the high death rate among West Africans, which in some West African countries was more than 70 percent, are due in part to the excellent supportive care provided to them in U.S. hospitals. One measure some of them received was transfusion with serum from survivors, which contains antibodies to the virus. Some patients were treated with ZMapp, an experimental drug. Whether either of these treatments contributed to their survival is not certain and would need to be tested in a clinical trial. A surprising finding has been that, even after a patient appears to be fully recovered, the virus may linger in his or her body. Male survivors have been warned to use condoms because their semen contains Ebola virus for a still unknown period after recovery. Dr. Ian Crozier, who contracted the disease when working with WHO in Sierra Leone and was evacuated to Emory University Hospital in September 2014, learned after his discharge that one eye was badly infected with the virus and that he was in danger of losing his sight. He was treated with an experimental drug and gradually recovered his vision. Many of the survivors have also reported other aftereffects of the disease, including extreme fatigue, joint and muscle pain, and hearing loss.33

Several drugs appear to show promise in treating Ebola, although none has yet proved itself effective in a clinical trial. ZMapp, a combination of three antibodies, proteins that can attach to the virus and neutralize it, has shown promise in monkey studies. It may have helped the nine patients in the United States who were treated with it, many of them healthcare workers returned from Africa. A drug called favipiravir, developed in Japan as a treatment for influenza, works by interfering with the virus’s ability to copy itself. It was given to a number of patients in Guinea and appeared to be helpful in patients whose viral load was low to moderate. An older anti-Ebola drug, TKM-Ebola, created to fight the strain prevalent in an earlier outbreak in Central Africa, has been adapted to the current West African strain and was found to be effective in treating monkeys infected with the virus. In March 2015, a clinical trial of TKM-Ebola was launched in Sierra Leone, where the disease was still spreading.34,35

Developing and testing these drugs has been difficult, time-consuming, and controversial. They all require genetic engineering. Supplies of ZMapp were limited early in the epidemic, and they were exhausted by their use for American patients. In response to criticism that the drugs were not given to Africans, who suffered the brunt of the epidemic, one South African researcher was quoted as saying, “It would have been a front-page screaming headline: ‘Africans used as guinea pigs for American drug company’s medicine.’”36 As supplies of all three drugs became more plentiful and clinical trials were being planned, the epidemic began to wane in West Africa. Several trials were cancelled, but the one on TKM-Ebola in Sierra Leone was completed. Results are not yet available.

Trials of an experimental vaccine, conducted by CDC and several institutions in Sierra Leone, are under way, focusing on workers most likely to be exposed to Ebola, including doctors and nurses, cleaning staff, ambulance teams, and burial workers. Several other vaccines are also being tested. The waning of the epidemic by the time the trials began in April, while good news, makes it more difficult to determine the efficacy of the vaccines.37 While the need for drugs and vaccines may be ending for now, all Ebola experts expect that there will be more epidemics in the future.

Publicity about Duncan and healthcare workers who were exposed to Ebola through him or in West Africa caused alarm in the United States. The fact that Dr. Spencer had spent several days in New York City on his return from Africa, dining out, bowling, and taking the subway before he began to feel ill, raised concern, although no one was infected by his actions.38 In October 2014, the governors of New York and New Jersey announced that all healthcare workers returning from West Africa would be quarantined. The first person affected by this policy was Kaci Hickox, a nurse who had worked with Doctors Without Borders in Sierra Leone treating Ebola patients. After a grueling two-day journey from Africa, she was greeted at Newark Liberty Airport by a frenzy of fear and disorganization. After being detained for hours among officials who had donned coveralls, gloves, and face shields, Hickox was sent to a nearby hospital. There she was placed in a tent with a toilet but no shower and told she would be kept there for a 21-day mandatory quarantine.39

Hickox appeared on a Sunday talk show to criticize the policy of New Jersey Governor Chris Christie. She noted that Ebola is infectious only after a patient begins to show symptoms and that she had not had symptoms. Moreover, a blood test had found no evidence of Ebola infection. Hickox hired a legal team to defend her civil rights, and Christie, after four days, freed her from the quarantine and arranged for her to be driven to her home in Maine. She never developed the disease.40

After the Hickox fiasco, policies on quarantine eased in most of the United States as science began to guide policy. It was recognized that mandatory isolation would discourage medical volunteers from going to West Africa to help eradicate the epidemic at its source. Most returning workers were willing to endure a milder form of quarantine at home, being monitored by public health workers, taking their temperature twice a day, and keeping a distance of three feet from others when in public.41

Other Emerging Viruses

Other new or resurgent viruses have appeared in various parts of the world, including the United States, in the recent past. In May and June 2003, for example, public health authorities in Illinois and Wisconsin received reports of a disease similar to smallpox among people who had had direct contact with prairie dogs. Prompt investigation by state officials and the CDC identified the cause as monkeypox virus, which was known from outbreaks in Africa. Although the virus is known to infect monkeys, its primary hosts are rodents.42

The outbreak in the United States spread to 72 people in six Midwestern states. Fortunately, monkeypox is not highly contagious in humans, and it is a less severe disease than smallpox. No one died in the outbreak. However, the incident raised alarms about exotic pets. The illness in the prairie dogs was traced back through pet stores and animal distributors to an Illinois distributor, who in April had imported several African rodents, including a Gambian giant rat that had died of an unidentified illness. In June 2003, the U.S. government banned the import of all rodents from Africa. Careful surveillance and isolation of exposed people and animals halted the outbreak by the end of July, and no further cases of monkeypox have been reported since then.43

In 1993, the CDC was called in when two healthy young New Mexico residents living in the same household died suddenly within a few days of each other of acute respiratory distress, their lungs filled with fluid. Within three weeks, biomedical scientists had recognized that the illness, which had claimed several other victims in the Four Corners area of the Southwest, was caused by hantavirus. Named after the Hantaan River in Korea, the hantavirus had been responsible for kidney disease among thousands of American soldiers in Korea during the 1950s. In New Mexico, the virus was found to be carried by deer mice, which had been unusually plentiful in the Four Corners area because of an unusually wet winter. All of the human victims of hantavirus had had significant exposure to mouse droppings, either in their homes or in their places of work.44

The CDC declared hantavirus pulmonary syndrome (HPS) a notifiable disease in 1995, and as of the end of 2013, 606 cases had been reported in 34 states.45 Over one-third of the victims have died, often in a matter of hours. It was hoped that adding HPS to the list of notifiable diseases would help medical workers to recognize it more readily. In the case of a Rhode Island college student who may have contracted the disease in 1994 from exposure to mouse droppings while making a film at his father’s warehouse, the hospital did not recognize that he was seriously ill and sent him home from the emergency room the first time he appeared there; two days later he returned much sicker, and he died five hours after being hospitalized.46

Rodents are suspected as carriers of several hemorrhagic fevers with symptoms similar to those caused by hantavirus or the Ebola virus: Bolivian hemorrhagic fever (caused by the Machupo virus), Argentine hemorrhagic fever (caused by the Junin virus), and Lassa fever in Sierra Leone are all carried by rats. Well-known, insect-borne viruses, such as yellow fever and equine encephalitis, are resurgent in areas of South and Central America where they had been thought to be vanquished. Dengue fever, also spread by mosquitoes, has taken on the new, deadly form of a hemorrhagic fever and is spreading north through Central America, threatening people along the southern border of the United States.23

In the summer of 1999, the United States first experienced the effects of West Nile virus, which spread rapidly across the country over the next few years. The first sign of the new disease was a report to the New York City Health Department by an infectious disease specialist in Queens, New York, that an unusual number of patients had been hospitalized with encephalitis, an inflammation of the brain. The disease was suspected to be St. Louis encephalitis, a mosquito-borne disease that is endemic in the southern United States, and the diagnosis was supported by the patients’ reports that they had been outdoors in the evenings during peak mosquito hours. Soon, however, it became obvious that a great number of dead crows were being found in the New York area, and a veterinarian at the Bronx Zoo reported that there had been unprecedented deaths among the zoo’s exotic birds. Lab tests confirmed that the virus causing the human disease was the same as the one that was killing the birds, but St. Louis encephalitis virus was not known to kill birds. The cause proved instead to be West Nile virus, which was well known in Africa, West Asia, and the Middle East. It is known to be fatal to crows and several other species of birds. It also infects horses. Fifty-six patients were hospitalized in the New York epidemic, seven of whom died.47,48

How the West Nile virus came to New York is not known. The most likely explanation is that it came in an infected bird, perhaps a tropical bird that was smuggled into the country. The virus is easily spread among birds by several species of mosquitoes, some of which also bite humans. Although the threat disappeared with the mosquitoes after the first frost in the fall, the next summer saw a spread of the disease to upstate New York and surrounding states. Carried by migratory birds, the virus has now arrived in all 48 contiguous states.49 It appears that West Nile virus is here to stay. In 2013 it caused illness in 2469 people.50 While the infection proves fatal to a small percentage of human victims, it often leaves patients with long-term impairments, including fatigue, weakness, depression, personality changes, gait problems, and memory deficits.51

Public health professionals fight the virus by educating the public about eliminating standing water where mosquitoes breed, wearing long sleeves, and using insect repellent. A vaccine is available for horses, and scientists are working on developing a vaccine that will be effective for humans.

A variety of environmental factors are responsible for the recent emergence of so many new pathogens. Human activities that cause ecological changes, such as deforestation and dam building, bring people into closer contact with disease-carrying animals. Modern agricultural practices, such as extreme crowding of livestock, intensify the risk that previously unknown viruses will incubate in crowded herds and be widely dispersed to human consumers. International distribution of meat and poultry may help to spread new pathogens. The popularity of exotic pets in the United States also can lead to the spread of pathogens, like monkeypox and perhaps West Nile virus, from animals or birds to people. A breakdown of public health efforts such as mosquito control programs because of complacency or insufficient funding has resulted in the reappearance of insect-borne diseases. Spread of the viruses in developing countries is facilitated by urbanization, crowding, war, and the breakdown of social restraints on sexual behavior and intravenous drug use. U.S. residents will not be able to escape the effects of these new pathogens. The ease and speed of international travel mean that a new infection first appearing anywhere in the world could traverse entire continents within days or weeks. This was dramatically illustrated by the emergence and rapid spread of SARS. The SARS virus is believed to have been transmitted to humans from an animal species used for food in China, possibly the civet cat.

Influenza

Influenza, the “flu,” may seem like an old familiar infectious disease. However, it can be a different disease from one year to the next and has the capacity to turn into a major killer. This happened in the winter of 1918–1919, when the flu killed 20 million to 40 million people worldwide, including 196,000 people who died in the United States in October 1918 alone.52 Even in the average year, influenza kills 250,000 to 500,000 people worldwide.53 Although normally most deaths from flu occur in people above age 65, the 1918 epidemic preferentially struck young people.

Influenza virus has been studied extensively, and vaccination can be effective, but constant vigilance is necessary to protect people from the disease. Like HIV, influenza is an RNA virus, constantly changing its appearance and adept at eluding recognition by the human immune system. Because of the year-to-year variability of the flu virus, flu vaccines must be changed annually to be effective against the newest strain. Each winter, viral samples are collected from around the world and sent to WHO, where biomedical scientists conduct experiments designed to predict how the virus will mutate into next year’s strain. These educated guesses form the basis for next year’s vaccine.

At unpredictable intervals, however, a lethal new strain of the flu virus can come along, as it did in 1918. A strain that caused the Asian flu emerged in 1957, and a third, called Hong Kong flu, arrived in 1968. Neither of the latter outbreaks was as deadly as the 1918 epidemic, although a not insignificant 70,000 Americans died from the Asian flu. In 1976, CDC scientists thought they had evidence that another deadly strain—swine flu—was emerging, and the country mobilized for a massive immunization campaign. That time, the scientists had made a mistake; the anticipated epidemic never occurred. Infectious disease experts have for decades been expecting a new epidemic, which did occur in 2009, as discussed later in this section.

New strains of influenza virus, especially those that have undergone major changes, tend to arise in Asia, particularly China, and then spread around the world from there. One of the reasons China is an especially fertile source of new flu strains is that animal reservoirs for influenza—pigs and birds—are common there, living in close proximity to humans. Human and animal influenza viruses incubate in a pig’s digestive system, forming new genetic combinations, and are then spread by ducks as they migrate. While such hybrid viruses, containing human and animal genes, are only rarely capable of infecting humans, those that are able to do so are the most likely to be deadly.

Until recently, little was known about what made the 1918 strain of influenza so deadly, or how to predict the lethality of new strains that come along. Recently, however, genetic studies have been possible using samples of the 1918 virus. The samples came from two sources: tissue taken from soldiers who died in 1918, which had been stored at the Armed Forces Institute of Pathology in Washington, D.C., and tissue from victims in an Alaskan village who were buried in permanently frozen ground. Scientists have found that the 1918 virus has features in common with avian flu viruses that make it especially dangerous to humans, and that it also resembles human flu viruses enough to spread easily among people.54 Similar avian features were found in the viruses that caused epidemics in 1957 and 1968.

Thus influenza experts were alarmed in 1997, when a 3-year-old Hong Kong boy died from a strain of influenza virus that normally infected chickens. There had been an epidemic of the disease in the birds a few months earlier. Antibodies to the virus were found in the blood of the boy’s doctor, although he did not become ill, and public health authorities watched for more cases with great concern. Two dozen other people became sick by December, and six died. To prevent further transmission from chickens to humans, the Hong Kong government ordered that all 1.5 million chickens in the territory be killed. That action seems to have been effective in halting the epidemic in humans.55

Bird flu emerged again in 2003 and has become widespread in Asia, Africa, Europe, and the Middle East, despite efforts to eliminate it by killing millions of birds.56 Between 2003 and the end of 2013, 649 human cases were reported in 16 countries, and more than half of those infected died.57 Thus far it appears that most of the human victims of the bird flu caught the virus from chickens, not from other humans. There is great concern that mixing of the viral genes could occur in a person infected with both the bird virus and a human flu virus, resulting in a much more virulent strain capable of spreading among humans. The new virus could start a global pandemic of a lethal form of the disease, as in 1918.

An outbreak of avian influenza struck the United States in spring 2015. Millions of turkeys and chickens have died or been culled in Midwestern states. No humans have caught this flu, but there is concern that human infection could occur. This virus, which shares some genes with the avian flu that infected poultry in Asia and Europe, is believed to have been carried to this continent by migrating ducks, geese, and swans. When it arrived in the New World, the virus mixed with genes from North American viruses. The National Institutes of Health developed an experimental vaccine against the bird flu when it first affected humans in Asia. The CDC is considering whether that vaccine might provide some protection to workers dealing with infected flocks. The CDC is also working on a vaccine against the new virus.58

In late 2011, controversy arose over research funded by the National Institutes of Health that created a highly transmissible form of the avian flu virus. The work was done in ferrets, which are a good model of how flu viruses behave in humans. Two groups of researchers, at the University of Wisconsin and a Dutch university, created mutations in the virus that enabled it to spread by aerosol. The National Science Advisory Board for Biosecurity asked that details of the experiments be withheld from publication to prevent terrorists from replicating them.59

However, a meeting of the WHO in February 2012 concluded that the risk of bioterrorists using the findings was outweighed by the danger that changes might occur naturally in the wild that would give the virus the ability to cause a pandemic.60 Full publication will allow scientists to recognize warning signals that the virus is becoming more dangerous, and it might also lead to better treatments.

The public health approach to influenza control can serve as a model for how to predict and possibly prevent the spread of other new viral threats. As the AIDS epidemic has shown, a new virus can come from “nowhere” and wreak havoc all over the world within a few years. Complacency over the “conquest” of infectious diseases has led governments to cut budgets and reduce efforts at monitoring disease. That should not happen again. The public health information-gathering network is more important than ever.

New Bacterial Threats

Bacteria, which a few decades ago seemed easily controllable because of the power of antibiotics to wipe them out, have, like viruses, emerged in more deadly forms. Previously unknown bacterial diseases such as Legionnaires’ disease and Lyme disease have appeared. More baffling is the fact that some ordinary bacterial infections have turned unexpectedly lethal. A great cause for concern is the development of resistance to drugs. Resistance can spread among pathogens of the same species and even from one bacterial species to another.

Legionnaires’ disease and Lyme disease are not new, but only recently have they become common enough to be recognized as distinct entities and for their bacterial causes to be identified. Legionella bacteria were able to flourish in water towers used for air conditioning. Regulations requiring antimicrobial agents in the water have been effective in limiting the spread of Legionnaires’ disease. The conditions that promote the spread of Lyme disease, however, are more difficult to change. The pathogen that causes Lyme disease was identified in 1982 as a spirochete that is spread by the bite of an infected deer tick.61 The reservoir for Lyme disease is the white-footed mouse, on which the deer tick feeds and becomes infected. Deer, on which the ticks grow and reproduce, are an important step in the chain of infection, and it is because of the recent explosion in the deer population in suburban areas that Lyme disease has now become such a problem for humans.

Infection with streptococci, the bacteria that cause strep throat, had normally been easily cured with penicillin. However, for reasons that are not well understood, a more lethal strain of the bacteria, called group A streptococci, has become increasingly common. The sudden death of Muppets puppeteer Jim Henson in 1990 from fulminating pneumonia and toxic shock was caused by this new, virulent strain. The headline-grabbing “flesh-eating bacteria” that infect wounds to the extent of necessitating amputations and even causing death are also group A streptococci. The group A strain, which produces a potent toxin, was prevalent in the early part of the 20th century, when it caused scarlet fever, frequently fatal in children, and rheumatic fever, which often caused damage to the heart. For decades, the group A strain was superseded by strains B and C, which were much milder in their pathogenic effects. But now, for reasons that are not clear, the group A strain has become much more prevalent.62,63

Another bacterium that has recently become more deadly is Escherichia coli, which is normally present without ill effect in the human digestive tract. In 1993, the new threat gained national attention when a number of people became severely ill after eating hamburgers at a Jack in the Box restaurant in Seattle, and four children died of kidney failure. The culprit was found to be a new strain of E. coli, which had acquired a gene for shiga toxin from a dysentery-causing bacterium. The toxin, against which there is no treatment, causes kidney failure, especially in children and the elderly. The shiga toxin gene had “jumped” from one species of bacteria to another while they were both present in human intestines. The resulting strain, called E. coli serotype O157:H7, is now quite common in ground beef, leading public health authorities to recommend or require thorough cooking of hamburgers.21(p.427) The “jumping gene” phenomenon has also been found in cholera and diphtheria bacteria, bacterial strains that can be benign or virulent depending on the presence or absence of genes that produce toxins.

Since the finding that E. coli O157:H7 is common in hamburger, it has been discovered to cause illness by a number of other exposures, including unpasteurized apple cider and alfalfa sprouts.64(pp.160–161) In 1999, there was an outbreak in upstate New York among people who had attended a county fair. The bacteria were found in the water supplied to food and drink vendors. It turns out that E. coli O157:H7 is widespread in the intestines of cattle, especially calves, which excrete large quantities of the bacteria in manure. The manure may contaminate apples fallen from trees or other produce, which if not thoroughly washed before being consumed, may spread the disease to people. At the New York State county fair, the water was contaminated because heavy rain washed manure from the nearby cattle barn into a well.65 A vaccine against the toxic bacteria has been approved for cattle in the hope of reducing the risk of human exposure.66

Perhaps the most disturbing development in infectious diseases is the antibiotic resistance among many species of bacteria, a development that leaves physicians powerless against many diseases they thought to be conquered. The process by which bacteria become resistant to an antibiotic is a splendid example of evolution in action. In the presence of an antibiotic drug, any mutation that allows a single bacterium to survive confers on it a tremendous selective advantage. That bacterium can then reproduce without competition from other microbes, transmitting the mutation to its offspring. The result is a strain of the bacteria that is resistant to that particular antibiotic. The mutated gene can also “jump” to other bacteria of the same or different species by the exchange of plasmids, small pieces of DNA that can move from one bacterial organism to another. Different mutations may be necessary to confer resistance to different antibiotics. Some bacteria become resistant to many different antibiotics, making it very difficult to treat patients infected with those bacteria.
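
The selection process described above can be illustrated with a short numerical sketch. The Python example below is not drawn from any study cited in this chapter; the starting counts, the doubling rate, and the assumption that the drug removes 99 percent of susceptible cells in each generation are all hypothetical values chosen only to make the arithmetic easy to follow. It shows how a single resistant cell among a million susceptible ones can come to dominate the population within a few generations of continuous antibiotic exposure.

```python
def simulate_selection(susceptible, resistant, generations,
                       growth=2.0, kill_fraction=0.99):
    """Return the resistant share of the population after each generation.

    Every generation both groups multiply by `growth`; the antibiotic then
    removes `kill_fraction` of the susceptible cells and none of the
    resistant ones. All parameter values are illustrative assumptions.
    """
    history = []
    for _ in range(generations):
        susceptible = susceptible * growth * (1.0 - kill_fraction)
        resistant = resistant * growth
        history.append(resistant / (susceptible + resistant))
    return history


# Hypothetical starting point: one resistant mutant among a million cells.
fractions = simulate_selection(susceptible=1_000_000, resistant=1, generations=5)
for generation, share in enumerate(fractions, start=1):
    print(f"generation {generation}: resistant share = {share:.4f}")
```

The model ignores plasmid exchange, the immune system, and competition for nutrients, so it should be read only as a picture of selective advantage, not as a prediction for any real infection.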

Improper use of antibiotics favors the development of resistance, and the current widespread existence of resistant bacteria testifies to the carelessness with which these life-saving drugs have been used. For example, since antibiotics are powerless against viruses, the common practice of prescribing these drugs for a viral infection merely affords stray bacteria the opportunity to develop resistance. Another example of improper use is the common tendency of patients to stop taking an antibiotic when they feel better instead of continuing for the full prescribed course. The first few days’ dose may have killed off all but a few bacteria, the most resistant, which may then survive and multiply, becoming much more difficult to control. In some countries, antibiotics are available without a prescription, increasing the likelihood that they will be used improperly.

A practice that significantly contributes to antibiotic resistance is the widespread use of low doses of antibiotics in animal feeds to promote the growth of livestock and to prevent disease among animals living in crowded, unsanitary conditions. More antibiotics are used in this manner than in medical applications, and the practice has clearly led to the survival of resistant strains of bacteria that may not only contaminate the meat but also spread antibiotic resistance genes to other bacteria.67 Studies have shown that these “superbugs” can be transmitted to humans.68 Because the agricultural industry benefits from the practice and has fought restrictions, the government has found it difficult to impose regulations on it. In 2013, the FDA took the step of asking antibiotics manufacturers to modify their labels in a way that discourages overuse of the drugs. The FDA also called for all use of antibiotics in farm animals to be overseen by veterinarians.69

The bacteria Salmonella and Campylobacter are estimated to cause 3.8 million cases of food-borne illness in the United States each year. In a 1999 study, 26 percent of the salmonella cases and 54 percent of the campylobacter cases were found to be resistant to at least one antibiotic, probably because of antibiotic use in animal feed.70 Resistance to erythromycin and other common antibiotics is increasingly found in group A streptococci, the lethal strain discussed previously.71 Infection with methicillin-resistant Staphylococcus aureus (MRSA) is a major problem in hospitals, burn centers, and nursing homes, where hospital staff may carry the bacteria from one vulnerable patient to another. Intensive efforts have succeeded in reducing the rates of MRSA infections in hospitalized patients by 54 percent between 2005 and 2011.72 Healthcare-associated infections, many of them caused by drug-resistant bacteria, are estimated to contribute to some 100,000 deaths annually in the United States.73

Multidrug-Resistant Tuberculosis (MDR TB)

Tuberculosis, which is spread by aerosol, used to be a major killer in the United States. Between 1800 and 1870, it accounted for one out of every five deaths in this country. Worldwide, it is still the leading cause of death among infectious diseases. It is a disease associated with poverty, thought to be conquered in the affluent United States, where the incidence of tuberculosis steadily declined between 1882 and 1985. Much of the success came from the early public health movement, which emphasized improvement of slum housing, sanitation, and pasteurization of cows’ milk, which harbored a bovine form of the bacillus that was pathogenic to humans. Patients were isolated in sanatoriums, where they were required to rest and breathe fresh air and, incidentally, were prevented from infecting others. With the introduction of antibiotics in 1947, mortality from tuberculosis was dramatically reduced, sanatoriums were closed, and tuberculosis seemed vanquished.

In 1985, however, the trend reversed. There were several reasons for the increase in the incidence of tuberculosis, which was particularly concentrated in cities and among minority populations. The HIV epidemic was certainly a major factor. People with defective immune systems are more susceptible to any infection, but HIV-positive people are especially vulnerable to tuberculosis. An increasing homeless population and the rise in intravenous drug use, both of which are associated with HIV infection, were also factors in increasing tuberculosis rates. Homeless shelters, prisons, and urban hospitals are prime sites for the transmission of tuberculosis.

But tuberculosis is not limited to the “down and out” or those who participate in high-risk behavior. “The principal risk behavior for acquiring TB infection is breathing,” as one expert says.74(p.1058) People have been infected with tuberculosis bacilli in the course of a variety of everyday activities: a long airplane trip sitting within a few rows of a person with active tuberculosis,75 hanging out in a Minneapolis bar frequented by a homeless man with active tuberculosis,76 and, most frighteningly, attending a suburban school with a girl whose tuberculosis went undiagnosed for 13 months.77

When tuberculosis bacilli are inhaled by a healthy person, they do not usually cause illness in the short term. Most often, the immune system responds by killing off most of the bacilli and walling off the rest into small, calcified lesions in the lungs called tubercles, which remain dormant indefinitely. Evidence that a person has been exposed shows up in tuberculin skin tests, which cause a conspicuous immune response when a small extract from the bacillus is injected under the exposed person’s skin. For reasons that are not well understood, probably having to do with individual immune system variations, a small percentage of people develop active disease soon after exposure; others may harbor the latent infection for years before it becomes active, if ever. Infected people have a 10 percent lifetime risk of developing an active case. The risk for people who are HIV positive is much higher: up to 50 percent.64 Most cases of active tuberculosis are characterized by growth of the bacilli in the lungs, causing breakdown of the tissue and the major symptom—coughing—which releases the infectious agents into the air.

Before the introduction of antibiotics, about 50 percent of the patients with active tuberculosis died. Antibiotics dramatically reduced not only the mortality rate but the incidence rate as well, since the medication relieved coughing and therefore inhibited the spread of disease. However, the development of multidrug resistance (MDR) in some strains of the bacilli has meant that the disease is much more difficult and expensive to treat, and the mortality rate is much higher.

The increased prevalence of antibiotic-resistant strains in all parts of this country during the 1980s is thought to have arisen because many patients did not take their medications regularly. The tuberculosis bacillus is a particularly difficult pathogen to deal with because it grows slowly and because diagnostic testing can take several weeks. Once the disease is diagnosed, even the most potent antibiotic must be taken for several months to wipe out the pathogens. Patients commonly begin to feel better after 2 to 4 weeks of taking an effective prescribed drug and, if they stop taking the medication at that point, they may relapse with a drug-resistant strain.

That MDR TB can be a threat to all strata of society was made clear by an epidemic that was finally recognized in a suburban California school in 1993. The source of the outbreak was a 16-year-old immigrant student who had contracted the disease in her native Vietnam.77 She had developed a persistent cough in January 1991, but her doctors had failed to diagnose the cause as tuberculosis until 13 months later. Even then, they did not report the case to the county health department, as required by law, and when the case was reported by the laboratory that had analyzed her sputum, the doctors refused to cooperate with the health department. By the time the county authorities took over her case in 1993, the girl had developed a drug-resistant strain. In accordance with standard public health practice, the health department then began screening all the girl’s contacts for tuberculosis infection. Some 23 percent of the 1263 students given the tuberculin skin test were found to be positive for exposure to the infection. Of those, 13 students had active cases of the drug-resistant strain of the disease. Fortunately, no one died.78
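
The screening figures in this account can be turned into approximate head counts with a few lines of arithmetic. The Python sketch below uses only the numbers given in the text; because the text says "some 23 percent," the count of positive skin tests is an estimate, and the variable names are simply illustrative labels.

```python
students_tested = 1263   # students given the tuberculin skin test (from the text)
positive_share = 0.23    # "some 23 percent" tested positive, so this is approximate
active_cases = 13        # students with active drug-resistant disease (from the text)

# Approximate number of students with a positive skin test.
positive_students = round(students_tested * positive_share)

# Share of active cases among skin-test positives and among all students tested.
active_share_of_positives = active_cases / positive_students
active_share_of_tested = active_cases / students_tested

print(f"Estimated positive skin tests: {positive_students}")
print(f"Active cases among positives: {active_share_of_positives:.1%}")
print(f"Active cases among all tested: {active_share_of_tested:.1%}")
```

The gap between the two groups reflects the distinction drawn earlier between infection and active disease: most of the students who tested positive had been exposed but had not developed an active case at the time of screening.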

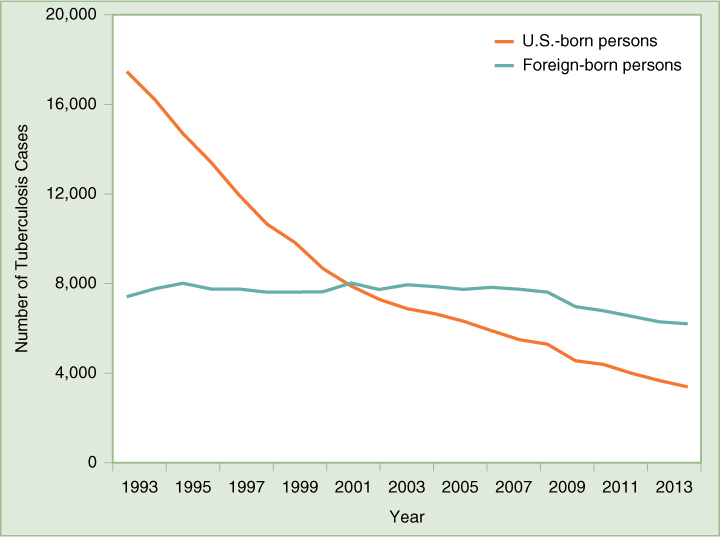

It is clearly in the community interest to ensure that all tuberculosis patients be properly diagnosed and provided a full course of medications, whether or not they can afford to pay, to prevent them from spreading the disease. New York City has proven that, by applying public health measures, it is possible to reverse the trend of increasing MDR TB, a trend that had been worse in that city than anywhere else in the nation. By 1992, the number of tuberculosis cases diagnosed in the city had nearly tripled over the previous 15 years, and 23 percent of new cases were resistant to drugs. The city and state began intensive public health measures, which included screening high-risk populations and providing therapy to everyone diagnosed with active tuberculosis. A program of directly observed therapy (DOT) was instituted for patients who were judged unlikely to take their medications regularly. Outreach workers traveled to patients’ homes, workplaces, street corners, park benches, or wherever necessary to observe that each patient took each dose of his or her medicine. As a result of these measures, the number of new cases of tuberculosis fell in 1993, 1994, and 1995, and the percentage of new cases that were MDR fell by 30 percent in a 2-year period.79 New York’s success has been echoed by national trends, as shown in Figure 10-1, giving hope that concerted public health efforts can eliminate tuberculosis as a serious public health threat in the United States. DOT is recognized all over the world as the most effective approach to dealing with tuberculosis.

As Figure 10-1 shows, the majority of tuberculosis cases reported in the United States are found among foreign-born persons. This reflects the fact that tuberculosis infection is widespread throughout the world, especially in developing countries and in countries with high rates of HIV infection. The prevalence of MDR TB strains is a major concern; the proportion of TB cases that are MDR can reach 20 percent in some countries, especially those of the former Soviet Union.80 In the United States, the proportion of multidrug-resistant cases is only about 1 percent, mainly occurring among foreign-born persons.81 Because of immigration and international travel, the United States will need to continue tuberculosis control programs at home and to actively participate in global efforts to control the disease around the world to avoid future outbreaks in this country.

The CDC in 2007 revised its requirements for overseas medical screening of applicants for immigration to the United States.82 Federal agencies also developed measures to prevent individuals with certain communicable diseases, including active tuberculosis, from traveling on commercial aircraft. Names of these individuals are placed on a Do Not Board list by federal, state, or local public health agencies and distributed to international airlines. A similar list is distributed to border patrol authorities in order to prevent individuals deemed dangerous to the public health from entering the country through a seaport, airport, or land border. These lists are managed by the CDC and the Department of Homeland Security.83