INTRODUCTION

As Mr Wolfman stepped out of the cold into Philadelphia’s bustling 30th Street train station, a billboard near the entryway caught his attention. The blurred image of an athletic bicyclist filled the background while a bold-faced statement proclaimed, “THE WIND IN YOUR FACE IS WORTH PROTON THERAPY.” Similar ads adorned the walls between the ticket counter and the waiting area. One showed a man and woman gleaming over a candle-lit dinner. Another, his favorite, showed an ecstatic pair of octogenarians dancing the night away. “SATURDAY NIGHT JITTERBUG IS WORTH PROTON THERAPY.”

Mr Wolfman’s mind wandered to the recent news special he saw on TV. Not too long ago, just a few miles away, a futuristic $150 million proton therapy center opened. The special said the new facility spanned the length of a football field and contained some of the most sophisticated medical technology ever created. To destroy cancer cells at the microscopic level, they assembled more than 200 tons of superconducting magnets and computer-guided subatomic particle accelerators.

Neither the news special nor the billboards at 30th Street Station mentioned whether all that equipment was truly necessary. They did not mention that each treatment with proton therapy is somewhere between two and six times as expensive as the alternative form of radiation. They did not mention that in many cases there is no evidence that proton beams work better than previously available, less expensive methods.

So Mr Wolfman chuckled to himself. The jitterbugging octogenarians reminded him of his own mother and father. As he stepped off the platform to board his train he marveled, “It’s amazing what modern medicine can do.”

We expect new technologies to be more expensive than their older counterparts. Of course the iPhone 6 is more expensive than the iPhone 5. It is faster. It has a better camera. It performs better. What we do not expect is to pay more money for iPhone 5-level performance year after year. This is because in almost every industry, technology that improves performance renders older technology less expensive. In 1965, Intel co-founder Gordon Moore observed that every 2 years the number of transistors in a computer processor doubles and the cost of computing falls by half.1 This observation, commonly referred to as “Moore’s law,” has since been extended to describe the rate at which technology impacts economic productivity in a broad range of settings.

Healthcare, as an industry, appears to be an exception to Moore’s law. Rather than falling, the costs of healthcare are rapidly rising. Meanwhile, the performance of our healthcare system has improved at a much slower pace. Consider life expectancy as one measure of health system performance. Over the last 50 years, the average US life expectancy has risen by 14%, from 69 to 79 years old. Meanwhile, the fraction of total spending in the United States that is devoted to healthcare has risen from 5% of GDP to 18% of GDP, a 3.6-fold increase (that is, to 360% of its earlier level). Certainly the 10 extra years of life we have gained make us better off today than 50 years ago. But it also seems very possible that we might have achieved the same result for less money. In fact, if healthcare obeyed Moore’s law, we would expect that at current healthcare spending levels we should be living well to the ripe old age of 69 times 3.6, or about 250 years old!
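The back-of-the-envelope arithmetic in this paragraph can be reproduced in a few lines. The following is a minimal sketch that uses only the figures quoted above; the variable names are illustrative, and the extrapolation simply assumes that life expectancy would scale in proportion to the share of GDP spent on healthcare, which is the tongue-in-cheek premise of the comparison rather than a real economic model.

```python
# Figures quoted in the text (approximate, spanning roughly 50 years).
life_expectancy_then = 69   # years
life_expectancy_now = 79    # years
gdp_share_then = 0.05       # healthcare spending as a fraction of GDP
gdp_share_now = 0.18

# Relative gain in life expectancy over the period.
life_gain = (life_expectancy_now - life_expectancy_then) / life_expectancy_then

# How many times larger the healthcare share of GDP has become.
spending_ratio = gdp_share_now / gdp_share_then

# Hypothetical extrapolation: if performance had scaled one-for-one with
# spending, the starting life expectancy would be multiplied by the same ratio.
implied_life_expectancy = life_expectancy_then * spending_ratio

print(f"Life expectancy gain: {life_gain:.0%}")
print(f"Spending share ratio: {spending_ratio:.1f}x")
print(f"Implied life expectancy: {implied_life_expectancy:.0f} years")
```

Under these assumptions the spending share comes out at 3.6 times its starting level, and the implied life expectancy is a little under 250 years.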

There is widespread consensus that our return on investment for new healthcare technologies ought to be better. The question (for which there is no obvious answer) is how much better? Expecting to live beyond age 250 may be asking too much. In healthcare we appear to be more willing to pay for limited performance compared to other industries where there is less at stake. Additionally, for any given medical condition in the modern era we have many different options to choose from and relatively little information available about the value of each option (see Chapters 3 and 4). Even when some value information is available, creating a payment system that rewards value is not straightforward (see Chapter 15). Dartmouth economist Jonathan Skinner explains the limitations of our current payment system bluntly, claiming health insurance is currently designed to “[pay] for any treatment that doesn’t obviously harm the patient, regardless of how effective it is.”2

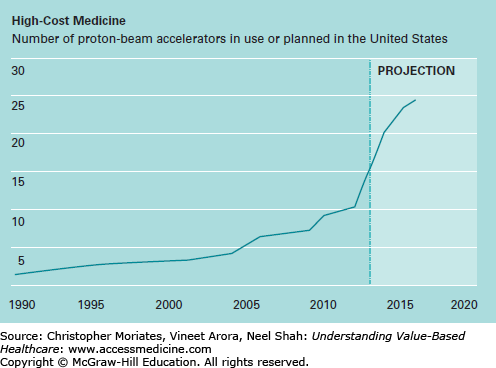

This payment system creates strong commercial incentives to deploy expensive technologies before we really know how well they work. Once a hospital builds a facility like a $150 million proton therapy center, they have every reason to use it as much as possible. Unsurprisingly, hospitals around the United States are “loading up” on proton beam accelerators at an astonishing rate (Figure 8-1). In the last 5 years alone, the number of proton therapy centers in the United States has doubled even though the benefits remain largely unproven.3,4

Figure 8-1

Number of proton-beam accelerators in use or planned in the United States. (Republished with permission of MIT Technology Review. From Skinner J. The costly paradox of health care technology. MIT Tech Rev. September 5, 2013. http://www.technologyreview.com/news/518876/the-costly-paradox-of-health-care-technology/. Permission conveyed through Copyright Clearance Center, Inc.)

Until recently hospitals were also purchasing the DaVinci surgical robot, a technology with similarly unproven benefits, with equal fervor. But then something interesting happened. After being named one of the “fastest growing” companies of 2012 by Fortune magazine, Intuitive Surgical (the maker of DaVinci robots) saw its sales slump significantly during the summer of 2014, largely due to widespread concerns about cost efficiency.5,6 Then more examples emerged of low-value technology getting scuttled in favor of cost-effective alternatives. During the fall of 2014, even as three new proton therapy centers were opening in other parts of the country, Indiana University announced that it would be closing its proton therapy center because insurers were refusing to pay for it.7 Many believe that these signs of wariness may be harbingers of the new era of value-based care. Certainly, as payers, purchasers, and patients continue to demand better care at lower cost, frontline clinicians will be increasingly responsible for thoughtfully evaluating new technologies.

To meet this challenge we need to understand the return on investment of new technology more deeply. We need to know how much of our skyrocketing healthcare spending can be attributed to new technologies, as opposed to rising prices, the growing population, or any other cause. We need to know how to assess whether specific technologies are actually worth it, and have a reliable way of determining what “worth it” even means. In this chapter, we review some of the ways that economists have aimed to address these challenges as well as how clinicians might use these insights to provide our patients with better care.

TECHNOLOGICAL PROGRESS AS A DRIVER OF COST GROWTH

In the early 1960s, before Medicare was even officially launched, a contentious debate broke out over what the nation’s new public health insurance program should pay for and what it should not (Chapter 2). In 1972, after nearly a decade of highly politicized disagreement, Congress passed landmark legislation to extend Medicare coverage to all patients with failing kidneys.8 Around the same time, several new technologies made it possible to significantly extend the lives of these patients. As machines were developed to filter waste out of the bloodstream, the first outpatient dialysis centers opened their doors.9 Soon, transplant surgery to replace dysfunctional kidneys with healthy donor kidneys became increasingly safe. These options were not cheap.

In deciding whether to extend Medicare to patients with failing kidneys, policymakers had to grapple with uncomfortable questions about how to reasonably value human life. The decision largely turned on the compelling testimony of a kidney-failure patient named Shep Glazer who argued that thousands of American citizens would die if Congress did not act immediately to make dialysis affordable.10 Although the law was ultimately passed, there was little consensus on how to address these challenges moving forward and there remains little consensus to this day.11 Nonetheless, the debate itself has made it very clear that medical innovation has a flipside. Richard Rettig, a RAND senior scientist who studied the policy implications of the decision to cover end-stage kidney disease, warned about an “inevitably sharper conflict that looms ahead between two societal goals: moderating the growth of medical expenditures and maintaining a world-class capacity to innovate in medicine.”12

In the late 1970s, two health policy professors from Brandeis University, Stuart Altman and Stanley Wallack, summarized the available evidence and helped popularize the view that technology was the “culprit” behind rising health costs.13 Proving this to the satisfaction of many economists took more research, however. The first task was formally defining what “technological progress” means. The United States Congressional Budget Office (CBO) includes all “changes in clinical practice that enhance the ability of providers to diagnose, treat, or prevent health problems.”14 These advances can take several forms, ranging from novel drugs and devices to new applications of existing technologies. Given the expansiveness of this definition, adding up the effects of each individual change in clinical practice is highly cumbersome. Instead, some economists have aimed to estimate the impact of technology on healthcare costs indirectly, by first adding up other contributing factors and then seeing what is left over.

In 1992, Harvard economist Joseph Newhouse provided one of the most famous examples of this approach.15 In order to isolate the impact of technology alone, he first estimated the contributions of the aging of the population, rising per capita incomes, and changes in the distribution of health insurance. He additionally factored in administrative costs, “defensive medicine” (clinicians utilizing technology to reduce malpractice liability), “physician-induced” demand (clinicians utilizing technology to make more money), and even the costs of caring for the terminally ill during the last year of their lives. Surprisingly, he and others have consistently found that, collectively, changes in these factors over time explain only a fraction of the real growth in healthcare costs (Table 8-1).

Table 8-1

Estimated contributions of selected factors to growth in real healthcare spending per capita, 1940 to 1990 (percent)

| Factor | Smith, Heffler, and Freedland (2000) | Cutler (1995) | Newhouse (1992) |
|---|---|---|---|
| Aging in the population | 2 | 2 | 2a |
| Changes in third-party payment | 10 | 13 | 10b |
| Personal income growth | 11–18 | 5 | <23 |
| Prices in the healthcare sector | 11–22 | 19 | ∗ |
| Administrative costs | 3–10 | 13 | ∗ |
| Defensive medicine and supplier-induced demand | 0 | ∗ | 0 |
| Technology-related changes in medical practice | 38–62 | 49 | >65 |

Instead, Newhouse concluded that the unmeasured factor contributing to cost growth must be “the march of science and the increased capabilities of medicine”—in other words, the introduction of new technology itself! Using this method, Newhouse and other economists have estimated that up to 70% of medical spending growth over the last half-century may be due to cost-increasing advances in medical technology.16
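The residual approach described here amounts to simple subtraction: add up the measured contributions and attribute whatever remains to technology. The sketch below illustrates the idea using the Cutler (1995) column of Table 8-1. The function name `residual_share` is hypothetical, and the sketch assumes that the listed factors are the only measured ones and that contributions sum to 100 percent. Because the table entries are rounded, a residual computed this way need not match the published technology estimate exactly.

```python
# Percent contributions from the Cutler (1995) column of Table 8-1.
# The defensive-medicine row has no numeric entry in that column,
# so it is left out of this illustration.
measured_factors = {
    "Aging of the population": 2,
    "Changes in third-party payment": 13,
    "Personal income growth": 5,
    "Prices in the healthcare sector": 19,
    "Administrative costs": 13,
}


def residual_share(factors, total=100):
    """Return the share of growth not explained by the measured factors."""
    return total - sum(factors.values())


explained = sum(measured_factors.values())
technology_residual = residual_share(measured_factors)

print(f"Explained by measured factors: {explained}%")
print(f"Residual attributed to technology: {technology_residual}%")
```

With these inputs the measured factors account for about half of spending growth, leaving roughly the other half as the residual, which is in line with the technology estimate reported for that study in the table.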

For decades, these cost increases have portended trouble by straining budgets. As a result, healthcare cost growth is seldom seen in a positive light. Then, in the early 2000s, a Harvard economist named David Cutler began upending conventional wisdom. He argued that although there is clearly waste in the system, much of our increased spending on healthcare over time has in fact been worth it.17 While Newhouse primarily focused on estimating the costs of technology, Cutler focused on the benefits.

Consider how we used to manage heart attacks. In 1955 when President Dwight Eisenhower had a heart attack, his physicians recommended top-of-the-line medical care: one month of bed rest.18 Needless to say, this treatment was not very expensive. It was not very effective either. Today treating a heart attack with prolonged bed rest would be considered dangerous and grounds for medical malpractice. This is because since 1955 our treatment options have expanded enormously. Although these options have also made treating heart attacks more expensive, people who have heart attacks in the 21st century are unquestionably better off than those in the mid-20th century.

A heart attack patient today is likely to receive a number of intensive therapies, starting with an array of medicines: β-blockers to reduce the work effort of the heart (introduced in the 1970s), thrombolytics to dissolve any existing blood clots (introduced in the 1970s and 1980s), and drugs such as aspirin and heparin to thin the blood and prevent future clotting (first used for heart attack treatment in the 1980s).19,20 Surgical treatments have also become much more common. Coronary artery bypass—an “open heart” surgery that requires dividing the breast bone—was introduced in the late 1960s. Shortly afterwards, a “minimally invasive” procedure called percutaneous angioplasty made it possible to reopen blocked arteries without a large and painful incision in the chest. Before long, angioplasty would replace bypass surgery in the majority of cases. In the 1990s an additional procedure was added when angioplasty became paired with “bare metal” and then “drug-eluting” stents (wire mesh scaffolding designed to help keep arteries open). Although these stents are very expensive, they are also very effective and by the late 1990s they were used in over 84% of angioplasty cases.21

To compare the costs and benefits of these changes, Cutler and fellow economist Mark McClellan examined Medicare data over a 14-year period.22 In 1984, $3 billion was spent in total on heart attacks annually. By 1998 this number had risen to $5 billion, even though the total number of heart attacks in the United States actually declined due to improvements in blood pressure control, cholesterol management, smoking rates, and other preventive measures. Cutler and McClellan attributed the increased total spending to an increase in the average amount spent per case, which they calculated to be nearly $10,000 after adjusting for inflation.23 They also showed that this difference was mostly driven by the increased use of technology-enabled surgery rather than increased prices. In 1984 nearly 90% of heart attacks were still being managed with medicine only; by 1998, more than half of heart attack patients received surgical treatment.24

Cutler and McClellan then used social security records to determine how length of survival after a heart attack changed over this time period. In 1984 life expectancy after a heart attack was approximately 5 years on average, whereas by 1998 it had risen to 6 years.22 One interpretation of this information is that although we spent $10,000 more per person with a heart attack in 1998, this spending appeared to help them gain one year of life. Compared to many other things we spend money on, this appears to be a remarkably good deal (just a few days in the hospital can easily cost $10,000). Cutler and McClellan conservatively estimated that the benefit to society of that 1 year of gained life is worth $70,000 per heart attack patient (this number is on par with other estimates, as we will discuss in the next section of this chapter).22 At a cost of $10,000 per patient, they concluded that society receives a net benefit of $60,000 per patient we treat for a heart attack.

Dr Cutler and others have similarly examined a variety of other key conditions, including advances in the treatment of hypertension, low-birthweight infants, and breast cancer (Table 8-2). The cost increases of caring for low-birthweight infants are particularly dramatic. Due to advances in neonatal intensive care, spending per patient increased by $40,000 between 1950 and 1990.25 Nonetheless, Cutler and fellow economist Ellen Meara assert that this investment was even more valuable than our investment in heart attacks because it caused infant mortality rates to plummet. The improved survival translated to an average of 12 years of life expectancy gained per patient, worth $240,000 to society ($200,000 net benefit). While most heart attack survivors do not work, all of the life years gained for low-birthweight infants are economically productive. University of Chicago economist David Meltzer has shown that for younger patients in particular, considering economic productivity and other future costs alongside survival can significantly increase the perceived cost-effectiveness of healthcare interventions.26

Table 8-2

Summary of research on the value of medical technology changes

| Condition | Years | Change in Treatment Costs | Change in Outcome | Value of Outcome | Net Benefit |
|---|---|---|---|---|---|
| Heart Attack | 1984–1998 | $10,000 | 1-year increase in life expectancy | $70,000 | $60,000 |
| Low-birthweight infants | 1950–1990 | $40,000 | 12-year increase in life expectancy | $240,000 | $200,000 |
| Breast Cancer | 1985–1996 | $20,000 | 4-month increase in life expectancy | $20,000 | $0 |
| Hypertension | 1959–2000 | $520 | 6-month increase in life expectancy, discounted over an average lifespan | $5,117 | $4,597 |
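The net benefit column in Table 8-2 follows from a single subtraction: the dollar value assigned to the outcome minus the change in treatment costs. The sketch below recomputes that column from the other two. The function name `net_benefit` and the data layout are illustrative assumptions; the dollar figures are taken directly from the table.

```python
# (condition, change in treatment costs, value of outcome), in dollars,
# copied from Table 8-2.
rows = [
    ("Heart attack", 10_000, 70_000),
    ("Low-birthweight infants", 40_000, 240_000),
    ("Breast cancer", 20_000, 20_000),
    ("Hypertension", 520, 5_117),
]


def net_benefit(cost_change, outcome_value):
    """Net benefit per patient: value of the outcome minus the added cost."""
    return outcome_value - cost_change


net_benefits = {
    condition: net_benefit(cost, value) for condition, cost, value in rows
}

for condition, benefit in net_benefits.items():
    print(f"{condition}: ${benefit:,}")
```

A positive result means the estimated value of the health gain exceeds the added spending. A result of zero, as for breast cancer over the period studied, means the two roughly offset each other.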

The type of analysis spearheaded by Cutler and his colleagues, where costs and benefits are quantified, summed, and compared head-to-head, is referred to as “cost-benefit analysis.” While many technologies have netted positive benefits over the long term when viewed this way, it does not necessarily mean that these technologies are always used appropriately. In Chapter 1, we pointed out the gulf between the United States and our peer countries in return on investment in healthcare (see Figure 1-6). Clearly, there is massive waste. How do we reconcile Cutler’s finding that much of the increased healthcare spending over time has been worth it with the notion that the United States could be getting more bang for its buck?

One explanation is that for every single technology that has been net beneficial over time, there are other technologies where the costs outweigh the benefits. On average, technology has yielded great net benefits for treatment of low-birthweight infants after they are born. By contrast, on average, technology has generated great waste in the way many pregnant women are managed before their infants are born. In the early 1970s, a few hospitals introduced fetal telemetry (the ability to monitor the heartbeats of babies in utero in real time) in order to better predict which babies were distressed and needed to be quickly delivered by C-section. Within a few years, this technology became the standard of care and was used by nearly every hospital in the country, despite no evidence that newborn babies were better off.27 In fact, the only thing fetal telemetry appeared to do reliably was markedly increase the national C-section rate, from just 5% of births in the early 1970s to 32% of births in 2014.28 Despite a lack of any apparent benefits, fetal telemetry continues in wide use today and is a significant contributor to the $5 billion price tag of avoidable C-sections.29

A second, more nuanced, explanation to reconcile the net benefits of technology with health system waste is that although many technologies are worth it some of the time, few technologies are worth it all the time. Take coronary stents for example. Cutler and McClellan showed that, on average, advances in cardiac care, particularly the increased use of stents over the last 20 years, have yielded benefits that far outweigh the increased costs. Still, to see these benefits we have to focus on improvements in care to the average patient over an extended time horizon. By contrast, studies of geographic variation in care (see Chapter 7) aim to determine the value of care for the marginal patient during a fixed snapshot in time (by “marginal patient” we are referring to a nonaverage patient for whom the technology is wasteful because they are different from the average patient in an important way). Washington University cardiologist Dr Amit Amin and his colleagues found that between 2004 and 2010 the use of drug-eluting stents varied between 2% and 100% among physicians.30 Incredibly, this tremendous variation was only modestly correlated with patient risk. Dr Amin’s study and other examinations of geographic variation in healthcare support the estimate that much of healthcare spending is unnecessary on the margin, even while increases in spending on average may be worthwhile.
