Translating Evidence into Medical Practice

Quality of Care 15

HEALTH SCENARIO

Mrs. E., a 69-year-old, Spanish-only-speaking Hispanic woman with a history of congestive heart failure (CHF), is admitted to Hospital A complaining of shortness of breath and difficulty breathing when lying down. During her stay, she is not evaluated for left ventricular systolic dysfunction (LVSD); as a result, she does not receive a prescription for an angiotensin-converting enzyme inhibitor (ACEI) or angiotensin receptor blocker (ARB) for LVSD despite having no contraindications. She receives postdischarge instructions verbally and in writing but only in English because Hospital A does not have onsite translators or a translation service. Two days after discharge, she is readmitted through the emergency department (ED) with an ejection fraction of 15% and severe pulmonary edema. Despite treatment, she dies.

Hospital A starts looking closely at its delivery of heart failure care when it receives two Medicare reimbursement penalties. The first is the maximum 1% penalty under the Medicare Readmissions Reduction Program, based on higher-than-expected 30-day readmission rates in the Medicare patients it treats for heart failure, pneumonia, and acute myocardial infarction (AMI). The second is a 1.1% penalty under Medicare’s Hospital Value-Based Purchasing Program, based on its below-average compliance with publicly reported, evidence-based practices in heart failure, pneumonia, AMI, and surgical care and on patients’ experience of care in fiscal year 2013. Hospital A finds that there are significant opportunities for improvement, and it seeks to address these issues to improve its patients’ experience and outcomes and to avoid further financial penalties.

CLINICAL BACKGROUND

More than 5 million adults in the United States have heart failure, and more than 500,000 new cases are diagnosed each year. Approximately 275,000 deaths and more than 1 million hospitalizations are attributable to heart failure annually in the United States; it is the most common discharge diagnosis in persons older than 65 years, and nearly 25% of patients require rehospitalization within 30 days. The total cost of care for heart failure in 2013 was estimated at $32 billion; this number is expected to rise to $72 billion by 2020.

Clinical practice guidelines strongly recommend the evidence-based practices of left ventricular function assessment, ACEI and ARB prescription for left ventricular systolic dysfunction, smoking cessation counseling, and discharge instructions as part of standard care for heart failure, yet they remain underutilized: fewer than 60% of heart failure patients receive all recommended evidence-based diagnostic and therapeutic measures for which they are eligible.

The high prevalence and incidence of heart failure, together with its high burdens of mortality and morbidity and the availability of easily measurable, evidence-based treatments that reduce these burdens, make it a high-priority target for quality improvement. It is one of the four conditions and categories for which Medicare has required hospitals to publicly report compliance with certain evidence-based recommendations since 2005 and performance on 30-day readmission and mortality rates since 2009. Most recently, Medicare has linked hospital reimbursement to these performance measures through its Readmissions Reduction Program and its Hospital Value-Based Purchasing (VBP) Program. Heart failure care also is a focus of the National Quality Strategy, falling within the priority of “promoting the most effective prevention and treatment practices for the leading causes of mortality, starting with cardiovascular disease.”

EPIDEMIOLOGY AND QUALITY OF CARE

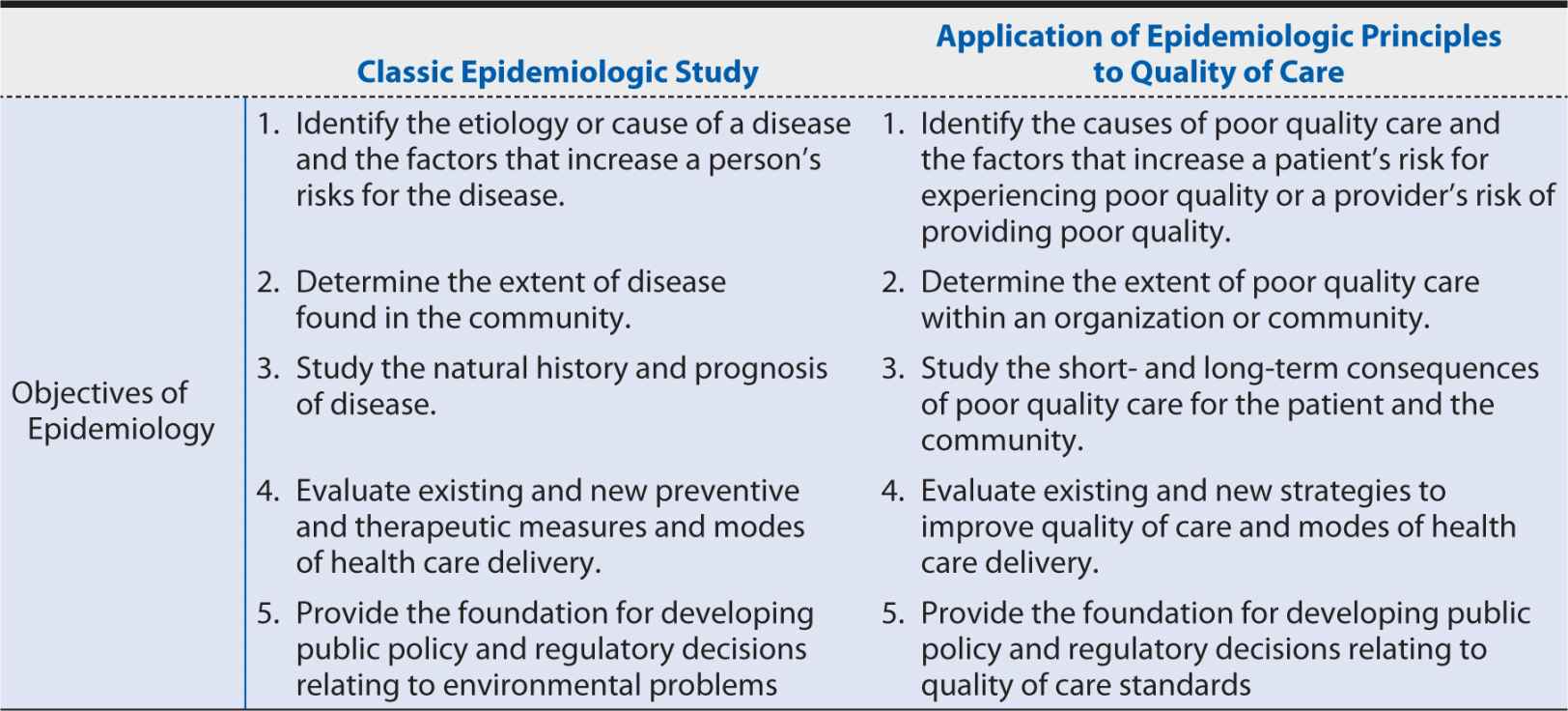

Although the public tends to associate “epidemiology” with investigations of dramatic outbreaks of contagious diseases, its principles have many more applications vital to day-to-day health care delivery. Prime among these are the measurement and improvement of health care quality. In such applications of epidemiology, we adjust the classic study of disease etiology (examining the possible relationship between a suspected cause and an adverse health effect) to focus on the provision of care as the independent or exposure variable and the reduction in adverse health effects as the dependent or outcome variable, taking into account environmental and other factors that may influence that relationship (Table 15-1).

Table 15-1. Objectives of epidemiology as applied to classic studies and to the measurement and improvement of quality of care.

WHAT IS “QUALITY OF CARE?”

“Quality of care” is a phrase that has become ubiquitous in the United States’ health care system in the time that has passed since the landmark year of 1998, when reports detailing serious quality-of-care concerns were released by the Institute of Medicine (IOM), the Advisory Commission on Consumer Protection and Quality in the Health Care Industry, and the RAND Corporation. The revelations these reports contained about the harms suffered through overutilization of some health care services, the underutilization of others, errors of commission and omission, and inequitable access to needed health care resources placed a national spotlight on “quality of care.” But what is “quality of care?” And how do we know when high-quality care has been provided? How can we tell which hospitals or physicians are the “best?” There are no easy answers to these questions, and “quality” will always remain somewhat subjective. However, significant strides have been made toward consensus on what “quality of care” should encompass and on essential attributes of measures used to document and compare it.

In the words of Robert Pirsig in Zen and the Art of Motorcycle Maintenance, “…even though Quality cannot be defined, you know what Quality is!” and it has been demonstrated and accepted that many aspects of quality of care are subject to measurement. Such measurement depends, however, on the perspective from which quality is examined and on the nature and extent of the care being examined. For example, patients choosing a health care provider or plan tend to view quality in terms of access, cost, choice of providers, the time the physician spends with them, and the physician’s qualifications. In contrast, physicians are likely to view quality of care in terms of the specific services they provide to the patients they treat—the benefits gained and the harms caused. Most definitions of quality of care do, however, agree on two essential components:

1. High technical quality of care, meaning, broadly, the provision of care for which the benefits outweigh the risks by a sufficient margin to make the risks worthwhile; and

2. Art of care, which refers to patients’ perception that they were treated in a humane and culturally appropriate manner and were invited to participate fully in their health decisions

Probably the most widely accepted definition of quality in the United States is the framework outlined in the IOM’s 2001 report Crossing the Quality Chasm, which states that “[h]ealth care should be:

• Safe: Avoiding injuries to patients from the care that is intended to help them

• Effective: Providing services based on scientific knowledge to all who could benefit and refraining from providing services to those not likely to benefit (avoiding underuse and overuse, respectively)

• Patient centered: Providing care that is respectful and responsive to individual patient preferences, needs, and values and ensuring that patient values guide all clinical decisions

• Timely: Reducing waits and sometimes harmful delays for both those who receive and those who give care

• Efficient: Avoiding waste, including waste of time, money, equipment, supplies, ideas, and energy

• Equitable: Providing care that does not vary in quality because of personal characteristics such as gender, race, ethnicity, geographic location, and socioeconomic status”

Internationally, the World Health Organization uses a definition based on six similar dimensions but with language that more explicitly invokes both population health and individual patient care. This “population health” aspect has been added to the United States’ definition through the Triple Aim for health care improvement, which has been adopted by the Department of Health and Human Services (DHHS) in the National Quality Strategy to achieve:

1. Better care: Improve the overall quality by making health care more patient-centered, reliable, accessible, and safe.

2. Healthy people and healthy communities: Improve the health of the U.S. population by supporting proven interventions to address behavioral, social, and environmental determinants of health in addition to delivering higher quality care.

3. Affordable care: Reduce the cost of quality health care for individuals, families, employers, and government.

MEASURING HEALTH CARE QUALITY

Background

For most of the 20th century, there was a widespread belief that the individual training and skills of physicians were the most important determinants of health care quality.18 As a result, policies related to ensuring high-quality care tended to focus on standards for medical education and continuing education for practicing physicians. Routine measurement and reporting of quality indicators started gaining prominence toward the end of the 20th century as competition in the health care marketplace increased (particularly in the form of managed care structures that use financial incentives to influence physician and patient behavior); geographic variation in use of medical services was documented, raising questions regarding appropriate use; clinical and administrative databases were developed; and computing capability increased.

Public reporting of quality measures in the United States began “accidentally” in 1987 when the predecessor of today’s Centers for Medicare and Medicaid Services (CMS) produced a report of hospital-level mortality statistics. This report, which used only crude risk adjustment, was intended for internal use only but was obtained by members of the press under the Freedom of Information Act and widely released. Although this undoubtedly caused short-term furor, long-term benefits were realized in the form of demand for more fairly adjusted, quantitative quality reports in both the public and private sectors, which led to a proliferation of quality report cards for hospitals and health plans at national, state, and local levels. But even while applauding this increased transparency regarding quality of care, one must keep in mind not only the weaknesses of quality report cards in general (e.g., that they can capture only a few aspects of care and are hard to adjust for underlying differences in patient populations) but also that they are not all created equal. It is important to look closely at the kinds of measures used, the data sources from which these measures were collected, and the extent to which relevant differences in patient populations have been accounted for.

Structure, Process, and Outcome Measures

The dominant framework for quality of care evaluations is the “structure, process, and outcome” model developed by Avedis Donabedian in the 1960s. This model recognizes the fundamental interrelationships among these aspects of care: the necessary structures must be in place to facilitate delivery of the appropriate processes of care, which in turn achieve or increase the probability of the desired outcomes.

Donabedian defines structure as the relatively stable characteristics of providers of care, the tools and resources at their disposal, and the physical and organizational settings in which care is provided. As such, it encompasses factors such as the number, distribution, and qualifications of professionals; the number, size, equipment, and geographic distribution of hospitals and other care facilities; staff organization; internal structures for the review of clinical work; and the reimbursement strategies through which providers are paid. Structure measures are typically the easiest to collect data on—personnel and equipment, for example, can be counted in a straightforward manner—making it unsurprising that these are the initial foci of many quality evaluation programs. However, structure measures can indicate only general tendencies toward good- or poor-quality care. Meaningful evaluations of quality need to include measures more directly related to the care actually provided—in other words process or outcomes of care.

A process is an activity occurring within or between practitioners and patients, as determined through either direct observation or review of recorded data. The basis for using process measures to evaluate the quality of care provided is the scientific evidence of the relationship between the process and the consequences to the health or welfare of individuals or the community, taking into account the value placed on health or wellness by the individual or community in question. To use the terminology that has entered the health care lexicon in recent decades, process measures look at the practice of evidence-based medicine, or “the integration of best research evidence with clinical expertise and patient values.” The strengths of process measures include the ability to specify criteria for and standards of good-quality care to establish the range of acceptable practice, the incentive they provide to document the care delivered, and the ability to attribute discrete clinical decisions to the relevant providers. They also are easy to interpret, are sensitive to variations in care, and provide a clear indication of what remedial action is needed in the case of poor performance. Weaknesses include the fact that they consider only whether a process was performed, not how well it was carried out, and may even give credit for wrongly delivered processes, such as the wrong dosage of a recommended drug or use in patients at high risk for adverse reactions. Furthermore, their emphasis on technical interventions may encourage high-cost care, and the priority they place on a narrow aspect of care may lead to loss of quality in other areas.
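To make the arithmetic behind a process measure concrete, the following is a minimal sketch, not an official measure specification. The record layout, the measure names, and the "all-or-none" composite (the share of patients receiving every process for which they are eligible) are illustrative assumptions rather than anything defined in this chapter.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    """One patient-measure pair (hypothetical record layout)."""
    patient_id: str
    measure: str
    eligible: bool   # False when a contraindication or exclusion applies
    delivered: bool  # True when the recommended process was documented


def compliance_by_measure(records):
    """Share of eligible patients who received each process of care."""
    counts = {}
    for r in records:
        if not r.eligible:
            continue  # ineligible patients are left out of the denominator
        num, den = counts.get(r.measure, (0, 0))
        counts[r.measure] = (num + int(r.delivered), den + 1)
    return {m: num / den for m, (num, den) in counts.items()}


def all_or_none_rate(records):
    """Share of patients who received every process they were eligible for."""
    per_patient = {}
    for r in records:
        if r.eligible:
            so_far = per_patient.get(r.patient_id, True)
            per_patient[r.patient_id] = so_far and r.delivered
    if not per_patient:
        return None  # no eligible patients, so the rate is undefined
    return sum(per_patient.values()) / len(per_patient)


# Made-up example data for four heart failure patients.
records = [
    Opportunity("p1", "lv_function_assessment", True, True),
    Opportunity("p1", "acei_or_arb", True, True),
    Opportunity("p2", "lv_function_assessment", True, False),
    Opportunity("p2", "acei_or_arb", False, False),
    Opportunity("p3", "lv_function_assessment", True, True),
    Opportunity("p3", "acei_or_arb", True, False),
    Opportunity("p4", "lv_function_assessment", True, True),
    Opportunity("p4", "acei_or_arb", True, True),
]
rates = compliance_by_measure(records)
composite = all_or_none_rate(records)
print(rates, composite)
```

Note that the sketch records only whether a process was delivered. It cannot tell whether the drug was given at the right dose, which is exactly the weakness of process measures described above.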

Outcome measures reflect changes in the patient’s current or future health status (including psychosocial functioning and patient attitudes and behavior) that can be attributed to the antecedent care provided. In many ways, outcome measures provide the best evaluation of the quality of care as they capture the goals of the care being provided, and they reflect all aspects of the care provided. This may be particularly important when multiple providers and their teamwork, culture, and leadership, as well as the patients themselves, contribute to the care. However, the duration, timing, or extent of measurement of outcomes that indicate good-quality care can be hard to specify, and data can be difficult and expensive to obtain. Furthermore, outcomes can be substantially affected by factors outside the control of the provider who is being evaluated (e.g., patient characteristics), so that careful risk adjustment, which typically requires detailed clinical data and development of risk models by statisticians and epidemiologists, is required to avoid penalizing providers who treat higher risk patients. Currently, there is no standardized approach for risk adjusting outcome measures, and varying approaches may result in substantial differences in the measured performance. Furthermore, even after risk adjustment, outcome measures may be unstable over time and unable to provide reliable comparisons for small-volume providers or where the outcome of interest is a rare event. For example, in the 1990s, the Veterans Affairs (VA) cardiac surgery program implemented semiannual internal reports to identify hospitals with high or low risk-adjusted operative mortality rates among patients undergoing coronary artery bypass grafting (CABG); however, to detect a risk-adjusted mortality rate twice the VA average with 80% statistical power and at a significance level of 5%, a minimum 6-month hospital volume of 185 CABG surgeries was required. That volume was reached by only one VA hospital, once, in 5.5 years.
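The volume problem in the CABG example can be explored with a standard sample-size calculation. The sketch below uses the textbook normal-approximation formula for a one-sample test of a proportion; it is not necessarily the method the VA program used, it ignores risk adjustment, and the baseline mortality rates in the loop are hypothetical values chosen only for illustration.

```python
import math
from statistics import NormalDist


def cases_needed(p0, ratio=2.0, alpha=0.05, power=0.80, two_sided=True):
    """Approximate number of cases needed to detect a hospital event rate of
    ratio * p0 against a benchmark rate p0 (one-sample test of a proportion,
    normal approximation)."""
    p1 = ratio * p0
    if not (0 < p0 < 1 and 0 < p1 < 1):
        raise ValueError("p0 and ratio * p0 must both lie strictly between 0 and 1")
    tail = alpha / 2 if two_sided else alpha
    z_alpha = NormalDist().inv_cdf(1 - tail)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)      # quantile matching the target power
    numerator = (z_alpha * math.sqrt(p0 * (1 - p0))
                 + z_beta * math.sqrt(p1 * (1 - p1)))
    return math.ceil((numerator / (p1 - p0)) ** 2)


# Hypothetical benchmark mortality rates, not figures from the VA program.
for baseline in (0.02, 0.03, 0.05):
    print(f"benchmark {baseline:.0%}: about {cases_needed(baseline)} cases")
```

The rarer the outcome, the more cases are needed to detect even a doubling of the rate, which is why outcome measures often cannot distinguish small-volume providers from the average.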

As should be clear from these relative strengths and weaknesses of structure, process, and outcome measures, their usefulness varies according to the purpose of the quality evaluation. That purpose must be established before any attempt at evaluation is initiated to ensure that the correct sort of measure, or mix thereof, is selected. Generally, the broader the perspective of the evaluation, the more relevant outcome measures are, since they reflect the interplay of a wide variety of influential factors. For narrower perspectives (for example, care provided by an individual physician), process measures may prove more useful because they are less subject to causes of variation outside the provider’s control. Structure measures can be very useful when one of the goals of the evaluation is to elucidate possible reasons for variations in quality between countries, regions, or providers because they provide insight into the resources available to providers and patients and the incentives to give and seek out certain kinds of care.

ATTRIBUTES OF QUALITY MEASURES

Measure Development

There is no formal consensus on how health care quality measures should be developed, although there are general consistencies among the methods applied. The first step in measure development is evaluating the need for quality indicators or measures based on the perspectives of all relevant stakeholders (e.g., patients, physicians, hospital leaders, payers, and policymakers). After this, the need for measure development should be prioritized according to (1) the incidence or prevalence of the condition and the health burden on the individual; (2) evidence, in the form of variable or substandard care, of an opportunity for quality improvement; (3) evidence that quality improvement will improve patient outcomes or population health; and (4) evidence that relevant quality measures do not already exist or are inadequate. Other relevant factors include the significance of resources consumed by and the cost effectiveness of treatments and procedures, variations in use, and controversies around use.

After an area has been identified as a measure development priority, key steps include:

1. Specifying a clear goal for the measurement

2. Using methodologies to incorporate research evidence, clinical expertise, and patient perspectives

3. Considering the contextual factors and logistics of implementing measurement

Included within these steps might be such requirements as that the quality measure be based on established clinical practice guidelines or that it undergo pilot testing to ensure that data collection and analysis are feasible and that the reporting meets the needs of the specified goal.

Broadly speaking, there are two approaches through which these steps are applied to the development of measures: the deductive approach (going from concept to data) and the inductive approach (going from data to concept). The former is more frequently used, with quality indicators or measures being based on recognized quality of care concepts and derived directly from existing scientific evidence or expert opinion. Although this deductive approach can help identify gaps in existing measurement systems, it can be limited by the lack of existing data sources through which the measure can be implemented and may focus efforts on quality of care issues that, although conceptually important, are not the ones most in need of improvement.

The less commonly used inductive approach starts with identifying existing data sources, evaluating their data elements, and then querying the data to identify variations from which quality indicators and measures can be developed. Strengths of this approach include the use of existing data and the ability to look at local variation to identify priorities and feasibilities, which may make providers more willing to accept measures to explore and track that variation. A third option is to combine the deductive and inductive approaches, as advocated by the Agency for Healthcare Research and Quality (AHRQ); this enables both the current scientific evidence and the availability and variability of existing data to be taken into account during the development process, supported by the engagement of relevant stakeholders and end users to ensure the measures developed are both relevant and meaningful.

In the United States, measures endorsed by the National Quality Forum (NQF) are considered the gold standard for health care quality measurement, based on the rigorous, consensus-based process through which they are evaluated. The NQF is a nonprofit organization focused on the evaluation and endorsement of standardized performance measurement, with members drawn from patients, health plans, health professionals, health care provider organizations, public health agencies, medical suppliers/industry, purchasers, and those involved in quality measurement, research, and improvement. The endorsement process is designed to obtain input from and to consider the interests of all these stakeholders, as well as the scientific evidence supporting the rationale for the proposed quality measure.

Evaluation of Quality Measures

Essential Attributes of Quality Measures

At the most basic level, quality measures need to be:

• Important—the target audience(s) will find the information useful

• Scientifically sound—the measure will produce consistent and credible results

• Feasible—the measure can be implemented in the relevant health care context

• Usable—the target audience(s) can understand the results and apply them to decision-making processes

Whether one is involved in the measure development process, seeking relevant measures for quality reporting and improvement purposes, or interpreting reported performance on a quality measure, it is important to assess the essential attributes of the measure. These should be familiar from those discussed for variables used in the classical disease etiology applications of epidemiologic methods:

• Reliability: In the context of quality measurement, reliability can refer either to consistency of performance scores in repeated measurements or to stability of performance scores over time. Reliability can be assessed in multiple ways. Providers (whether they are individual physicians or hospitals) can be classified according to their performance on the measure (or measure set) and the distribution of providers compared across measurement periods. Alternatively, taking the providers individually, one can examine the correlation between successive measurement periods on a particular measure and on the overall proportion of measures satisfied. Reliability can be affected by both variation in the provider’s characteristics over time (e.g., seasonal variation in volume of patients admitted for a particular condition) and interobserver variation, whereby individuals collecting data relevant to the measure interpret identical data differently. These sources of variation need to be considered and accounted for in defining the measure and the data collection and reporting processes used to implement it.

• Validity: Three types of validity can be defined. First, criterion validity refers to how well the quality measure agrees with a reference standard measurement. When reference standard data can reasonably be obtained, this can be assessed by examining the agreement between the quality measure data and the reference standard data in terms of sensitivity and specificity and by calculating receiver operating characteristic (ROC) curves. For example, for quality measures based on hospital administrative data, patient charts could be reviewed for a subset of patients to obtain “reference standard” data for comparison. Another aspect of validity that is important for quality measures is construct validity, which refers to how well the measure represents the aspect of quality it is intended to target and relates to the strength of the evidence linking the two. For example, a hospital’s incidence rate of central line-associated infections has good construct validity as a measure of patient safety because there is strong evidence showing that the majority of these infections can be avoided if preventive actions are consistently applied in a timely manner. Finally, a quality measure needs face validity, which refers to the extent to which it is perceived to address the concept it purports to measure. This is important in quality measurement in getting the relevant providers to “buy in” to the importance of improving performance on the measure to improve the care provided to their patients. Although face validity does, to a large degree, overlap with construct validity, it is not necessarily implied by it. Steps may have to be taken to educate providers on the evidence demonstrating construct validity to establish face validity or to show that the evidence applies in the local context within which they provide care.

• Bias: Bias is a systematic error in the design, conduct, or analysis of a study that leads to a mistaken estimate of an exposure’s effect on the outcome of interest. There are multiple types and sources of bias that are relevant to quality measures that should be considered in developing, choosing, and interpreting performance measures:

Selection bias occurs when the cases with the condition or procedure of interest that can be identified from the data source do not represent the entire population of patients with that condition or procedure. As a result, the measured performance will not reflect the true rate at which the quality measure occurs in the relevant population. For example, if one were examining the quality of care provided to patients undergoing cholecystectomy and considered only inpatient data sources, the results would likely be subject to selection bias because hospitals admit all patients requiring open cholecystectomy but only some of those undergoing laparoscopic cholecystectomy (the less complex, lower risk cases are treated in outpatient settings). Quality measures may also be subject to selection bias when there is inadequate or variable coding of key diagnoses, interfering with consistent identification of relevant cases.

Information bias occurs when the means of obtaining information about the subjects is inadequate, causing some of the information gathered to be incorrect. Sources of information bias relevant to quality measure data collection include the way in which data are abstracted from medical records or in how interviewers or surveys pose questions; surveillance bias (in which providers actively looking for particular events, e.g., venous thromboembolism, have better detection procedures in place and thus appear to have higher rates than providers investing less effort in or with fewer resources available for detection programs); and, particularly relevant to survey-based measures, recall bias (in which, for example, an individual who has an adverse outcome is more likely to recall a potentially relevant exposure than an individual who did not have such an outcome) and reporting bias (in which an individual is reluctant to report an exposure or an outcome because of his or her beliefs, attitudes, or perceptions). Hospital quality measures that rely on hospital discharge data sets may suffer from information bias through their lack of data on postdischarge outcomes. For example, when looking at conditions in which the 30-day mortality rate (determined from death certificates) substantially exceeds the in-hospital mortality rate, hospitals with shorter lengths of stay may appear to perform better if only in-hospital mortality data are considered because the period of observation is shorter for them. Information bias also encompasses misclassification bias, through which individuals who are not members of the target population (e.g., because of a contraindication to the recommended process of care) are included in the measurement or, conversely, individuals who should be included in the measurement are classified as falling outside the target population.

Confounding bias occurs when patient characteristics, such as disease severity, comorbidities, functional status, or access to care, substantially affect performance on a measure and vary systematically across providers or areas. For example, hospitals treating patients across large geographic areas (national referral centers) may show artificially low risk-adjusted mortality rates because of the confounding effect of illness severity factors associated with referral selection and mortality. This produces lower-than-expected mortality rates in the subgroup of patients referred to the hospital over a distance that biases the hospital’s overall rate downward if the risk-adjustment model does not adequately capture this patient characteristic. To the extent that confounders can be identified from the data sources available, risk adjustment can improve performance of the quality measure.
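A short sketch may help show why case mix confounds crude comparisons and what risk adjustment aims to correct. It uses indirect standardization (an observed-to-expected ratio), which is one common approach and is chosen here for illustration; the text does not specify a method. The severity strata, reference rates, and patient counts are all hypothetical.

```python
# Hypothetical reference mortality rates by severity stratum.
REFERENCE_RATES = {"low": 0.02, "high": 0.10}

# Hypothetical hospitals: stratum -> (patients, deaths).
community_hospital = {"low": (800, 16), "high": (200, 20)}
referral_center = {"low": (200, 4), "high": (800, 80)}

def crude_rate(cases: dict) -> float:
    """Unadjusted mortality: total deaths divided by total patients."""
    patients = sum(n for n, _ in cases.values())
    deaths = sum(d for _, d in cases.values())
    return deaths / patients

def observed_to_expected(cases: dict) -> float:
    """Observed deaths divided by deaths expected from the reference rates."""
    observed = sum(d for _, d in cases.values())
    expected = sum(n * REFERENCE_RATES[s] for s, (n, _) in cases.items())
    return observed / expected

for name, cases in [("Community", community_hospital),
                    ("Referral", referral_center)]:
    print(f"{name}: crude rate {crude_rate(cases):.1%}, "
          f"O/E ratio {observed_to_expected(cases):.2f}")
```

In this constructed example both hospitals perform exactly at the reference rate within each stratum, so the crude rates differ only because of case mix. If the adjustment model omitted a characteristic that truly affects mortality, as in the referral example above, the expected count would be wrong and the ratio would be biased.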

• Case-mix and risk adjustment: Differences in case mix make direct performance comparisons among providers or over time challenging. Case-mix adjustment can be controversial and is complicated in terms of both the analysis and the implementation of data collection and reporting. However, when using outcome measures, risk adjustment for patient and environmental factors outside the provider’s control (e.g., severity of illness or comorbidities, patient access to resources) is essential to fair performance comparisons. Furthermore, building clinically appropriate exceptions into specifications for both outcome and process measures (e.g., excluding patients admitted for comfort care only from an in-hospital mortality measure) is necessary both for the validity of the measures and for clinician acceptance of the measures.

• Currency: Health care delivery is dynamic, and measures of quality need to be relevant to the contemporary context. As such, measures cannot simply be developed and evaluated once and then implemented in perpetuity. Studies show that more than half of clinical practice guidelines are outdated within 6 years of adoption and that systematic reviews of clinical effectiveness evidence can require updating as frequently as every 2 years. Quality measures require updates to remain current with advances in medical technology and clinical practice and to replace measures that have “topped out” (i.e., almost all providers routinely achieve near optimal performance) with measures reflecting new priorities.

Finally, an attribute of any quality measure that must be considered is its potential for manipulation and the perverse incentives its use may inadvertently create to improve measured performance without improving the quality of care actually provided. This might include “cherry picking” easy cases, encompassing both the refusal to treat complex, high-risk patients who might nonetheless benefit and treating less severe patients with dubious indications to inflate volumes and improve apparent performance; “teaching to the test,” whereby the narrow focus on the particular area of care being measured leads to the neglect of the broader aspects of quality; and “upcoding” of severity or comorbidities used in risk adjustment to create an overadjustment and favorably influence the findings.

Data Sources

The purpose underlying a specific instance of quality measurement and the approach adopted to achieve it frequently dictate the type of data that needs to be collected. Conversely, given the limited resources typically available for quality measurement and improvement, the data that can be obtained at a feasible cost and within a reasonable timeframe need to be considered. The potential information sources run a broad gamut of data collection methods, but certain common sources and methods recur frequently in quality measurement.

Clinical records: Patient medical records are the source of information for most studies of medical care processes, for disease- or procedure-specific registries, and for quality improvement or monitoring initiatives in both ambulatory and inpatient settings. In the sense that they should contain detailed information about the patient’s clinical condition as well as about the clinical actions taken, they are an excellent source of data for assessing the quality of care provided. However, they also have several practical drawbacks. First, the time and effort required to perform chart abstraction of traditional paper records make this an expensive form of data collection, severely limiting the size of the sample of providers or patients whose care is evaluated and making real-time performance measurement unfeasible. Second, it assumes complete and accurate documentation of the patient’s condition and the actions taken, an assumption that the research literature does not bear out. Third, when data collection requires interpretation of the clinical information (e.g., determining whether an adverse event has occurred), there can be substantial disagreement between reviewers (i.e., poor interrater reliability). Vague specification of variables and poorly designed abstraction tools can also compromise data quality.

The growing use of electronic health records (EHRs) sparked the hope—and perception—that clinical record-based quality of care data would become readily obtainable and of better quality. However, electronic quality measure reports show wide variation in accuracy because of processes of care being recorded in free text notes or scanned documents, where they are “invisible” to the automated reporting algorithm; different test results showing as “most recent” to the reporting algorithm versus a human reviewer; and poor capture of valid reasons for not providing recommended care. Between 15% and 81% of the “quality failures” identified in EHR-based reports satisfy the performance measures or exclusion criteria on manual review.

Another challenge to wide-scale use of EHR reporting is data incompatibility between sites and EHR systems because of differences in meaning and structure of data elements and the development of local glossaries for coded information; local documentation practices and data extraction methods also impact data quality and completeness, so one cannot assume comparability just because two sites use the same EHR product or belong to the same parent organization.

Direct observation: An alternative to clinical records is direct observation by a well-qualified observer. Although this preserves the benefit of providing detailed clinical information and avoids the reliance on the clinician’s documentation of actions taken during a particular encounter, it adds to the expense and time required for data collection, does not solve the problem of data completeness with regard to information about the patient the clinician possesses from previous encounters, and introduces the additional complication of being likely to alter the clinician’s behavior (the Hawthorne or observer effect, in which study subjects alter their behavior because they know that they are being monitored). Additionally, although direct observation may be a reasonable means of collecting data on process and structure measures, there are many outcome measures of interest in health care quality (e.g., 30-day postdischarge readmission) that occur at unknown and unpredictable times and places and so will likely be missed.

Administrative data: Routine collection of data for insurance claims and enrollment makes administrative databases readily available, inexpensive sources of quality measure data. This enables quality measurements to be done on large samples or even on all patients with the relevant condition treated by the provider during the measurement period and at more frequent intervals. Administrative data are frequently used for public reporting (e.g., CMS Hospital Compare measures for AMI, pneumonia, and heart failure) and value-based purchasing programs, for health care organizations’ internal quality initiatives, and for studies evaluating the effectiveness of such initiatives. Because administrative databases and collection processes are designed primarily to support operations such as billing and reimbursement, however, they generally lack the clinical detail needed to determine whether a particular clinical action was necessary or appropriate; they may also be vulnerable to “upcoding” of the severity or complexity of a patient’s illness or procedure when reimbursement is tied to these factors or underreporting of adverse events for which providers are not reimbursed.

In the United States, administrative data are a rich source for process measures because of the way health care charges are constructed. In contrast, the outcomes data routinely available tend to be limited to hospital readmissions or ED visits and in-hospital mortality rates. Postdischarge mortality data can sometimes be obtained through the National Death Index, CMS, or state vital statistics offices, but these sources typically have substantial time delays, apply only to selected populations (e.g., Medicare patients), or are available only for limited purposes (e.g., research). The AHRQ has also developed a set of tools (patient safety indicators) to identify complications and adverse events from hospital administrative data, although these show variable sensitivity and specificity for identifying the events they target. Use of administrative data to risk adjust outcomes is also problematic: failure to distinguish between preexisting conditions and complications that occur during the episode of care makes it difficult to assess the baseline risk factors.

Survey data: Survey data typically are used for quality measures related to patients’ experiences of care, but surveys of clinicians or hospital administrators may also be used to collect data on frequency of use of certain treatments and procedures (process measures) or the availability of specific resources (structural measures). Strengths include the opportunity to elicit information from perspectives not typically captured in the routine delivery or administration of health care—specifically, those of patients, nurses, and consumers who make choices among health care plans or providers—and the variety of media through which they can be administered (in person or by phone, mail, or email). With the growing emphasis on patient-centered care, such data are becoming increasingly important to health care providers. Disadvantages to survey data include the expense involved in collecting them and the typically low response rates and associated nonresponse bias.

The most widely used patient surveys are the Consumer Assessment of Healthcare Providers and Systems (CAHPS) surveys, which are developed and maintained by the AHRQ. These surveys focus on aspects of quality of which patients are the best judges, such as the communication skills of providers and ease of access to health care services, and cover care provided in hospitals, nursing homes, home health care, dialysis facilities, and ambulatory care physician offices. They are used by commercial insurers, Medicaid, State Children’s Health Insurance Program, and Medicare plans, and items from the Hospital CAHPS (HCAHPS) survey are among the quality measures that are reported publicly for hospitals participating in Medicare and used for the Medicare Hospital VBP program (see later discussion).

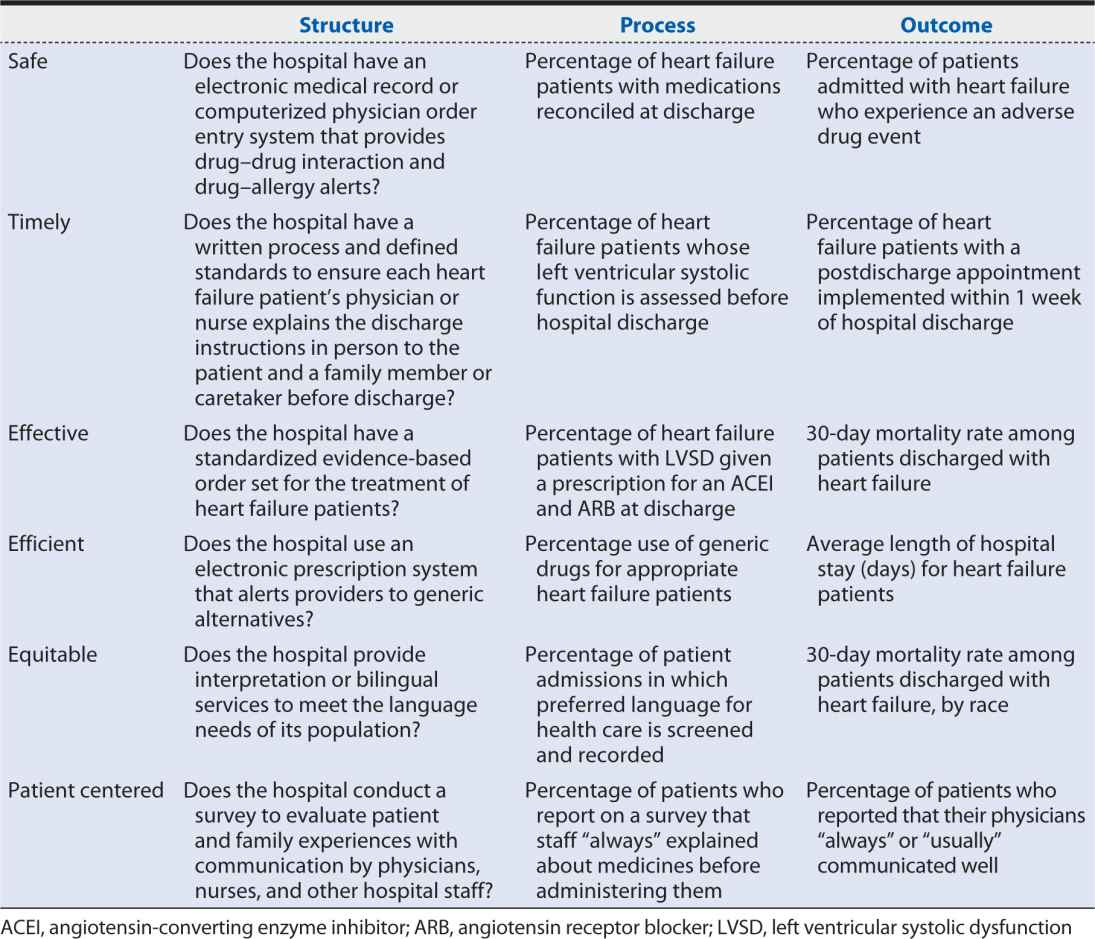

EXAMPLES OF QUALITY MEASURES

Hospital A in the Health Scenario is seeking to evaluate and improve the quality of care it provides to patients admitted for heart failure. Table 15-2 provides examples of structure, process, and outcome measures within each of the six domains of quality identified by the IOM that Hospital A might consider.

Table 15-2. Example structure, process, and outcome measures in the six domains of quality.

USES OF QUALITY MEASURES

Quality of care evaluations are used for a wide range of purposes. These include:

• Public reporting

• Pay-for-performance or value-based purchasing programs

• Informing health policy or strategy at the regional or national level

• Improving the quality of care provided within an organization

• Monitoring performance within an organization or across a region

• Identifying poor performing providers to protect public safety

• Providing information to enable patients or third-party payers to make informed choices among providers.

National Quality Measurement Initiatives

Quality measurement and evaluation play an important role in quality control regulation. They are prominent issues on the national health care agenda, largely through their incorporation into the criteria used by CMS for participation and reimbursement, but also through efforts of non-profit organizations such as the NQF, National Committee for Quality Assurance (NCQA) and the Institute for Healthcare Improvement (IHI).

The Joint Commission

The Joint Commission is an independent, not-for-profit organization that seeks to improve health care “by evaluating health care organizations and inspiring them to excel in providing safe and effective care of the highest quality and value.” Health care organizations seeking to participate in and receive payment from Medicare or Medicaid must meet certain eligibility requirements, including obtaining a certification of compliance with the Conditions of Participation, which are specified in federal regulations. Under the 1965 Amendments to the Social Security Act, hospitals accredited by the Joint Commission were deemed to meet the Medicare Conditions of Participation; in 2008, this “deeming” authority was extended to other organizations CMS approves, but the Joint Commission still evaluates and accredits more than 20,000 health care organizations and programs in the United States.

In 2001, the Joint Commission adopted standardized sets of evidence-based quality measures, moving the focus of the accreditation process away from structural requirements and toward evaluating actual care processes, tracing patients through the care and services they receive, and analyzing key operational systems that directly impact quality of care. The Joint Commission uses the quality measure data collected from accredited hospitals for a number of purposes intended to help improve quality. These include producing quarterly reports summarizing performance data for individual hospitals and health care organizations; highlighting measures showing poor performance issues; and reporting performance on Quality Check, a publicly available website. The Joint Commission’s measures are further used internally by hospitals and health care organizations to drive quality improvement through goal-setting, audit-and-feedback, and executive pay-for-performance programs.

Centers for Medicare and Medicaid Services Quality Measurement and Reporting

CMS’ quality measurement initiatives began in 1997 using the Healthcare Effectiveness Data and Information Set (HEDIS) established by the NCQA to assess the performance of health maintenance organizations and physicians and physician groups. In 2001, DHHS announced The Quality Initiative, which aimed to assure quality health care for all Americans through published consumer information and quality improvement support from Medicare’s Quality Improvement Organizations. Today, public reporting of quality measures is required of all nursing homes, home health agencies, hospitals, and dialysis facilities participating in Medicare, and physicians voluntarily report quality measures through the Physician Quality Reporting System (PQRS). As of 2015, physician reporting is required to avoid financial penalties, and performance is factored into physician reimbursement.

Hospital quality performance data are publicly reported on the Hospital Compare website (http://www.medicare.gov/hospitalcompare/search.html) for:

• Timely and effective care: Hospitals’ compliance rates with evidence-based processes of care for patients with AMI, heart failure, pneumonia, stroke, and children’s asthma, as well as for surgical care, pregnancy and delivery care, and blood clot prevention and management

• Readmissions, complications and deaths: 30-day readmission and mortality rates for AMI, heart failure, and pneumonia patients; 30-day readmission rate for elective hip/knee replacement patients; 30-day hospital-wide readmission rate (medical, surgical and gynecologic, neurologic, cardiovascular, and cardiorespiratory hospital patients are included in this measure); complication rates among hip and knee replacement patients; and rates (and mortality rates) of serious surgical complications

• Patient experience: Selected questions from the HCAHPS survey, mailed to a random selection of adult patients after their discharge from hospital, addressing such topics as how well nurses and doctors communicated, how responsive hospital staff were to patient needs, how well the hospital managed patients’ pain, and the cleanliness and quietness of the hospital environment

• Use of outpatient medical imaging: Measures targeting common imaging tests that have a history of overuse (e.g., magnetic resonance imaging for low back pain) or underuse (e.g., follow-up imaging within 45 days of screening mammography)

Theoretically, such public reporting enables patients to influence quality of care through selective choice of the best available providers. There is little evidence that this occurs, however, partly because there is a lack of awareness, trust, and understanding of the reported quality data, and partly because patients’ choices are often constrained by the insurance plans available to them.

The 2010 Patient Protection and Affordable Care Act (PPACA) brought a renewed focus on hospital quality measures: where, previously, hospitals had been required to report the quality measures above to receive payments from Medicare, PPACA authorized the use of these quality performance data to determine how much hospitals are paid by establishing the Hospital VBP Program and the Hospital Readmissions Reduction Program.

The Hospital VBP Program is a CMS initiative that rewards acute-care hospitals with incentive payments for the quality of care they provide to Medicare patients. Hospital VBP took effect in October 2012, initially placing 1% of hospitals’ base payments “at risk” according to their performance on measures in two areas: (1) clinical processes of care (drawn from those publicly reported for AMI, heart failure, pneumonia, and surgical care) and (2) patient experience (drawn from the HCAHPS survey), weighted 70:30 to obtain a total performance score. CMS evaluates hospitals for both improvement (relative to the hospital’s own performance during a specified baseline period) and achievement (relative to the other hospitals’ performance, with the achievement threshold for points to be awarded being set at the median of all hospitals’ performance during the baseline period) on each measure and uses the higher score toward the total performance score.
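The scoring logic described above (take the higher of the achievement and improvement scores on each measure, then weight the two domains 70:30) can be sketched as follows. This is a simplified illustration, not CMS’ scoring methodology: the point values are hypothetical inputs, the 10-point maximum per measure and the conversion of each domain to a 0 to 100 scale are assumptions made for the example, and the function names are invented here.

```python
MAX_POINTS_PER_MEASURE = 10  # assumption for this sketch

def measure_score(achievement_points: int, improvement_points: int) -> int:
    """Use whichever of the two scores is higher for a given measure."""
    return max(achievement_points, improvement_points)

def domain_score(measures: list) -> float:
    """Percentage of available points earned across a domain's measures."""
    earned = sum(measure_score(a, i) for a, i in measures)
    return 100.0 * earned / (MAX_POINTS_PER_MEASURE * len(measures))

def total_performance_score(process: float, experience: float) -> float:
    """Weight clinical process and patient experience domains 70:30."""
    return 0.70 * process + 0.30 * experience

# Hypothetical (achievement, improvement) points for each measure.
process_measures = [(6, 8), (0, 3), (10, 0)]
experience_measures = [(4, 5), (2, 2)]

process = domain_score(process_measures)
experience = domain_score(experience_measures)
tps = total_performance_score(process, experience)
print(f"Process {process:.1f}, experience {experience:.1f}, total {tps:.1f}")
```

Taking the higher of the two scores means a hospital that starts below the achievement threshold can still earn points by improving on its own baseline.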

For the second year of the Hospital VBP program (i.e., starting October 2013), CMS added an Outcome Measures domain containing the 30-day mortality measures for AMI, heart failure, and pneumonia. Planned future revisions include adding an Efficiency domain and adjusting the included measures and weighting scheme more heavily toward outcomes, patient experience of care, and functional status measures. The amount of hospitals’ reimbursement placed at risk according to their performance also changes each year, increasing by 0.25 percentage points annually until it reaches a maximum of 2% in October 2016. As might be imagined, this represents a substantial sum at the national level: in the program’s first year, with only 1% placed at risk, almost $1 billion of Medicare reimbursement was tied to hospitals’ performance.77
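The at-risk schedule described above is simple arithmetic and can be written out directly. In this sketch, program year 1 is taken to be the year starting October 2012; the function names and the hospital’s base payment figure are hypothetical and used only for illustration.

```python
def percent_at_risk(program_year: int) -> float:
    """Percentage of base payments at risk; year 1 starts October 2012."""
    return min(1.0 + 0.25 * (program_year - 1), 2.0)

def dollars_at_risk(base_payments: float, program_year: int) -> float:
    """Dollar amount tied to performance for a given program year."""
    return base_payments * percent_at_risk(program_year) / 100.0

HYPOTHETICAL_BASE_PAYMENTS = 100_000_000  # illustrative hospital, in dollars

schedule = [percent_at_risk(year) for year in range(1, 7)]

for year in range(1, 6):
    start = 2011 + year  # year 1 -> October 2012, year 5 -> October 2016
    amount = dollars_at_risk(HYPOTHETICAL_BASE_PAYMENTS, year)
    print(f"October {start}: {percent_at_risk(year):.2f}% at risk "
          f"(${amount:,.0f})")
```

For a hospital of this hypothetical size, the amount tied to performance doubles between the first program year and the year the 2% ceiling is reached.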

The Hospital Readmissions Reduction Program also took effect in October 2012, reducing Medicare payments to hospitals with “excess” readmissions within 30 days of discharge, defined in comparison with the national average for the hospital’s set of patients with the applicable condition, looking at 3 years of discharge data and requiring a minimum of 25 cases to calculate a hospital’s excess readmission ratio for each applicable condition. The initial list of “applicable conditions” was limited to those that have been reported publicly since 2009 (AMI, heart failure, and pneumonia) but will be expanded in the future. In the program’s first year, the maximum penalty that applied was 1% of the hospital’s base operating diagnosis-related group (DRG) payments, but in October 2014, this rose to 3%. In the program’s first year, about 9% of hospitals incurred the maximum penalty, roughly 30% incurred none, and the total penalty across the more than 2200 hospitals receiving penalties was about $280 million (0.3% of total Medicare base payment to hospitals). From an individual hospital’s perspective, Ohio State University’s Wexner Medical Center estimated a loss of about $700,000 under a 0.64% penalty. Because the program is structured around performance relative to the national average (rather than a fixed performance target), roughly half the hospitals will always face a penalty, and the overall size of the penalty will remain much the same, even if, as it is hoped, the hospitals improve overall over time.
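The dollar figures quoted above can be cross-checked with a few lines of arithmetic. This sketch does not reproduce CMS’ excess readmission ratio calculation, which the text does not specify; it only applies a penalty rate to a payment base and inverts that relationship. Because the quoted figures are rounded, the results are approximate, and the function names are invented for the example.

```python
def implied_base_payments(penalty_dollars: float, penalty_rate: float) -> float:
    """Payment base implied by a known penalty amount and penalty rate."""
    return penalty_dollars / penalty_rate

def penalty(base_payments: float, penalty_rate: float, cap: float) -> float:
    """Penalty in dollars, with the rate limited to the program maximum."""
    return base_payments * min(penalty_rate, cap)

# Figures quoted in the text (both are approximate).
hospital_base = implied_base_payments(700_000, 0.0064)
national_base = implied_base_payments(280_000_000, 0.003)

# The same hospital's exposure at the first-year and later maximums.
first_year_max = penalty(hospital_base, 0.05, cap=0.01)
later_max = penalty(hospital_base, 0.05, cap=0.03)

print(f"Implied hospital base payments: ${hospital_base:,.0f}")
print(f"Implied national base payments: ${national_base:,.0f}")
print(f"Penalty at 1% cap: ${first_year_max:,.0f}; at 3% cap: ${later_max:,.0f}")
```

The calculation suggests that the quoted hospital receives on the order of $109 million in base DRG payments, so the rise in the maximum penalty from 1% to 3% would roughly triple its maximum exposure.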

Proprietary Report Cards

In the past 2 decades, numerous quality “report cards” have entered the scene, many of them developed and conducted by for-profit companies. Examples include those issued by Consumer Reports, US News & World Report, Healthgrades, and Truven Health Analytics. Although hospital marketing departments frequently tout their facility’s rankings in these reports, the methods by which they are produced typically do not bear scrutiny when examined for criteria such as transparent methodology, use of evidence-based measures, measure alignment, data sources, current data, risk adjustment, data quality, consistency of data, and preview by hospitals. Many of these report cards use a combination of claims-based and clinical measures (often representing data already reported on CMS’ Hospital Compare website) and their own unique measures based on things like reputation or public opinion.

Local Quality Measurement and Improvement

Local quality measurement and monitoring became popular in the 1990s as health care organizations and providers adopted continuous quality improvement (also called total quality management) from the Japanese industrial setting. Key principles are:

1. “Quality” is defined in terms of meeting “customers’” needs, with customers including patients, clinicians, and others who consume the services of the institution.

2. Quality deficiencies typically result from faulty systems rather than incompetent individuals, so energy should be focused on improving the system.

3. Data, particularly outcomes data, are essential drivers and shapers of systems improvement, used to identify areas where improvements are needed and to determine whether the actions taken to address those areas are effective.

4. Management and staff at all levels of the organization must be involved in quality improvement, and a culture emphasizing quality must be created.

5. Quality improvement does not end; there is always room for further progress.

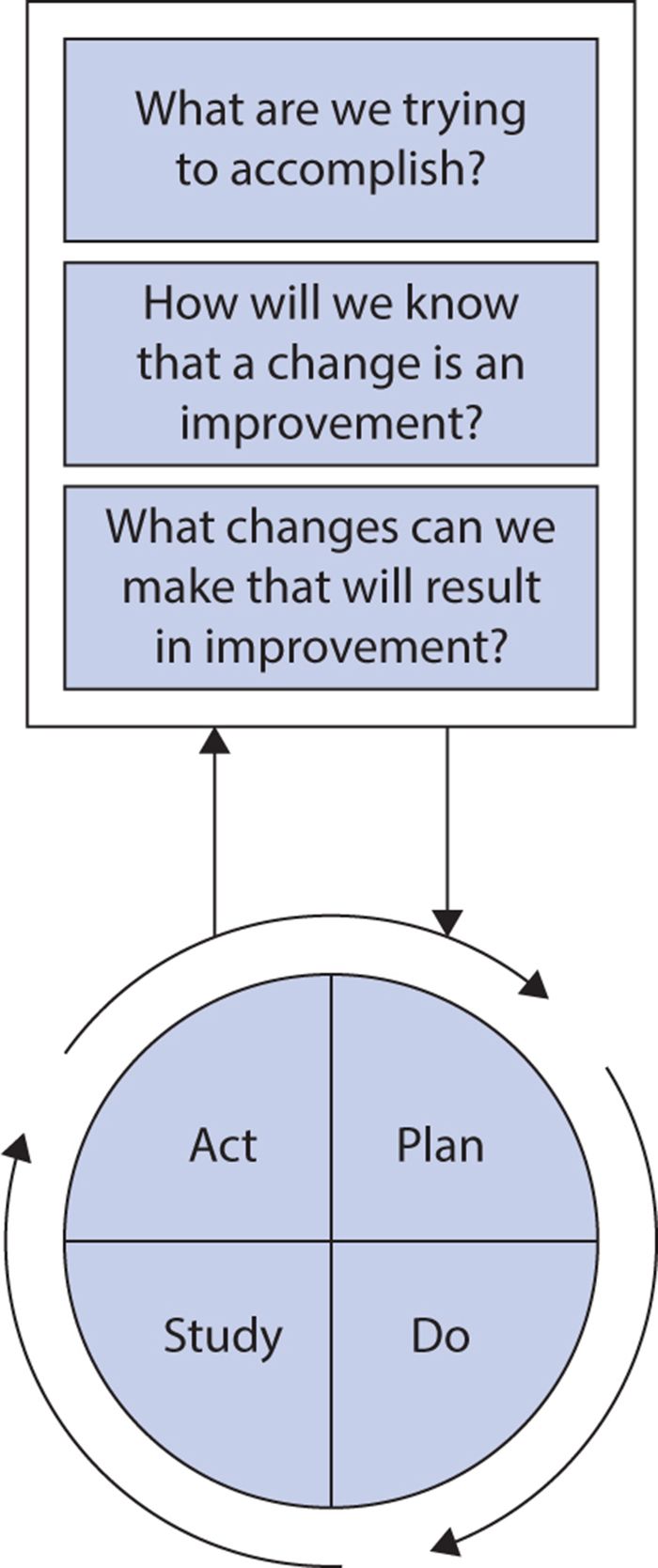

The Institute for Healthcare Improvement advocates use of the Model for Improvement (Figure 15-1) to guide implementation of these principles in health care. The model combines three simple questions with the plan, do, study, act (PDSA) cycle developed by W. Edwards Deming.

Figure 15-1. The model for improvement. (Reproduced with permission from Institute for Healthcare Improvement, Cambridge, MA; 2014; available at http://www.IHI.org.)

The PDSA cycle is an iterative process, repeating the following four steps until the desired improvement is achieved:

1. Plan a change aimed at quality improvement (including a plan for data collection and analysis to evaluate success).

2. Do the tasks required to implement the change, preferably on a pilot scale.

3. Study the results of the change, analyzing data collected to determine whether the improvement sought was achieved.

4. Act on results (alter the plan if needed or adopt more widely if it showed success).

Hospital A from the Health Scenario applies this methodology to its efforts to improve the quality of heart failure care. Hospital A knows, from the penalties it received under the Medicare VBP and Readmissions Reduction Programs, that it needs to improve compliance with the publicly reported heart failure processes of care, to decrease its 30-day readmissions, and to improve patients’ experiences of care.

Reviewing the literature, clinical quality leaders at Hospital A learn that adopting a standardized evidence-based order set for heart failure can significantly increase compliance with recommended practices for inpatient heart failure care. They also learn that involving pharmacists in medication reconciliation and patient education on discharge instructions for heart failure patients shows promise in reducing readmission rates. Finally, they find some evidence that providing language translation services for communications with physicians and nurses improves patients’ experience of care. They decide to focus initially on improving performance on the publicly reported heart failure processes of care and documenting patients’ language preferences to determine the translation services that are needed. Their chosen intervention is hospital-wide implementation of a standardized order set based on the current American College of Cardiology/American Heart Association clinical practice guidelines.

Quality measures chosen: (1) The proportion of eligible heart failure patients for whom physicians used the standardized order set, (2) performance on the publicly reported heart failure process measures, and (3) the proportion of patients with a documented language preference

Performance targets: (1) Physicians use the standardized order set for at least 80% of eligible heart failure patients; (2) performance on each of the publicly reported process of care measures is at or above the national average for that measure in the most recent period reported on the Hospital Compare website (April 2012–March 2013); and (3) all admitted patients have a language preference documented. Statisticians in the quality improvement department also develop an analytic plan to compare performance on the heart failure process measures for 12 weeks both before and after implementation of the order set.

Definition of the target population: For order set use and the publicly reported heart failure processes of care: all patients discharged with a final diagnosis of heart failure; for documentation of language preference: all patients

Data sources and collection: Hospital A obtains data on the publicly reported heart failure measures from the integrated outcomes, resource, and case management system it uses to collect the data needed to construct its billing charges. The information technology department adds a field to this system for patients with a heart failure diagnosis to record whether or not the standardized order set was used and, for all patients, to record language preference.

Evaluation of the improvement initiative: During the first 12 weeks of the improvement initiative, Hospital A sees documentation of a language preference reach 95% and use of the standardized order set reach 34% of eligible heart failure patients. The quality leaders do not see any noticeable changes in their performance on the process of care measures. They believe the low adoption rate of the order set explains this lack of improvement, so they initiate an intensive program to encourage physicians to use it, including education on the underlying scientific evidence, demonstrations on how to access and use it within the electronic medical record system, and fortnightly physician-specific reports showing the percentage of eligible heart failure patients for whom each physician had used the order set, compared with the percentages achieved by his or her peers.
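A physician-specific report of the kind described above could be produced from the order set field added to the hospital’s data system. The following is a minimal sketch: the record layout, physician identifiers, and counts are hypothetical, and nothing here describes Hospital A’s actual system.

```python
from collections import defaultdict

def order_set_use_by_physician(encounters: list) -> dict:
    """Percentage of eligible patients with order set use, per physician."""
    used = defaultdict(int)
    eligible = defaultdict(int)
    for physician, used_order_set in encounters:
        eligible[physician] += 1
        if used_order_set:
            used[physician] += 1
    return {p: 100.0 * used[p] / eligible[p] for p in eligible}

def overall_use(encounters: list) -> float:
    """Percentage of all eligible patients for whom the order set was used."""
    return 100.0 * sum(1 for _, u in encounters if u) / len(encounters)

# Hypothetical eligible heart failure encounters: (physician, order set used).
encounters = [
    ("Dr. A", True), ("Dr. A", False), ("Dr. A", True), ("Dr. A", True),
    ("Dr. B", False), ("Dr. B", False), ("Dr. B", True), ("Dr. B", False),
]

report = order_set_use_by_physician(encounters)
peer_rate = overall_use(encounters)
for physician, rate in sorted(report.items()):
    print(f"{physician}: {rate:.0f}% (all physicians: {peer_rate:.0f}%)")
```

Reporting each physician’s rate beside the overall rate is one simple way to present the peer comparison; other comparators, such as a departmental median, would work equally well.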

After a further 12 weeks, Hospital A sees use of the standardized order set approach 60% of eligible patients and performance on all three of the evidence-based processes of care improve significantly from preintervention levels, reaching the targeted national average for left ventricular function assessment and prescription of ACEIs and ARBs for LVSD. Hospital A is pleased with the progress and decides to continue its efforts to increase adoption of the standardized order set, placing particular emphasis on the portion dealing with discharge instructions as they introduce a program to integrate pharmacists into the medication reconciliation and discharge instruction process. Having used the data collected on language preference to identify the predominant translation needs in Hospital A’s patient population, the quality leaders next turn their attention to improving performance on the provider communication measures, initiating a new PDSA cycle to implement the chosen translation resources and increase awareness and use of them by physicians, nurses, and patients.
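One way to carry out the before-and-after comparison in the analytic plan is a two-proportion z-test on compliance with a process measure. The choice of test is an assumption made for illustration, since the text does not say which method Hospital A’s statisticians used, and the patient counts below are hypothetical.

```python
import math

def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple:
    """Return (z, two-sided p-value) comparing proportions x1/n1 and x2/n2."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: patients receiving the recommended process of care
# in the 12 weeks before and the 12 weeks after order set implementation.
before_compliant, before_eligible = 60, 100
after_compliant, after_eligible = 78, 100

z, p_value = two_proportion_z_test(
    before_compliant, before_eligible, after_compliant, after_eligible
)
print(f"z = {z:.2f}, two-sided p = {p_value:.4f}")
```

A simple pre-post comparison like this cannot separate the effect of the order set from other changes occurring over the same period, so it is best read alongside the adoption data rather than as proof of causation.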

THE NATIONAL QUALITY STRATEGY

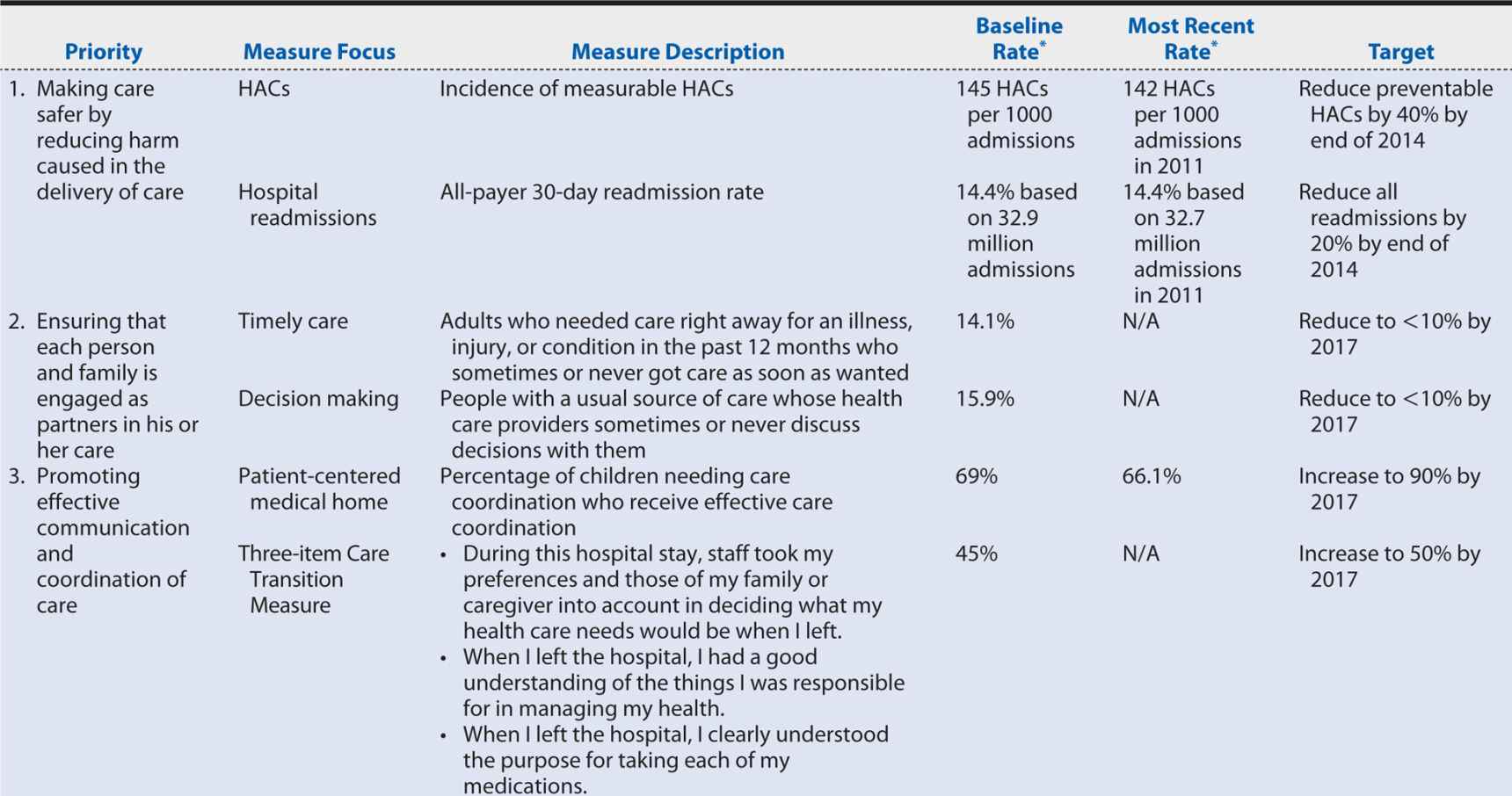

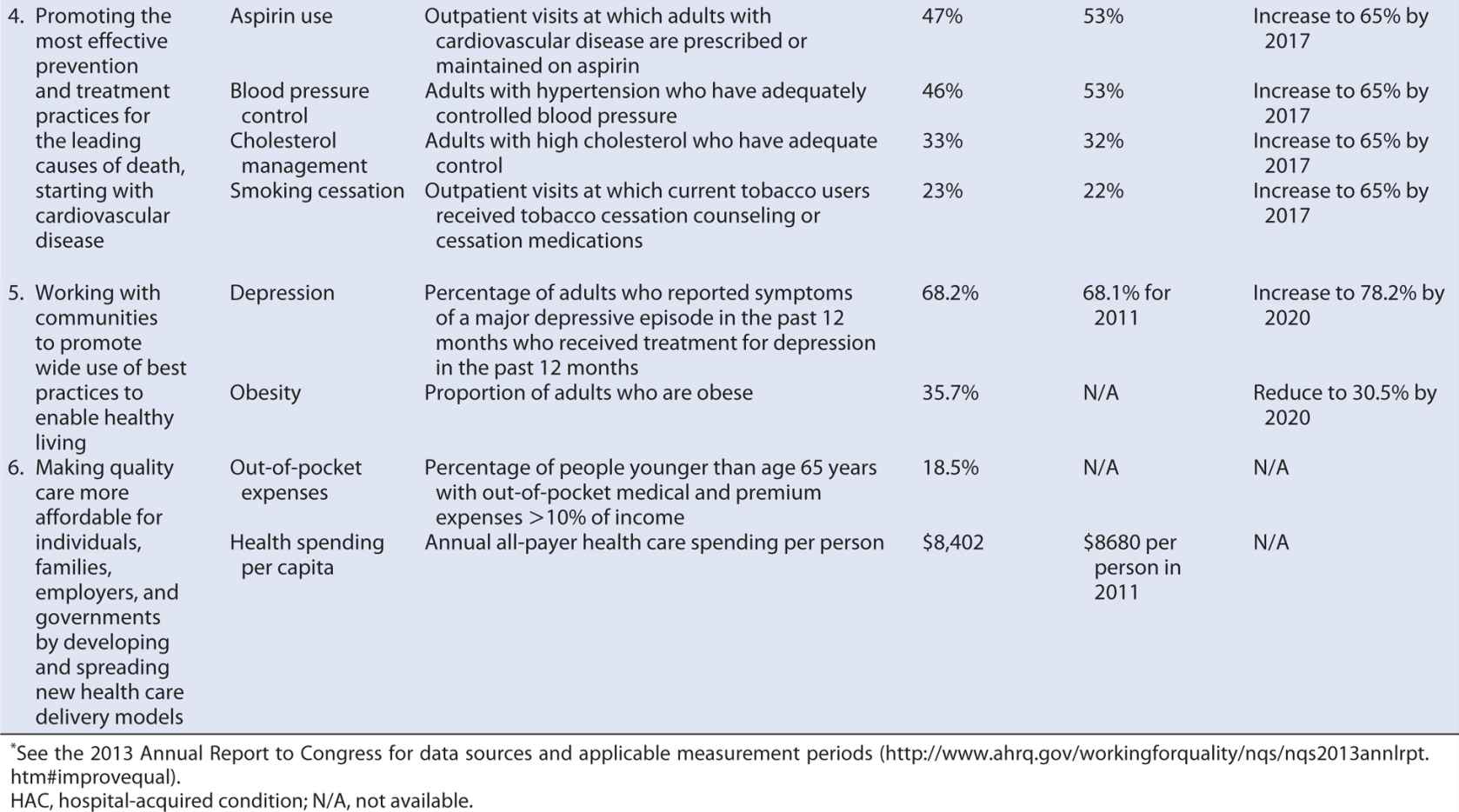

Section 3011 of PPACA required the Secretary of DHHS to establish a national strategy for quality improvement in health care that sets priorities to guide improvement efforts and provides a strategic plan for achieving the goal of increasing access to high-quality, affordable health care for all Americans.87 The National Quality Strategy (NQS) was developed through a collaborative process that called on a wide range of health care stakeholders for contributions and comment, incorporated input from the National Priorities Partnership (52 public, private, for-profit, and nonprofit organizations working to improve the quality and value of health care in the United States) and culminated in public comment on a proposed approach, principles, and priorities. Table 15-3 summarizes the NQS’ resulting aims, priorities, and principles.

Table 15-3. Aims, priorities, and principles of the National Quality Strategy.

Building consensus on how to measure quality is a critical step in implementing the NQS to enable patients; providers; employers; health insurance companies; academic researchers; and local, state, and federal governments to align their efforts and maximize the gains in quality and efficiency. Agencies within DHHS are moving toward common performance measures across programs (reducing the reporting burden on providers), and groups such as the Measures Application Partnership, a public–private partnership that reviews performance measures being considered for federal public reporting and performance-based payment programs, are working more broadly to align measures used in public and private sector programs. The annual NQS progress reports have identified national tracking measures, goals, and target performance levels relevant to each of the six priority areas, providing some guidance for such alignment.

CURRENT HEALTH CARE QUALITY STATUS AND TARGETS

The United States’ poor performance on health and health care quality measures relative to other industrialized nations is well-known, as is the much higher per capita health care spending. In 2011, the United States spent $8608 per capita on health care, an amount exceeded only by Norway, Luxembourg, and Switzerland. By comparison among other Western nations, the Netherlands and Australia each spent roughly $6000, Germany and France spent around $5000, and the United Kingdom and New Zealand spent approximately $3500. The United States’ health care spending amounts to about 17% of its gross domestic product compared with the 12% or less of similarly industrialized nations.

Various theories have been advanced for the United States’ disproportional spending: for example, the aging of the U.S. population, poorer health behaviors, racial and ethnic diversity and associated health disparities, and greater utilization or supply of health care resources. However, a 2012 comparison between the United States and 12 other industrialized Organization for Economic Cooperation and Development nations demonstrated that the United States has a comparatively young population and that adults were less likely to smoke than in the comparison nations; that although the health disadvantage is more pronounced in low-income minority populations, it persists in non-Hispanic whites and adults with health insurance, college education, high income, or healthy behaviors; and that it has fewer physicians, physician visits, and hospital beds and admissions per capita than was typical of the comparison nations. U.S. adults, however, were the most likely to be obese (a condition to which an estimated 10% of health care spending may be attributable), and U.S. hospital stays (although shorter) were almost twice as expensive as those in other countries, a difference that could result from more resource-intensive hospital stays, higher prices for services, or a combination thereof. Fees for physician services, diagnostic imaging, procedures, and prescription medications are all higher in the United States, and the pervasive medical technology and associated prices appear to be potent drivers behind the “supersized” portion of resources devoted to health care in the United States.

The high spending might be justifiable if the United States had proportionately better health and health outcomes, but that is not the case—and the health disadvantage seems to be increasing. A 2013 report from the National Research Council and IOM found that individuals in the United States in all age groups up to 75 years have shorter life expectancies than their peers in 16 industrialized nations and that the United States has comparatively high prevalence and mortality rates for multiple diseases, faring worse than average across nine health domains: adverse birth outcomes; injuries, accidents, and homicides; adolescent pregnancy and sexually transmitted infections; HIV and AIDS; drug-related mortality; obesity and diabetes; heart disease; chronic lung disease; and disability. To be fair, the United States did perform better than average on control of hypertension and serum lipids, cancer and stroke mortality rates, and life expectancy after 75 years. These “strengths,” however, reflect what the U.S. health care system tends to reward (through payment), focusing on prolonging life as death encroaches rather than on promoting the healthy behaviors and preventive measures that, starting early in life, can avoid much subsequent loss of life and quality of life. Determinants underlying the United States’ health disadvantage include:

1. The lack of universal health insurance coverage or a tax-financed system that ensures health care affordability (something every other industrialized nation provides)

2. A weaker foundation in primary care

3. Greater barriers to access and affordable care

4. Poor care coordination

5. Unhealthy behaviors, including high-calorie, low-nutrition diets; drug abuse; alcohol-involved motor vehicle crashes; and high rates of firearm ownership

We know, because other countries have achieved it, that a better health care system and a healthier population are realistic goals. The question is what the people of the United States, particularly the stakeholders in the health care system, are prepared to do to achieve them. Based on what we see in other countries, it will likely require health care to become less profit focused, bringing the costs and use of physician services, hospital stays, and procedures and imaging into line with international practice. It will also likely require resources to be shifted away from developing and adopting expensive technology that offers only incremental gains in health to a small number of people and toward preventive care and public health initiatives, for which the gains per dollar spent are much larger. These are unlikely to be popular changes, but they are what the data show the United States needs, and true scientists will not ignore that evidence. Nor will those who are serious about quality improvement.

Some steps are being taken in this direction. Several of the National Quality Strategy priorities (discussed earlier) address the determinants of the U.S. health disadvantage, targeting care coordination, prevention, and healthy living. Progress on these and the other priority areas is slow (Table 15-4), but at least relevant measures, baseline rates, and target performance levels have been identified. Further progress can be achieved if all health care stakeholders incorporate the National Quality Strategy priorities and associated measures into their own daily operations and, applying the principles of quality improvement, enter into PDSA cycles within those unique contexts.

Table 15-4. Measures, targets and progress identified for the National Quality Strategy Priority Areas.

SUMMARY

In this chapter, the measurement and improvement of health care quality were illustrated using the example of inpatient heart failure care. Quality is defined in terms of six principal domains: safety, timeliness, effectiveness, equity, efficiency, and patient centeredness. Quality measures can be divided into three broad types (structure, process, and outcome), each of which has particular strengths and weaknesses and provides insight into different aspects of quality. The choice between structure, process, and outcome measures (or some combination thereof) depends on the purpose and context of the particular quality evaluation and on whose perspective quality is being assessed from. Generally speaking, outcome measures are most useful when the perspective is broad because they can reflect the influence of a wide range of factors. Process measures may be more appropriate for narrower perspectives (e.g., evaluating an individual provider’s performance) because they are less subject to the influence of factors outside the provider’s control. Structure measures provide little insight into the care that is actually provided but are useful for identifying differences among health care settings and contexts that contribute to variations in care and thus suggest opportunities for improvement.

Health care quality data can be derived from a variety of sources but are most typically obtained from clinical records, provider and payer administrative databases, and surveys. Particularly with the first two sources, it is important to keep in mind that the data were not collected or recorded for the purpose of quality measurement but rather to facilitate the provision of care and the daily operations of the health care organization. As such, they may not contain the level of detail desirable for quality measurement, or they may contain inaccuracies that, although not problematic for their primary purposes, distort the picture of the quality of care provided.

Quality measures have a variety of applications, ranging from internal efforts to improve the quality of care provided within an organization to informing national health care policy. Recent public attention has mostly been focused on public reporting efforts and the integration of quality measures into pay-for-performance and value-based purchasing programs. However, much of the day-to-day application of quality measures comes in the context of continuous quality improvement initiatives implemented by or within individual practices, departments, hospitals, or health care organizations. Borrowed from industrial settings, continuous quality improvement relies on the PDSA cycle: plan a change aimed at quality improvement, do the tasks required to implement the change, study the results of the change, and act on the results. The National Quality Strategy, established by the DHHS under the PPACA, is intended to provide guidance that will help providers; payers; researchers; and local, state, and federal governments align their quality improvement initiatives to enable both more efficient and more effective improvement. It is hoped that success in these areas will bring the United States’ health care spending and outcomes into line with those of other industrialized nations, which it currently outspends and underperforms.

STUDY QUESTIONS

1. Compliance with evidence-based recommendations for venous thromboembolism prophylaxis in the intensive care unit is an example of a quality measure most connected with which of the IOM domains of quality?

A. Effective

B. Patient centered

C. Equitable

D. Efficient

2. The proportion of patients with low back pain who had magnetic resonance imaging without trying recommended treatments first, such as physical therapy, is an example of which type of measure within Donabedian’s framework for measuring quality of care?

A. Structure

B. Efficiency

C. Process

D. Effective

3. The proportion of people who report being uninsured all year, by English proficiency and place of birth, is an example of a quality measure most connected with which of the IOM domains of quality?

A. Equitable

B. Patient centered

C. Access to care

D. Safety

4. A safety net hospital has a higher unadjusted 30-day readmission rate for heart failure than the private hospital in an affluent neighborhood across town despite showing better performance on the publicly reported recommended processes of care for heart failure. The safety net hospital has a high volume of indigent patients, but the private hospital’s patients typically have higher education levels, fewer comorbidities, and better access to care. A possible explanation for the unadjusted 30-day readmission result is

A. surveillance bias.

B. reporting bias.

C. information bias.

D. confounding bias.

5. In 1998, Hospital A, a large academic teaching hospital, had a 2.5% operative mortality rate among patients undergoing CABG. In 1999, Hospital A partnered with local cardiac and vascular surgeons to open a neighboring specialty heart and vascular hospital, equipped to perform percutaneous coronary intervention (PCI) but not open heart surgery. From then on, the vast majority of patients with single- or two-vessel disease underwent revascularization via PCI at the specialty hospital, and patients with multivessel disease or other factors making their condition more complex continued to be treated via CABG at Hospital A. In 1999 and 2000, Hospital A saw a sharp increase in its operative mortality rate. By focusing quality improvement efforts on surgical technique and postsurgical care, it was able to reduce the mortality rate somewhat in subsequent years but has not been able to equal or better the 2.5% achieved in 1998. An explanation for this result is

A. surveillance bias.

B. reporting bias.

C. selection bias.

D. confounding bias.

6. Looking at whether a physician practice routinely conducts a survey to assess patient experience related to access to care, communication with the physician and other practice staff, and coordination of care is a(n) _________ measure within Donabedian’s framework, addressing the _________ domain of health care quality.

A. Outcome, patient-centered

B. Structure, timeliness

C. Process, timeliness

D. Structure, patient-centered

7. Administrative data sources (e.g., hospital billing data or health insurance claims data) are frequently used for quality measurement because

A. the data are routinely collected for operational purposes and can be obtained rapidly and at relatively low cost for large numbers of patients.

B. the data contain the comprehensive clinical detail needed to risk adjust outcome measures to enable fair comparisons among providers.

C. in the United States, thanks to the way health care charges are constructed, they are a rich source of outcomes data.

D. they provide insight into the health care system from the patient’s perspective, enabling assessment of the patient-centered domain of quality.

8. Quality of care measures are useful for

A. patients or payers trying to choose among health care providers.

B. state or government agencies responsible for setting health care policies and priorities.

C. hospitals seeking to improve the care they provide their patients.

D. all of the above.

9. Which of the following quality measures is NOT typically assessed through patient surveys, such as the Consumer Assessment of Healthcare Providers and Systems?

A. Communication skills of providers

B. Technical skills of providers

C. Access to care

D. Understanding of medication instruction

10. Medical records are useful for all of the following purposes EXCEPT

A. assessing medical care processes.

B. monitoring quality improvement efforts.

C. assessing patient experience.

D. disease-specific registries.
