EMERGENCY TOXICOLOGY

Purpose

The majority of poisoned patients enter the health care system in the emergency department. Treatment is often based on exposure history and signs and symptoms of poisoning based on physical examination. Laboratory testing may be performed to confirm the physician’s diagnosis or to identify a toxin in the absence of a differential diagnosis.

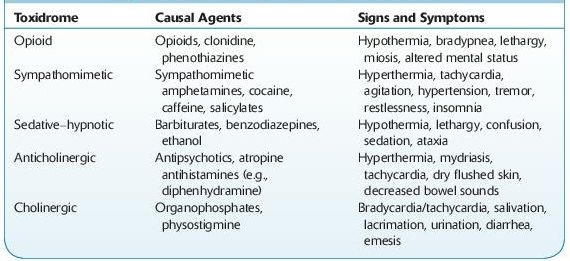

Knowledge of toxidromes is important as a starting point for patient evaluation (Table 14-1). These consist of a collection of signs and symptoms that are typically produced by specific toxins.

TABLE 14–1. Signs and Symptoms of Common Toxidromes

Application

Testing offered by clinical toxicology laboratories consists of screening and confirmation.

Screening Methods and Limitations

Screening tests are usually conducted on urine. These require little or no sample preparation and are frequently immunoassay based. These tests have high sensitivity; however, they have limitations due to moderate specificity. Many commercially available tests cross-react with multiple drugs within a class due to choice of target drug. They may also be sensitive to adulterants. Clinicians must be aware of the commercial tests utilized in their laboratory, as cross-reactivities differ between manufacturers and within manufacturers over time.

These tests are typically performed on automated chemistry analyzers. Although tests for individual drugs or drug classes are available, many hospital laboratories offer them as panels, which are available as “stat” tests.

Immunoassay testing may be based on

Radioimmunoassay (RIA)

Enzyme multiplied immunoassay technique (EMIT)

Enzyme-linked immunosorbent assay (ELISA)

Fluorescence polarization immunoassay (FPIA)

Kinetic interaction of microparticles in solution (KIMS)

Cloned enzyme donor immunoassay (CEDIA)

Immunoassays are typically qualitative assays, although semiquantitative results are possible with some kits. For qualitative testing, the instrument is calibrated at one concentration, called the cutoff concentration. For example, when utilizing EMIT, all specimens that have absorbance values equivalent to this cutoff calibrator or greater will be reported as positive. Manufacturers provide this calibrator, so the laboratory has no choice in this concentration unless they alter the kit provided (e.g., dilution, to obtain alternate/user-defined cutoffs).
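
As a minimal sketch of the qualitative reporting rule described above, the following Python function compares a specimen's response with the response of the cutoff calibrator. The function name and the numeric values are illustrative assumptions and do not come from any manufacturer's kit. The direction of the comparison follows the EMIT example; other assay formats may respond in the opposite direction.

```python
def classify_screen(specimen_response: float, cutoff_response: float) -> str:
    """Report a qualitative immunoassay result.

    A specimen whose response is equal to or greater than the response of
    the cutoff calibrator is reported as positive (EMIT-style convention).
    """
    if specimen_response >= cutoff_response:
        return "positive"
    return "negative"


# Hypothetical absorbance values, for illustration only.
cutoff = 0.40
for response in (0.45, 0.40, 0.39):
    print(response, classify_screen(response, cutoff))
```

Because the instrument is calibrated at a single concentration, the result says only whether the response reached the cutoff; it does not say how much drug is present.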

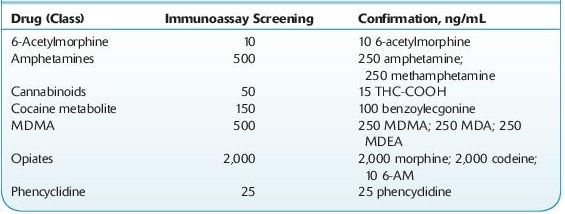

Cutoff concentrations for these kits have historically been set with reference to the DHHS SAMHSA–mandated cutoffs for federal workplace drug testing, the so-called NIDA 5 drugs/classes (PCP, opiates, cannabinoids [marijuana], cocaine metabolite, amphetamines). These cutoff concentrations are not generally appropriate for clinical use, since the cutoff values for several drugs or drug classes are fairly high (see Table 14-2). High cutoffs decrease the likelihood of false-positive results, because workplace testing targets the detection of drug abuse rather than legitimate drug use (see Forensic Toxicology).

TABLE 14–2. U.S. DHHS Cutoff Concentrations for Urine

Practitioners should also be aware of the relative cross-reactivities of the tests ordered. For example, immunoassays for opiates target morphine and typically do not produce positive results with samples containing synthetic and semisynthetic opioids such as oxycodone, fentanyl, propoxyphene, and tramadol.

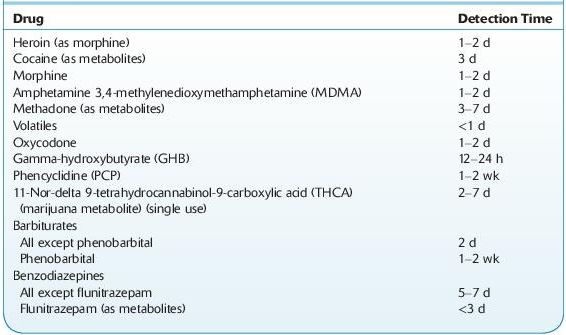

Table 14-3 lists the detection times of several drugs in urine. Note that the variables that must be considered include dose, frequency and route of administration, formulation, and patient-related factors (e.g., disease, other drugs, genetic polymorphisms).

TABLE 14–3. Approximate Detection Times in Urine of Some Drugs of Abuse

Confirmation Methods and Limitations

Confirmation tests are typically performed following a positive screening result. Confirmatory tests are ordered if it is necessary to identify a specific drug, obtain a quantitative result, or make a determination for legal purposes. For example, a positive opiate immunoassay result will not establish the identity of the opiate; a more specific test is required. Confirmation tests are typically chromatography based and are not performed on a “stat” basis. Chromatography is a separation process based on the differential distribution of sample constituents between a mobile phase and a stationary phase. It is a separation technique, not an identification technique. Mass spectrometry provides identification, since it provides mass-to-charge information characteristic of individual drugs. Identification may not be necessary in therapeutic drug monitoring (TDM). Sample pretreatment, extraction, and complex instrumental analysis are required. Common methods used for confirmation tests are

Gas chromatography (GC)

High-performance liquid chromatography (HPLC)

GC/mass spectrometry (GC/MS, GC/MS/MS)

Liquid chromatography/mass spectrometry (LC/MS, LC/MS/MS)

Interpretation of Quantitative Results in Urine

Drug concentrations in urine are not reflective of the dose of drug administered or a specific dosing regimen. Semiquantitative results provided by immunoassays may reflect contributions from more than one drug. For example, a presumptive positive immunoassay screen for opiates may be due to the presence of morphine, heroin, 6-acetylmorphine, and codeine in the specimen. Confirmation testing should provide identification of each drug present and may also provide quantitative results for each specific drug. In these instances, the laboratory may report total drug (conjugated plus unconjugated) or free drug (unconjugated only).

The concentration of drug present in a random urine specimen is the result of the drug delivery system, acute or chronic drug administration, the time of specimen collection, the hydration status of the individual, renal and hepatic function, urine pH, the presence of other drugs resulting in drug interactions, individual pharmacokinetics, and pharmacogenetic polymorphisms. Urine drug/creatinine ratios may be calculated in order to minimize effects on drug concentrations due to changes in the fluid intake of the patient. Monitoring over time may assist in the determination between abstinence and renewed drug use.
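
The drug/creatinine ratio mentioned above can be sketched as follows. This is an illustration under stated assumptions: the function name, the choice of units (ng of drug per mg of creatinine), and the example values are hypothetical, and a laboratory may report the ratio in other units.

```python
def drug_creatinine_ratio(drug_ng_per_ml: float, creatinine_mg_per_dl: float) -> float:
    """Return the urine drug concentration normalized to creatinine.

    The result is expressed in ng of drug per mg of creatinine.
    """
    if creatinine_mg_per_dl <= 0:
        raise ValueError("creatinine must be greater than zero")
    # 1 dL = 100 mL, so mg/dL divided by 100 gives mg/mL.
    creatinine_mg_per_ml = creatinine_mg_per_dl / 100.0
    return drug_ng_per_ml / creatinine_mg_per_ml


# Hypothetical values: the second specimen is the first one diluted twofold.
concentrated = drug_creatinine_ratio(150.0, 100.0)
diluted = drug_creatinine_ratio(75.0, 50.0)
print(concentrated, diluted)
```

In this example the raw drug concentration halves on dilution while the ratio is unchanged, which is why the ratio is less sensitive to fluid intake than the concentration alone.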

Specimen Validity and Drug Testing Background

Specimen validity is an important but often overlooked aspect of laboratory testing. Validity refers to the correct specimen identity (i.e., a urine sample is in fact human urine). The collectors of samples in physician offices and other sites have the responsibility of ensuring that adequate specimens are collected from patients. The validity of a specimen may be questioned if the sample is substituted or adulterated.

A substituted sample is a substance provided in place of the donor’s specimen. This may be drug-free urine (from another individual) or another liquid such as water.

An adulterated sample is a specimen to which substance(s) have been added to destroy the drug in the sample or interfere with the analytical tests utilized to detect drugs. Common additives include vinegar, bleach, liquid hand soap, lemon juice, and household cleaners. In the last several years, commercial products have become available to subvert drug tests. These products have been found to contain substances that include glutaraldehyde, sodium chloride, chromate, nitrite, surfactant, and peroxide/peroxidase.

In addition, specimens may be diluted by adding liquids to the urine at the time of collection to decrease the concentration of drug in the sample below the cutoff concentration used for the test. In vivo dilution involves the ingestion of diuretics and other substances to remove the drugs from the body or dilute the urine, for example, drinking an excessive quantity of water before a drug test.

Minimizing Specimen Validity Issues at the Collection Site

In many industries where urine is collected for drug testing for nonmedical purposes (e.g., preemployment screening), specimens are collected under chain of custody, and several physical procedures are in place to minimize the likelihood of specimen substitution or adulteration. These procedures include witnessing the collection, not providing access to water in the bathroom, and coloring toilet bowl water. In addition, donors may not be permitted to wear loose clothing in which a substituted specimen could be hidden. Before the specimen is sealed in the collection container with tamper-resistant tape, the collector may record the temperature of the specimen (a range of 90–100°F is considered normal for validity testing) as well as the urine color.

Urine Characteristics

Physical, chemical, or DNA tests may be used to assure the validity of a specimen. These tests are most frequently requested in connection with urine drug testing, especially for drugs of abuse such as cannabinoids (marijuana), heroin, and cocaine.

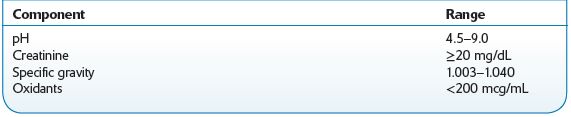

Creatinine, specific gravity, and pH are tests performed to assess whether a specimen is consistent with normal human urine. The U.S. Department of Health and Human Services Mandatory Guidelines specify acceptable ranges for these tests (for federally regulated drug testing specimens), and clinical laboratories and other providers have tended to adopt these values, some with slight modifications.

Creatinine is formed as a result of creatine metabolism in skeletal muscle, and the amount produced is relatively constant within an individual. This parameter is utilized clinically to assess renal function. A level ≥20 mg/dL in human urine is considered normal. Diluted and substituted (with water) specimens will have creatinine concentrations <20 mg/dL.

Specific gravity for liquids is the ratio of the density of a substance (urine) to the density of water at the same temperature. Hence, it is a measurement of the concentration of dissolved solids in the urine. The specific gravity of normal human urine ranges between 1.003 and 1.030. High values may be caused by disease (kidney disease, glucosuria, liver disease, dehydration, adrenal insufficiency, and proteinuria), whereas low values may be caused by diabetes insipidus (DI). Dilution lowers the value toward 1.000, and adulteration with organic solvents such as methanol or ethanol can result in a value <1.000.

The pH of normal human urine is typically between 5.0 and 8.0. The reference range is 4.5–9.0. This parameter can be affected by diet, medications, and disease. Acidic urine may be caused by acidosis (respiratory and metabolic), uremia, severe diarrhea, starvation, and diets with a large intake of acid-containing fruit. In contrast, alkaline urine may be attributed to alkalosis, urinary tract infections, high-vegetable diets, and sodium bicarbonate. Individuals with dietary or disease-related causes of acidic or alkaline urine will consistently produce urine in that range, in contrast to an isolated high or low random urine caused by adulteration or substitution. If lemon juice or vinegar is added to urine, the pH is lowered. A high urine pH results from the addition of bleach or soap.
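
The three checks described above can be combined in a short sketch. The thresholds are the values quoted in this section (creatinine ≥20 mg/dL, specific gravity 1.003–1.030, pH 4.5–9.0); the class and function names are hypothetical, and a laboratory would substitute its own reference ranges.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UrineSpecimen:
    creatinine_mg_dl: float
    specific_gravity: float
    ph: float


def validity_flags(specimen: UrineSpecimen) -> List[str]:
    """List the findings that are inconsistent with normal human urine."""
    flags = []
    if specimen.creatinine_mg_dl < 20.0:
        flags.append("creatinine below 20 mg/dL")
    if not (1.003 <= specimen.specific_gravity <= 1.030):
        flags.append("specific gravity outside 1.003-1.030")
    if not (4.5 <= specimen.ph <= 9.0):
        flags.append("pH outside 4.5-9.0")
    return flags


# Hypothetical specimens, for illustration only.
print(validity_flags(UrineSpecimen(120.0, 1.015, 6.0)))  # no flags
print(validity_flags(UrineSpecimen(5.0, 1.001, 6.0)))    # looks dilute
```

A flag only indicates that the specimen warrants further review. Diet and disease can also move these values, so a flag does not by itself show adulteration or substitution.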

Methodology

Tests should be conducted as soon as possible after urine collection. Creatinine may be determined by dipstick or on an automated clinical chemistry analyzer, in both cases by a chemical reaction that produces a color. Specific gravity may be measured by refractometry, in which the refractive index of the urine is determined. Alternatively, the dipstick method is based on ionic strength. A procedure available for automated clinical chemistry analyzers utilizes urine chloride ion concentration and spectrophotometry. pH is measured with a pH meter or colorimetrically, either manually or on an automated clinical chemistry analyzer.

Laboratories also offer tests for common adulterants. These include specific tests for nitrite and glutaraldehyde, typically using colorimetric assays, or generalized tests for oxidants. The latter detect compounds that exert their action by oxidation, which include chromate and peroxidase. The method as performed on an automated clinical chemistry analyzer evaluates the reaction between a substrate and the oxidant in the sample, producing a color that can be measured at a specific wavelength.

Specimen Requirements

A random urine specimen should be refrigerated after collection and sent to the laboratory as soon as possible. Bacterial contamination of a urine sample may produce invalid pH results. Sodium azide should not be used as a preservative, as it may interfere with the oxidant test. Table 14-4 presents reference ranges.

TABLE 14–4. Reference Ranges for Urine Specimens

THERAPEUTIC DRUG MONITORING

Purpose

Therapeutic drug monitoring (TDM) is the determination of drug levels in blood. The purpose of such measurement is to optimize the dose in order to achieve maximum clinical effect. TDM is typically performed on drugs with a low therapeutic index.

Indications

Signs of toxicity.

Therapeutic effect not obtained.

Suspected noncompliance.

Drug has narrow therapeutic range.

To provide or confirm an optimal dosing schedule.

To confirm cause of organ toxicity (e.g., abnormal liver or kidney function tests).

Other diseases or conditions exist that affect drug utilization.

Drug interactions that have altered desired or previously achieved therapeutic concentration are suspected.

Drug shows large variations in utilization or metabolism between individuals.

Need medicolegal verification of treatment, cause of death or injury (e.g., suicide, homicide, accident investigation), or to detect use of forbidden drugs (e.g., steroids in athletes, narcotics).

Differential diagnosis of coma.

Applications

The clinician must be aware of the various influences on pharmacokinetics, including factors such as half-life, time to peak and to steady state, protein binding, and excretion.

The route of administration and sampling time after last dose of drug must be known for proper interpretation. For some drugs (e.g., quinidine), different assay methods produce different values, and the clinician must know the normal range for the test method used for the patient.

In general, peak concentrations alone are useful when testing for toxicity, and trough concentrations alone are useful for demonstrating a satisfactory therapeutic concentration. Trough concentrations are commonly used with such drugs as lithium, theophylline, phenytoin, carbamazepine, quinidine, tricyclic antidepressants, valproic acid, and digoxin. Trough concentrations can usually be drawn at the time the next dose is administered (this does not apply to digoxin). Both peak and trough concentrations are used to avoid toxicity but ensure bactericidal efficacy (e.g., gentamicin, tobramycin, vancomycin).

After IV or IM administration, blood should usually be sampled 30 minutes to 1 hour after administration has ended to determine peak concentrations (this is meant only as a general guide; the laboratory performing the tests should supply its own values).

Blood should be drawn at a time specified by that laboratory (e.g., 1 hour before the next dose is due to be administered). This trough concentration should ideally be greater than the minimum effective serum concentration.

If a drug is administered by IV infusion, blood should be drawn from the opposite arm.

The drug should have been administered at a constant rate for at least 4–5 half-lives before blood samples are drawn.

Unexpected test results may be due to interference by complementary and alternative medicines (e.g., high digoxin levels may result from interference from danshen, Chan Su, or ginseng).
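
The guideline of waiting at least 4–5 half-lives before sampling can be illustrated with a short calculation. The sketch assumes first-order elimination and constant-rate administration, under which the fraction of the steady-state concentration reached after n half-lives is 1 − 0.5^n. The function name is hypothetical.

```python
def fraction_of_steady_state(half_lives: float) -> float:
    """Fraction of the steady-state concentration reached after a given
    number of half-lives, assuming first-order elimination and
    constant-rate administration."""
    if half_lives < 0:
        raise ValueError("number of half-lives cannot be negative")
    return 1.0 - 0.5 ** half_lives


for n in range(1, 6):
    print(n, f"{fraction_of_steady_state(n):.1%}")
```

Under these assumptions the drug has reached about 94% of steady state after 4 half-lives and about 97% after 5, so a sample drawn earlier may underestimate the eventual concentration.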

Criteria

Available methodology must be specific and reliable.

Blood concentration must correlate with therapeutic and toxic effects.

Therapeutic window is narrow with danger of toxicity on therapeutic doses.

Correlation between blood concentration and dose is poor.

Clinical effect of drug is not easily determined.

Drugs for Which Drug Monitoring May Be Useful

Antiepileptic drugs (e.g., phenobarbital, phenytoin)

Theophylline

Antimicrobials (aminoglycosides [gentamicin, tobramycin, amikacin], chloramphenicol, vancomycin, flucytosine [5-fluorocytosine])

Antipsychotic drugs

Antianxiety drugs

Cyclic antidepressants

Lithium

Cardiac glycosides, antiarrhythmics, antianginal, antihypertensive drugs

Antineoplastic drugs

Immunosuppressant drugs

Anti-inflammatory drugs (e.g., NSAIDs, steroids)

Drugs of abuse: addiction treatment, pain management

Athletic performance enhancement drugs (e.g., androgenic anabolic steroids, erythropoietin)

Pharmacokinetics

Pharmacokinetics (PK) is the study of the time course of drugs in the body. PK seeks to relate the concentration of drug in a specimen to the amount of drug administered (dose). PK investigates

Absorption

Distribution

Metabolism

Excretion or elimination

Changes in these parameters affect drug concentrations.

Absorption

Absorption describes the process in which the drug or xenobiotic enters the bloodstream. For intravenous/intra-arterial administration, there is no absorption. Other common routes of administration include oral, intramuscular, subcutaneous, inhalation, rectal, intrathecal, oral mucosa, dermal, and intranasal. The following factors affect the bioavailability (amount absorbed compared with amount administered):

Surface area

Solubility

Blood supply

Concentration

pH

Molecular size and shape

Degree of ionization

Distribution

This describes the transfer of the drug from site of administration throughout the body. This is generally movement from the bloodstream to tissues. Therefore, it is a function of the blood supply to the tissues. A drug may rapidly be distributed to highly perfused tissues such as brain, heart, liver, and kidney, whereas slower distribution will occur for muscle, fat, and bone. Factors affecting drug absorption are also relevant to distribution. Plasma protein binding is an additional factor to consider.

Metabolism

Drugs are chemically altered to facilitate removal from the body. This process is performed mainly in the liver by enzymes. Other sites of enzyme activity include the GI tract, blood, kidney, and lung. Phase I metabolism describes transformation of functional groups on the drug molecule. Phase II reactions are known as conjugation reactions and involve addition of endogenous substances to render the compound more water soluble. The most common conjugation reaction involves the reaction of uridine diphosphate-glucuronic acid with hydroxyl or amino groups to form glucuronides. Opiates and benzodiazepines are highly glucuronidated prior to excretion.

Excretion

Removal of drug from the body typically occurs in urine from the kidney, feces from the liver, and breath from the lung. Drugs are also eliminated in sweat, breast milk, and sebum. Hepatic clearance, the removal of drug by the liver, depends on blood flow to the liver, which may be increased by food or phenobarbital and decreased by exercise, dehydration, disease (cirrhosis, CHF), and anesthetics. It also depends on the ability of the liver to extract drug from the bloodstream, which involves diffusion and carrier systems. Renal excretion is a function of filtration, secretion, and reabsorption. Again, the processes that affect transfer across biologic membranes must be considered.
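
The dependence of hepatic clearance on both blood flow and extraction can be sketched with the standard relationship in which clearance equals hepatic blood flow multiplied by the extraction ratio. This formula is not stated in the text above and is offered here as an illustration; the function name and the numeric values are hypothetical.

```python
def hepatic_clearance(blood_flow_l_per_min: float, extraction_ratio: float) -> float:
    """Hepatic clearance (L/min) as blood flow times extraction ratio.

    The extraction ratio is the fraction of drug removed from the blood
    in a single pass through the liver, so it must lie between 0 and 1.
    """
    if blood_flow_l_per_min < 0:
        raise ValueError("blood flow cannot be negative")
    if not 0.0 <= extraction_ratio <= 1.0:
        raise ValueError("extraction ratio must be between 0 and 1")
    return blood_flow_l_per_min * extraction_ratio


# Hypothetical values: the same drug at normal and at reduced hepatic blood flow.
print(hepatic_clearance(1.5, 0.7))
print(hepatic_clearance(1.0, 0.7))
```

With the extraction ratio held constant, a fall in hepatic blood flow lowers clearance in proportion, which is consistent with the higher drug concentrations expected in conditions such as CHF.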

Conclusions

In general, increases in serum/plasma drug concentrations may be observed in

1. Overdose

2. Coingestion of drugs that compete for metabolic enzymes

3. Liver and renal failure/insufficiency

4. Age-related increases due to loss in enzyme activity, decreases in absorption, blood flow, intestinal motility

5. Genetic polymorphisms (slow metabolizers)

6. Movement of drugs from tissue depots

In general, decreases in serum/plasma drug concentrations may be observed in

1. Decreased oral bioavailability

2. Increased metabolism due to coingestion of drugs that induce metabolic enzymes such as phenobarbital, phenytoin

3. Increased renal clearance

4. Increases in plasma proteins (results in decreases in observed serum drug concentrations, since most tests measure unbound or free drug concentrations)

Alternate Matrices

Drugs may be detected in nontraditional matrices

Meconium

Oral fluid (saliva)

Sweat

Hair

The majority of hospital laboratories do not offer testing in these specimens. Sample pretreatment prior to testing is often required; therefore, these tests are not offered on a “stat” basis. Special sample collection devices are available for sweat and oral fluid. Hair testing provides a longer detection window for drugs than does serum or urine and is generally reflective of chronic exposure.

Units

Drug concentrations are reported using several concentration units.

ng/mL, which are equivalent to mcg/L

Note that “mcg” is used throughout this text because “ug” and “μg” are prohibited abbreviations. When handwritten, the “u” may be misread as “m.”

mcg/mL, which are equivalent to mg/L

Note that ethanol concentrations in the clinical setting are typically reported in mg/dL. Conversion to g% (g/dL) is often requested; for example, 80 mg/dL is equivalent to 0.08 g/dL. To convert mg/dL to g/dL, divide by 1,000. Conversely, to convert g/dL to mg/dL, multiply by 1,000.
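
The unit equivalences above can be checked with a small conversion sketch. The helper name and the unit tables are assumptions made for illustration; the function converts any mass/volume unit to grams per liter and back.

```python
import math

# Conversion factors to grams and to liters.
MASS_IN_GRAMS = {"ng": 1e-9, "mcg": 1e-6, "mg": 1e-3, "g": 1.0}
VOLUME_IN_LITERS = {"mL": 1e-3, "dL": 1e-1, "L": 1.0}


def convert_concentration(value: float, from_unit: str, to_unit: str) -> float:
    """Convert between mass/volume units such as 'mg/dL' and 'g/dL'."""
    def grams_per_liter(unit: str) -> float:
        mass, volume = unit.split("/")
        return MASS_IN_GRAMS[mass] / VOLUME_IN_LITERS[volume]

    return value * grams_per_liter(from_unit) / grams_per_liter(to_unit)


# 80 mg/dL of ethanol expressed in g/dL (g%).
print(round(convert_concentration(80.0, "mg/dL", "g/dL"), 6))
# ng/mL and mcg/L are numerically equivalent, as are mcg/mL and mg/L.
print(math.isclose(convert_concentration(1.0, "ng/mL", "mcg/L"), 1.0))
print(math.isclose(convert_concentration(1.0, "mcg/mL", "mg/L"), 1.0))
```

Results are rounded or compared with a tolerance because the intermediate factors are floating-point values.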

ADDICTION MEDICINE

Purpose

Provision of professional health care services to treat a diagnosed substance use disorder

Application

Drug testing is performed during treatment to assess and monitor the clinical status of an individual. Testing should be limited to that which is medically necessary. Testing is appropriate in inpatient and outpatient settings and is especially important at the beginning of treatment. Periodic random drug testing in this population may act as a deterrent to substance use and aids in identifying lapses in treatment. In addition, such testing may identify compounds that have potential drug interactions with a prescribed medication.

Screening Methods and Limitations

Point of care or laboratory immunoassay tests are the most common screening tests utilized.

Urine, serum, oral fluid, and hair are all potentially useful specimens to monitor drug abstinence or compliance with prescribed medications.

Oral fluid and serum are the preferred specimens in assessing impairment.

Specialized laboratory services are usually required for drug testing in oral fluid and hair.

Positive screening results should be confirmed before any adverse action is taken against an individual.

A typical drug testing panel for addiction medicine includes

Urine:
