The Modern Approach to Patient Safety: Systems Thinking and the Swiss Cheese Model

The traditional approach to medical errors has been to blame the provider who delivers care directly to the patient, acting at what is sometimes called the “sharp end” of care: the doctor performing the transplant operation or diagnosing the patient’s chest pain, the nurse hanging the intravenous medication bag, or the pharmacist preparing the chemotherapy. Over the last decade, we have recognized that this approach overlooks the fact that most errors are committed by hardworking, well-trained individuals, and such errors are unlikely to be prevented by admonishing people to be more careful, or by shaming, firing, or suing them.

The modern patient safety movement replaces “the blame and shame game” with an approach known as systems thinking. This paradigm acknowledges the human condition (namely, that humans err) and concludes that safety depends on creating systems that anticipate errors and either prevent or catch them before they cause harm. Such an approach has been the cornerstone of safety improvements in other high-risk industries but was ignored in medicine until the past decade.

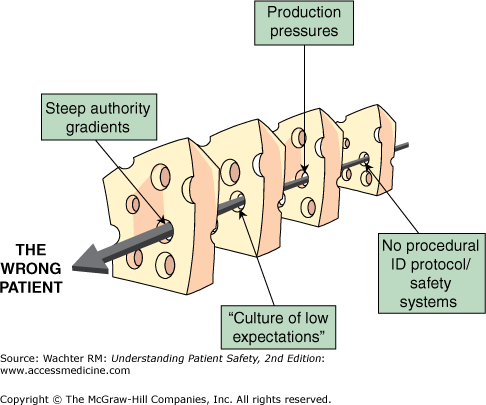

British psychologist James Reason’s “Swiss cheese model” of organizational accidents has been widely embraced as a mental model for system safety [1,2] (Figure 2-1). This model, drawn from innumerable accident investigations in fields such as commercial aviation and nuclear power, emphasizes that in complex organizations, a single “sharp-end” error (by the person in the control booth in the nuclear plant, or the surgeon making the incision) is rarely enough to cause harm. Instead, such errors must penetrate multiple incomplete layers of protection (“layers of Swiss cheese”) to cause a devastating result. Reason’s model highlights the need to focus less on the (futile) goal of trying to perfect human behavior and more on shrinking the holes in the Swiss cheese (sometimes referred to as latent errors) and creating multiple overlapping layers of protection, to decrease the probability that the holes will ever align and let an error slip through.
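The arithmetic behind overlapping layers can be made concrete with a minimal sketch. It assumes that each layer fails independently of the others, which is a simplification (in real organizations, failures are often correlated), and the probabilities and the function name `chance_error_reaches_patient` are hypothetical, chosen only for illustration rather than taken from any study.

```python
from math import prod


def chance_error_reaches_patient(hole_probabilities):
    """Probability that an error passes through every layer of protection.

    Each entry is the (hypothetical) chance that one layer fails to catch
    the error. Multiplying them assumes the layers fail independently.
    """
    return prod(hole_probabilities)


# Illustrative numbers only; they are assumptions, not measured rates.
one_layer = chance_error_reaches_patient([0.1])
three_layers = chance_error_reaches_patient([0.1, 0.1, 0.1])
three_tighter_layers = chance_error_reaches_patient([0.05, 0.05, 0.05])

print(f"one layer:            {one_layer:.4%}")
print(f"three layers:         {three_layers:.4%}")
print(f"three tighter layers: {three_tighter_layers:.4%}")
```

Under these assumptions, both strategies named in the model help: adding layers multiplies in further small factors, and shrinking the holes makes each factor smaller.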

The Swiss cheese model emphasizes that analyses of medical errors need to focus on their “root causes”: not just the smoking gun, sharp-end error, but all the underlying conditions that made an error possible (or, in some situations, inevitable) (Chapter 14). A number of investigators have developed schemas for categorizing the root causes of errors; the most widely used, by Charles Vincent, is shown in Table 2-1 [3,4]. The schema explicitly forces the error reviewer to ask whether there should have been a checklist or read-back, whether the resident was too fatigued to think clearly, or whether the young nurse was too intimidated to speak up when she suspected an error.

| Framework | Contributory Factors | Examples of Problems That Contribute to Errors |
|---|---|---|
| Institutional | Regulatory context; medicolegal environment | Insufficient priority given by regulators to safety issues; legal pressures against open discussion, preventing the opportunity to learn from adverse events |
| Organization and management | Financial resources and constraints; policy standards and goals; safety culture and priorities | Lack of awareness of safety issues on the part of senior management; policies leading to inadequate staffing levels |
| Work environment | Staffing levels and mix of skills; patterns in workload and shifts; design, availability, and maintenance of equipment; administrative and managerial support | Heavy workloads, leading to fatigue; limited access to essential equipment; inadequate administrative support, leading to reduced time with patients |
| Team | Verbal communication; written communication; supervision and willingness to seek help; team leadership | Poor supervision of junior staff; poor communication among different professions; unwillingness of junior staff to seek assistance |
| Individual staff member | Knowledge and skills; motivation and attitude; physical and mental health | Lack of knowledge or experience; long-term fatigue and stress |
| Task | Availability and use of protocols; availability and accuracy of test results | Unavailability of test results or delay in obtaining them; lack of clear protocols and guidelines |
| Patient | Complexity and seriousness of condition; language and communication; personality and social factors | Distress; language barriers between patients and caregivers |

Errors at the Sharp End: Slips versus Mistakes

Even though we now understand that the root cause of hundreds of thousands of errors each year lies at the “blunt end,” the proximate cause is often an act committed (or neglected, or performed incorrectly) by a caregiver. Even as we embrace the systems approach as the most useful paradigm, it would be wrong not to tackle these human errors as well. After all, even a room filled with flammable gas will not explode unless someone strikes a match.

In thinking about human errors, it is useful to differentiate between “slips” and “mistakes,” and to do this, one must appreciate the difference between conscious behavior and automatic behavior. Conscious behavior is what we do when we “pay attention” to a task, and it is especially important when we are doing something new, like learning to play the piano or program our DVD player. On the other hand, automatic behaviors are the things we do almost unconsciously: they may have required a lot of thought initially, but after a while we do them virtually “in our sleep.” Humans prefer automatic behaviors because they take less energy, have predictable outcomes, and allow us to “multitask,” that is, to do other things at the same time. Some of these other tasks are also automatic behaviors, like driving a car while drinking coffee or talking on the phone, but some require conscious thought. These latter moments (when a doctor tries to write a “routine” prescription while also pondering his approach to a challenging patient) are particularly risky, both for errors in the routine, automatic process (“slips”) and for errors in the conscious process (“mistakes”).

Now that we’ve distinguished the two types of tasks, let’s turn to slips versus mistakes. Slips are inadvertent, unconscious lapses in the performance of some automatic task: you absently drive to work on Sunday morning because your automatic behavior kicks in and dictates your actions. Slips occur most often when we put an activity on “autopilot” so we can manage new sensory inputs, think through a problem, or deal with emotional upset, fatigue, or stress (a pretty good summing up of most healthcare environments). Mistakes, on the other hand, result from incorrect choices. Rather than blundering into them while we are distracted, we usually make mistakes because of insufficient knowledge, lack of experience or training, inadequate information (or inability to interpret available information properly), or applying the wrong set of rules or algorithms to a decision (we’ll delve into this area more deeply when we discuss diagnostic errors in Chapter 6).

When measured on an “errors per action” yardstick, conscious behaviors are more prone to mistakes than automatic behaviors are prone to slips. However, slips probably represent the greater overall threat to patient safety because so much of what healthcare providers do is automatic. Doctors and nurses are most likely to slip while doing something they have done correctly a thousand times: asking patients if they are allergic to any medications before writing a prescription, remembering to verify a patient’s identity before sending her off to a procedure, or loading a syringe with heparin (and not insulin) before flushing an IV line (the latter two cases are described in Chapters 15 and 4, respectively).
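The distinction between a per-action error rate and the overall burden of errors comes down to simple multiplication: expected errors equal the number of actions times the error rate per action. The sketch below illustrates this with hypothetical figures (the counts, the rates, and the function name `expected_errors` are assumptions for illustration, not data from any study).

```python
def expected_errors(actions, error_rate_per_action):
    """Expected number of errors: volume of actions times rate per action."""
    return actions * error_rate_per_action


# Hypothetical workload for one provider over one shift (illustrative only).
conscious_actions, mistake_rate = 50, 0.01     # fewer actions, higher rate
automatic_actions, slip_rate = 2000, 0.001     # many more actions, lower rate

expected_mistakes = expected_errors(conscious_actions, mistake_rate)
expected_slips = expected_errors(automatic_actions, slip_rate)

print(f"expected mistakes: {expected_mistakes}")
print(f"expected slips:    {expected_slips}")
```

With these assumed numbers, the per-action rate of mistakes is ten times the rate of slips, yet slips account for more expected errors because automatic actions are so much more numerous.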

The complexity of healthcare work adds to the risks. Like pilots, soldiers, and others trained to work in high-risk occupations, doctors and nurses are programmed to do many specific tasks, under pressure, with a high degree of accuracy. But unlike most other professions, medical jobs typically combine three very different types of tasks: lots of conscious behaviors (complex decisions, judgment calls), many “customer” interactions, and innumerable automatic behaviors. Physician training, in particular, has traditionally emphasized the highly cognitive aspects with a small focus on the human interactions. But, until very recently, it has completely ignored the importance, and risky nature, of automatic behaviors.

With all of this in mind, how then should we respond to the inevitability of slips? Our typical response would be to reprimand (if not fire) a nurse for giving the wrong medication, admonishing her to “be more careful next time!” Even if the nurse did take more care with that task, she would be just as likely to commit a different error while automatically carrying out a different task in a different setting. As James Reason reminds us, “Errors are largely unintentional. It is very difficult for management to control what people did not intend to do in the first place.” [2]
